Techbase had already integrated the Raspberry Pi CM4 module into several industrial products, including the ModBerry 500 CM4 DIN Rail industrial computer, the ModBerry AI GATEWAY 9500-CM4 with a Google Edge TPU, and the ClusBerry 9500-CM4, which combines several Raspberry Pi CM4 modules into a DIN Rail mountable system. The company has now announced another Raspberry Pi Compute Module 4 gateway, iModGATE-AI, specially designed for failure monitoring and predictive maintenance of IoT installations. It also embeds a Google Coral Edge TPU AI module to accelerate computer vision. iModGATE-AI gateway specifications:

- SoM – Raspberry Pi Compute Module 4 with up to 32GB eMMC
- AI accelerator – Google Coral Edge TPU AI module
- Video Output – HDMI port
- Connectivity – Gigabit Ethernet
- USB – USB 2.0 port
- Sensors – 9-axis motion tracking module with 3-axis gyroscope with programmable FSR, 3-axis accelerometer with programmable FSR, 3-axis compass (magnetometer)
- Expansion – 2x 16-pin block terminal
- Advanced […]

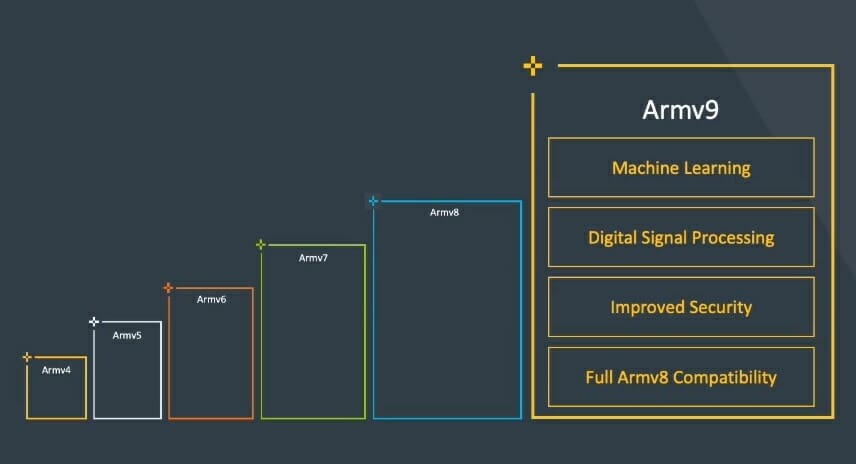

Armv9 architecture to focus on AI, security, and “specialized compute”

Armv8 was announced in October 2011 as the first 64-bit architecture from Arm, while keeping compatibility with 32-bit Armv7 code. Since then we’ve seen plenty of Armv8 cores, from the energy-efficient Cortex-A35 to the powerful Cortex-X1, as well as some custom cores from Arm partners. But Arm has now announced Armv9, its first new architecture in nearly ten years, which builds upon Armv8 but adds blocks for artificial intelligence, security, and “specialized compute”, basically hardware accelerators or instructions optimized for specific tasks. Armv9 still supports AArch32 and AArch64 instructions, NEON, Crypto Extensions, TrustZone, etc., and is more an evolution of Armv8 than a completely new architecture. Some of the new features brought about by Armv9-A include:

- Scalable Vector Extension v2 (SVE2) – a superset of the Armv8-A SVE found in some Arm supercomputer cores, with the addition of fixed-point arithmetic support, vector length in multiples […]

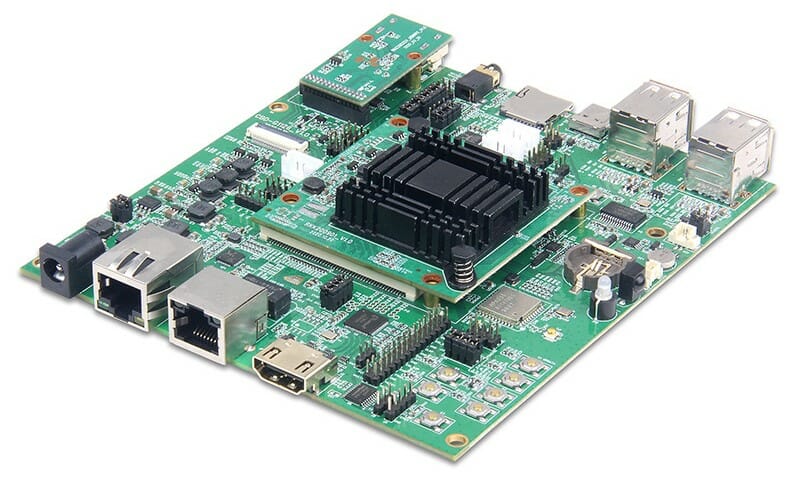

Geniatech DB1126 development board features RV1126 SoC for AI applications

Since the release of the Rockchip RV1126 SoC, we have covered the detailed specifications of the chip and the RV1126-based Firefly dual-lens AI camera module. To take advantage of the SoC’s hybrid ARM and RISC-V cores, Geniatech has announced the DB1126 development board, a new addition to its long list of ARM embedded developer boards designed to tackle any task requiring artificial intelligence. The Developer Board 1126 gets its name from the Rockchip RV1126 SoC, which combines a quad-core ARM Cortex-A7 processor, a RISC-V MCU, and a neural network accelerator delivering up to 2.0 TOPS with INT8/INT16 support. As the company says, “DB1126 is a high-performance, versatile, high-computing (2TOPS) general-purpose, intelligent integrated carrier board + core board.” The board is not just a simple development board, but a carrier board with a core board connected via a board-to-board (BTB) double groove connection. Geniatech has provided the DB1126 development […]

Snapdragon 780G 5G SoC announced with 12 TOPS AI performance, triple ISP, WiFi 6E

Qualcomm has just announced an update to its Snapdragon 765G 5G processor: the Snapdragon 780G 5G mobile platform, equipped with a Qualcomm Spectra 570 triple ISP (Image Signal Processor) and a 6th generation Qualcomm AI Engine that delivers up to 12 TOPS of AI performance, or twice that of the Snapdragon 765G 5G SoC. As a “G” part, the Snapdragon 780G comes with Snapdragon Elite Gaming features to improve the gaming experience, including updatable GPU drivers, ultra-smooth gaming, and True 10-bit HDR gaming. The Snapdragon 780G also upgrades its 5G modem to the Snapdragon X53, with peak download speeds of 3.3 Gbps on sub-6 GHz frequencies, and supports the premium Wi-Fi 6/6E and Bluetooth audio features offered on the Snapdragon 888 premium SoC. Snapdragon 780G 5G (SM7350-AB) key features and specifications:

- CPU – Octa-core Kryo 670 processor with 1x Arm Cortex-A78 @ 2.4GHz, 3x Arm Cortex-A78 @ 2.2GHz, 4x Arm Cortex-A55 @ 1.9GHz
- GPU – Adreno 642 with support […]

Made in Thailand CorgiDude RISC-V AI board aims to teach machine learning

There’s a relatively small but active maker community in Thailand, and we’ve covered or even reviewed some made-in-Thailand boards, including ESP8266 and ESP32 boards, a 3G Raspberry Pi HAT, and the KidBright education platform, among others. MakerAsia has developed CorgiDude, a board based on the version of the Sipeed M1 RISC-V AI module with built-in WiFi, offered as part of a kit with a camera and a display to teach machine learning and artificial intelligence with MicroPython or C/C++ programming. CorgiDude board specifications:

- AI Wireless Module – Sipeed M1W module with:
  - Kendryte K210 dual-core 64-bit RISC-V RV64IMAFDC CPU @ 400 MHz with FPU, various AI accelerators (KPU, FFT accelerator…), 8MiB on-chip SRAM
  - Espressif ESP8285 single-core 2.4 GHz WiFi 4 SoC plus IPEX antenna connector
- Storage – MicroSD card slot
- Camera I/F for 2MP OV2640 sensor up to 1280 × 1024 (SXGA) @ 30 fps, SVGA @ 30 fps, or CIF @ […]

Annke CZ400 AI security camera reviewed with basic and smart events

At the beginning of this month, I started the review of the Annke CZ400 AI security camera by listing its specifications, unboxing the device, and doing a partial teardown, notably to install a MicroSD card. In theory, the camera comes with more advanced AI features than the Reolink RLC-810A 4K CCTV camera, which only supports people and vehicle detection, as the Annke security camera can handle face detection, line crossing, unattended baggage detection, and other smart event detections. So let’s see how it performs.

Annke CZ400 installation

The first challenge was the installation: I had told the company I was not willing to install the camera on the ceiling since I’m renting, and preferred wall-mounting. After checking that the user manual included a wall mount, I decided to go ahead and get the review unit. But sadly, the wall mount is not included in the package, and Annke even told me they […]

ADLINK launches SMARC Short Size Module, Devkit with NXP i.MX 8M Plus

We saw many i.MX 8M Plus modules with a built-in AI accelerator announced at Embedded World 2021, including two SMARC modules from Congatec and iWave Systems. ADLINK has added another i.MX 8M Plus module compliant with the SMARC 2.1 “short” standard, the LEC-IMX8MP system-on-module equipped with up to 8GB RAM and 128GB eMMC flash, as well as a development kit called the I-Pi SMARC IMX8M Plus prototyping platform. LEC-IMX8MP module specifications:

- SoC – NXP i.MX 8M Plus with quad-core ARM Cortex-A53 processor, Vivante GC380 2D GPU and GC7000UL 3D GPU, 1080p60 video decoder & encoder, optional 2.3 TOPS Neural Processing Unit (NPU)
- System Memory – 2/4/8GB LPDDR4L-4266
- Storage – 16, 32, 64, or 128GB eMMC flash (build option)
- Wireless – Optional 802.11 ac/a/b/g/n WiFi 5 2x2 MIMO and Bluetooth 5.0 module
- 314-pin MXM 3.0 edge connector
  - Storage – 1x SDIO (4-bit) compatible with SD/SDIO standard, up to version 3.0
  - Display – […]

UP Squared Pro SBC gets more I/Os, 3x M.2 sockets, TPM 2.0 security chip

The UP Squared Apollo Lake SBC was launched in 2016 via a crowdfunding campaign, with prices starting at just 89 Euros for the model with 2GB RAM, 16GB storage, and a dual-core Intel Celeron N3350 processor. AAEON has now announced an update simply called UP Squared Pro with many of the same features, but with greater expandability and more I/Os, for example to add 5G modules and AI accelerators through one of its three M.2 sockets, as well as improved security via a TPM 2.0 chip. UP Squared Pro (UPN-APL01) specifications with highlights in bold or strikethrough in comparison to the UP Squared specifications (in 2016):

- SoC (one or the other)
  - Intel Celeron N3350 dual-core “Apollo Lake” processor @ 1.1 GHz / 2.4 GHz with 12 EU Intel HD graphics 500 @ 200 MHz / 650 MHz (6W TDP)
  - Intel Pentium N4200 quad-core “Apollo Lake” processor @ 1.1 GHz / 2.5 […]