In the first part of the Foscam SPC WiFi spotlight camera review, we checked out the specifications, the package content, and some of the main components of the camera, including its SigmaStar SSC337DE processor and Realtek RTL8822CS dual-band WiFi 5 module. I’ve now had time to play with the camera and tested it with the Foscam Android app, including human detection, and enabled ONVIF support to use the camera with compatible third-party apps or programs. Foscam SPC installation and setup with Android app Foscam SPC camera is designed to be wall-mounted, but for this review, I simply attached it to the branch of a small tree. Note that unlike the other cameras I’ve tested, the camera angle can only be adjusted up and down or rotated, and there’s no direct left or right adjustment. I’ve also connected the power adapter to power up the camera, but I did not install […]

Zidoo M6 RK3566 mini PC & SBC supports GbE, WiFi 6, 5G connectivity

Zidoo M6 is both a complete mini PC and a single board computer based on the Rockchip RK3566 processor with up to 8GB RAM and plenty of peripheral connectivity options, including built-in Gigabit Ethernet, WiFi 6, Bluetooth 5, and support for 5G modems. Several years ago, Zidoo served the TV box market, but they’ve now veered towards higher-end media players, digital signage players, and industrial applications. While we’ve already seen some RK3566 TV boxes on the market, using the AIoT processor does not make much sense in this type of product, and instead, Zidoo M6 targets AI Edge gateways, digital signage, and other AIoT products. Zidoo M6 specifications: SoC – Rockchip RK3566 with a quad-core Cortex-A55 processor @ up to 1.8GHz, Arm Mali-G52 2EE GPU with support for OpenGL ES 1.1/2.0/3.2, OpenCL 2.0, Vulkan 1.1, 0.8 TOPS AI accelerator, 4K H.265/H.264/VP9 video decoder, 1080p100 H.265/H.264 video encoder. System Memory […]

Foscam SPC WiFi Spotlight Camera Review – Part1: Unboxing and Teardown

I’ve been reviewing a few IP cameras with built-in AI features, including Vacom Cam, Reolink RLC-810A, and the Annke CZ400 AI security camera, which had by far the most advanced features, going beyond human detection with luggage monitoring, line-crossing detection, and many more. Today, I’ve received another model with basic human detection. But the Foscam SPC security camera also happens to come with a motion-activated spotlight, and it is the first camera I’ve ever received with support for dual-band WiFi, meaning 2.4GHz or 5 GHz WiFi can be used as needed. In the first part of the review, I’ll go through the specs, do an unboxing, and go through teardown photos to check the internals. Foscam SPC key features and specifications Some of the highlights listed in the user manual and package: Camera 4MP camera up to 2560×1440 resolution @ 25 fps, 156° field of view (diagonal) HDR support 2x white LED […]

Vecow EAC-2000 fanless embedded system is powered by NVIDIA Jetson Xavier NX

Vecow EAC-2000 series fanless embedded system features the NVIDIA Jetson Xavier NX module for the deployment of AI vision and industrial applications including traffic vision, intelligent surveillance, auto optical inspection, Smart Factory, AMR/AGV, and other AIoT/Industry 4.0 applications. The computer comes with up to four Fakra-Z connectors to connect GMSL cameras, as well as four Gigabit Ethernet ports, two of which support PoE+, takes 9V to 50V wide range DC input, and can operate in a wide temperature range from -25°C to 70°C. Two models from the Vecow EAC-2000 embedded system family are currently offered with the following specifications: System-on-Module – NVIDIA Jetson Xavier NX with CPU – 6-core NVIDIA Carmel ARM v8.2 64-bit CPU GPU – 384-core NVIDIA Volta GPU with 48 Tensor Cores DL Accelerator – 2x NVDLA Engines System Memory – 8GB LPDDR4x DRAM Storage – 16GB eMMC flash Storage – M.2 Key M Socket (2280) for SSD, […]

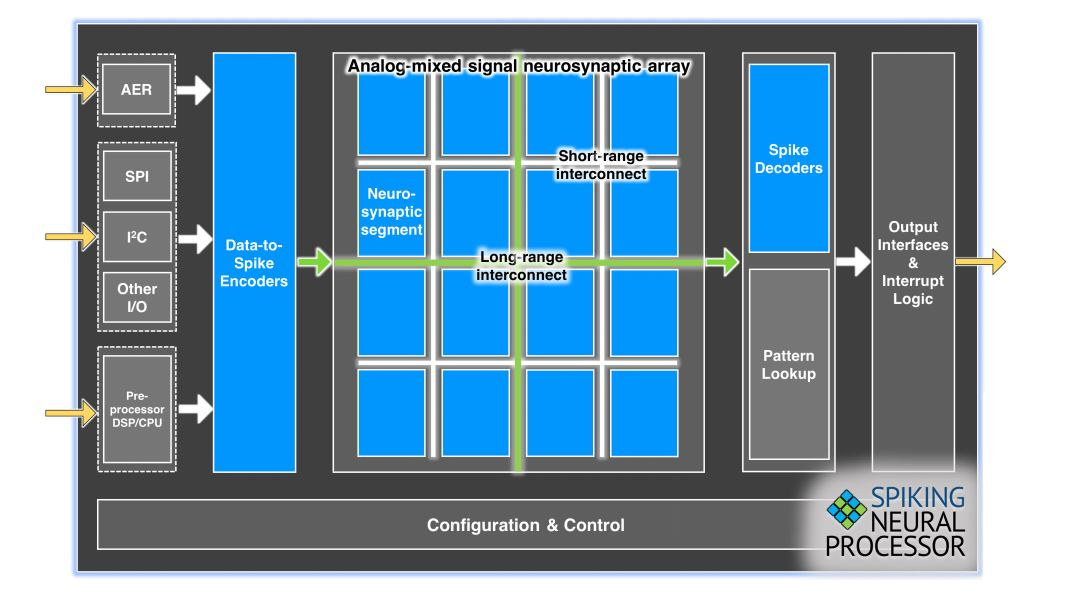

Innatera neuromorphic AI accelerator for spiking neural networks (SNN) enables sub-mW AI inference

Most AI accelerators currently rely on CNNs (convolutional neural networks) to perform AI inference in a much faster and more efficient way than on CPU cores, or even GPUs. But there’s another type of neural network, namely spiking neural networks (SNN), that uses the timing of spikes in an electrical signal to perform pattern recognition tasks in a way similar to neurons in the brain. The claims in terms of efficiency are quite unbelievable, with up to 10,000 times more performance per watt than microprocessors and digital accelerators, 500 times lower energy, and 100 times shorter latency. Several companies are working on neuromorphic AI accelerators for spiking neural networks, notably Prophesee, which focuses on image processing, and Innatera, which is working on an ultra-low-power AI accelerator handling audio, health, and radar for sound and speech recognition, vital signs monitoring, elderly person fall sensors, etc… Innatera recently provided additional information about […]
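
To make the spike-timing idea above more concrete, here is a minimal Python sketch of a leaky integrate-and-fire neuron, a common textbook model for spiking networks. It is only an illustration and not Innatera's design: the function name `simulate_lif` and the `threshold`, `leak`, and `reset` values are assumptions picked for the example.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Return the time steps at which a leaky integrate-and-fire neuron spikes.

    All parameter values are illustrative assumptions, not taken from any
    real neuromorphic chip.
    """
    potential = reset
    spike_times = []
    for t, current in enumerate(input_current):
        # The membrane potential decays (leaks) and integrates the new input.
        potential = leak * potential + current
        if potential >= threshold:
            # Crossing the threshold emits a spike and resets the potential.
            spike_times.append(t)
            potential = reset
    return spike_times


# A weak and a strong constant input over ten time steps.
weak = simulate_lif([0.3] * 10)
strong = simulate_lif([0.6] * 10)
print("weak input spikes at:", weak)
print("strong input spikes at:", strong)
```

With these assumed parameters, the stronger input makes the neuron fire earlier and more often, so the information is carried by when the spikes occur rather than by a dense numeric activation as in a CNN layer.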

MAIX-II A AI camera board combines Allwinner R329 smart audio processor with USB-C camera

Earlier this year, we wrote about Sipeed MAIX-II Dock AIoT vision devkit with an Allwinner V831 camera processor with a small 200 MOPS NPU, an Omnivision SP2305 2MP camera sensor, and a 1.3-inch display. But for some reason, which could be supply issues, Sipeed has designed a much different variant called MAIX-II A with a board based on Allwinner R329 smart audio processor, a 720p30 USB camera module, and a 1.5-inch display. MAIX-II A board specifications: Main M.2 module – Maix-II A module with Allwinner R329 dual-core Cortex-A53 processor @ 1.5 GHz, 256MB DDR3 on-chip, a dual-core HIFI4 DSP @ 400 MHz, and Arm China AIPU AI accelerator for up to 256 MOPS, plus Wi-Fi & BLE and a footprint for an SPI Flash. Storage – MicroSD card socket Display – 1.5-inch LCD display with 240×240 resolution Audio – Dual microphones, 3W speakers Camera – 720p USB-C camera module based […]

XUAN-Bike self-balancing, self-riding bicycle relies on flywheel, 22 TOPS Huawei Ascend A310 AI processor

After Huawei engineer Peng Zhihui Jun fell off his bicycle, he decided he should create a self-balancing, self-riding bicycle, and ultimately this gave birth to the XUAN-Bike, with XUAN standing for eXtremely, Unnatural Auto-Navigation, and also happening to be an old Chinese name for cars. The bicycle relies on a flywheel and a control board with ESP32 and MPU6050 IMU for stabilization connected over a CAN bus to the motors, as well as the Atlas 200 DK AI Developer Kit equipped with the 22 TOPS Huawei Ascend A310 AI processor consuming under 8W connected to a 3D depth camera and motor for self-riding. It’s not the first time we’ve seen this type of bicycle or even motorcycle, but the XUAN-Bike design is also fairly well-documented with the hardware design (electronics + 3D Fusion360 CAD files) and some documentation in Chinese uploaded to GitHub. The software part has not been released so […]

Station M2 business-card sized Android 11 mini PC, also supports Ubuntu & Buildroot

After introducing the Station P2 Rockchip RK3568 mini PC in March of this year, Firefly has now launched another, cheaper model with the ultra-thin Station M2 computer based on the company’s ROC-RK3566-PC single board computer equipped with the Rockchip RK3566 SoC. Station M2 is only slightly larger than a business card, but packs up to 8GB RAM, M.2 SSD storage, HDMI 2.0, Gigabit Ethernet, and USB 3.0/2.0 ports. Station M2 specifications: SoC – Rockchip RK3566 with a quad-core Cortex-A55 processor @ up to 1.8GHz, Arm Mali-G52 2EE GPU with support for OpenGL ES 1.1/2.0/3.2, OpenCL 2.0, Vulkan 1.1, 0.8 TOPS AI accelerator, 4K H.265/H.264/VP9 video decoder, 1080p100 H.265/H.264 video encoder. System Memory – 2GB or 4GB LPDDR4 (8GB optional) Storage – 32GB or 64GB eMMC (128GB optional), M.2 PCIe 2.0 socket for 2242 NVMe SSD, MicroSD card socket Video Output – 1x HDMI port up to 4Kp60 Audio – 3.5mm headphone jack, […]