Traverse Ten64 is a networking platform designed for 4G/5G gateways, local edge gateways for cloud architectures, IoT gateways, and network-attached-storage (NAS) devices for home and office use.

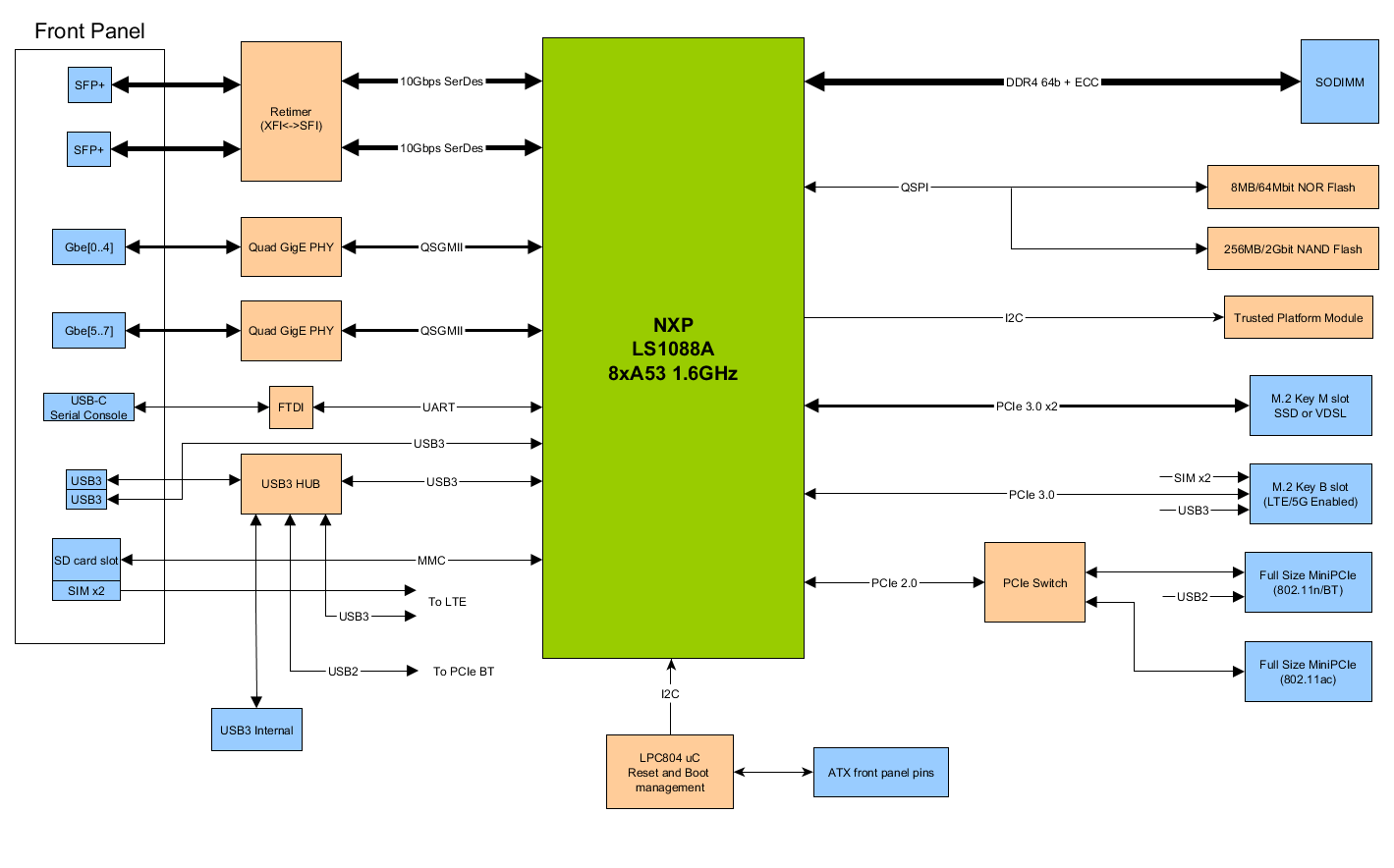

The Ten64 system runs mainline Linux on an NXP Layerscape LS1088A octa-core Cortex-A53 communication processor with ECC memory support, and offers eight Gigabit Ethernet ports, two 10GbE SFP+ cages, as well as mini PCIe and M.2 expansion sockets.

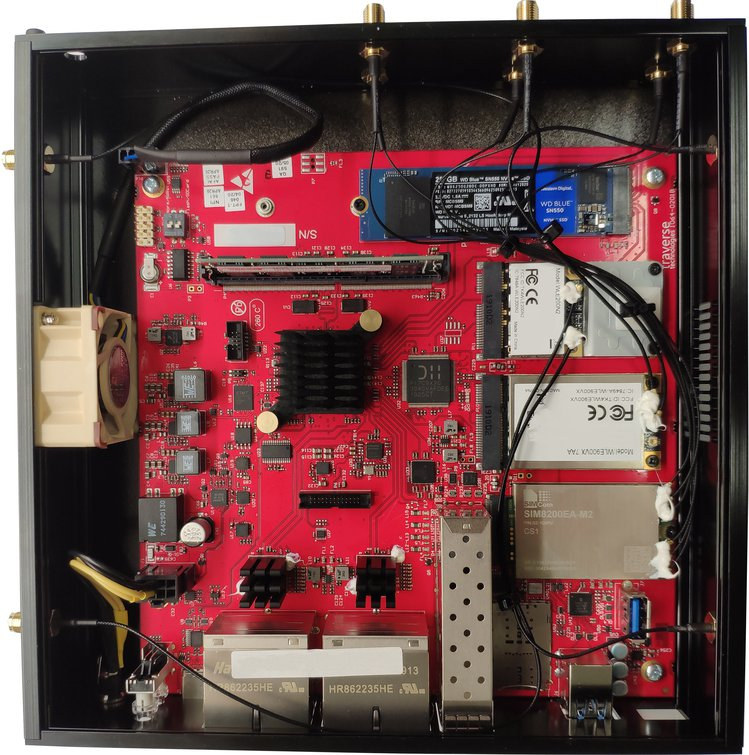

- SoC – NXP QorIQ LS1088A octa-core Cortex-A53 processor @ 1.6 GHz, 64-bit with virtualization, crypto, and IOMMU support

- System Memory – 4GB to 32GB DDR4 SO-DIMMs (including ECC) at 2100 MT/s

- Storage

  - 8 MB onboard QSPI NOR flash

  - 256 MB onboard NAND flash

  - NVMe SSDs via M.2 Key M

  - microSD socket multiplexed with SIM2

- Networking

  - 8x 1000Base-T Gigabit Ethernet ports (RJ45)

  - 2x 10GbE SFP+ cages

  - Optional wireless M.2/mPCIe card plus 3-choose-2 nanoSIM/microSD socket and up to 11x SMA connectors for antennas

- USB – 2x USB 3.0 ports, 1x USB 3.0 interface via internal header, USB-C port for serial console

- Expansion

  - 1x M.2 Key M (PCIe 3.0 x2)

  - 2x miniPCIe (PCIe 2.0)

  - 1x M.2 Key B (PCIe 3.0 x1 + USB 3)

- Power Supply – 12 VDC via 8-pin connector, ATX 12V and 2.5 mm DC adapters available

- Power Consumption – < 20 W (typ.)

- Dimensions

  - Board – 175 mm x 170 mm (compatible with Mini-ITX)

  - Enclosure – 200 mm x 200 mm x 45 mm (approximately 1U high, metal construction, internal cooling fan)
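As a quick sanity check on the networking figures in the list above, the short Python sketch below adds up the wired Ethernet ports and their nominal line rates. The port counts and speeds come from the specifications; the function names are made up for illustration, and the result is a simple sum of link speeds, not a measured or guaranteed forwarding throughput.

```python
# Port counts and nominal speeds taken from the specifications list.
# Each entry is (number of ports, Gbit/s per port).
PORTS = {
    "1000Base-T RJ45": (8, 1),
    "10GbE SFP+": (2, 10),
}


def total_ports(ports):
    """Return the total number of wired Ethernet interfaces."""
    return sum(count for count, _ in ports.values())


def aggregate_line_rate_gbps(ports):
    """Return the sum of nominal link speeds in Gbit/s (per direction)."""
    return sum(count * speed for count, speed in ports.values())


if __name__ == "__main__":
    print(total_ports(PORTS), "Ethernet interfaces")
    print(aggregate_line_rate_gbps(PORTS), "Gbit/s nominal aggregate line rate")
```

How much of that aggregate the SoC can actually forward depends on the workload and on the DPAA2 configuration, which the specifications do not quantify.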

The system runs U-Boot v2019.X+, Linux Kernel 5.0+ (mainline), OpenWrt and standard Linux distributions via EFI, and supports NXP DPAA2 (Data Path Acceleration Architecture Gen2) with all drivers provided. Documentation is available on the Traverse website, where you’ll also find the firmware images. The source code has been committed to GitLab, but there does not seem to be any active development there.
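Since the board runs a mainline kernel, one generic way to see which driver handles each Ethernet port is to read the standard Linux sysfs tree. The sketch below is a minimal example of that idea and is not taken from Traverse's documentation: the `interface_drivers` helper is hypothetical, and the driver names reported on a Ten64 are not stated in this article, so none are hard-coded.

```python
import os


def interface_drivers(sysfs_net="/sys/class/net"):
    """Map each network interface name to the kernel driver bound to it.

    Interfaces without a backing device (for example "lo") map to None.
    """
    drivers = {}
    for iface in sorted(os.listdir(sysfs_net)):
        # On Linux, <iface>/device/driver is a symlink to the bound driver.
        driver_link = os.path.join(sysfs_net, iface, "device", "driver")
        if os.path.islink(driver_link):
            drivers[iface] = os.path.basename(os.readlink(driver_link))
        else:
            drivers[iface] = None
    return drivers
```

Calling `interface_drivers()` on a running Linux system returns a dictionary such as interface name to driver name; the optional `sysfs_net` argument exists only so the function can be pointed at a different directory for testing.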

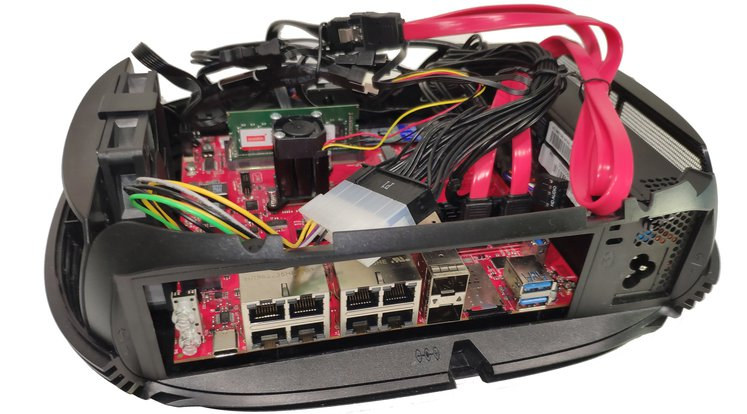

You may have noticed that one of the use cases listed in the introduction is NAS, but with no SATA ports on the board, it does not seem that well suited to the task. That’s why Traverse also offers a NAS DIY Kit with a heatsink and fan, an ATX power adapter, a four-port SATA controller on an M.2 card, and an I/O faceplate. That looks like overkill for a NAS, but some applications may benefit from a NAS with 10 Ethernet interfaces.

Ten64 provides an alternative to platforms like the MacchiatoBin mini-ITX networking board, the Turris Omnia open-source hardware router, or the ClearFog CX LX2K networking board, with a different set of features and price points.

Traverse Ten64 has just launched on Crowd Supply with a $60,000 funding target. The networking platform is offered for $579 and up with the mainboard installed in the metal enclosure with a fan, a 60 W power supply with regional power cord, a USB-C console cable, a recovery microSD card, a SIM eject tool, and a hex key. ECC RAM is available as an option, from $60 for 8GB to $240 for 32GB. The DIY NAS kit is listed for $70, but will only be useful if you purchase the $579 system. Shipping is free to the US, and $40 to the rest of the world. Backers should expect Ten64 to ship in March 2021.
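To make the pricing easier to compare, here is a small Python sketch that totals a pledge from the figures quoted above. It only knows the two ECC RAM price points mentioned in this article (8GB and 32GB), and it assumes the options simply add to the $579 base price with a single shipping charge per order; the `pledge_total` function and its parameters are hypothetical names used for illustration.

```python
BASE_SYSTEM_USD = 579                 # Ten64 in enclosure, "and up"
ECC_RAM_USD = {8: 60, 32: 240}        # only the two price points quoted
NAS_KIT_USD = 70
SHIPPING_USD = {"us": 0, "rest_of_world": 40}


def pledge_total(ecc_gb=None, nas_kit=False, region="us"):
    """Return the total in USD for a base system plus optional extras.

    Assumes options are purely additive, which the campaign page
    may or may not follow exactly.
    """
    total = BASE_SYSTEM_USD + SHIPPING_USD[region]
    if ecc_gb is not None:
        total += ECC_RAM_USD[ecc_gb]  # KeyError for capacities not quoted
    if nas_kit:
        total += NAS_KIT_USD
    return total


if __name__ == "__main__":
    print(pledge_total())                                   # base system, US
    print(pledge_total(32, nas_kit=True, region="rest_of_world"))
```

Under those assumptions, a fully loaded order outside the US (32GB ECC RAM plus the NAS kit) comes to $929, against $579 for the base system shipped to the US.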

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.
