There have been attempts to bring Arm processors to desktop PC’s in recent years with projects such as 96Boards Synquacer based on SocioNext SC2A11 24-core Cortex-A53 server processor or Clearfog-ITX workstation equipped with the more powerful NXP LX2160A 16-core Arm Cortex A72 networkingprocessor @ 2.2 GHz.

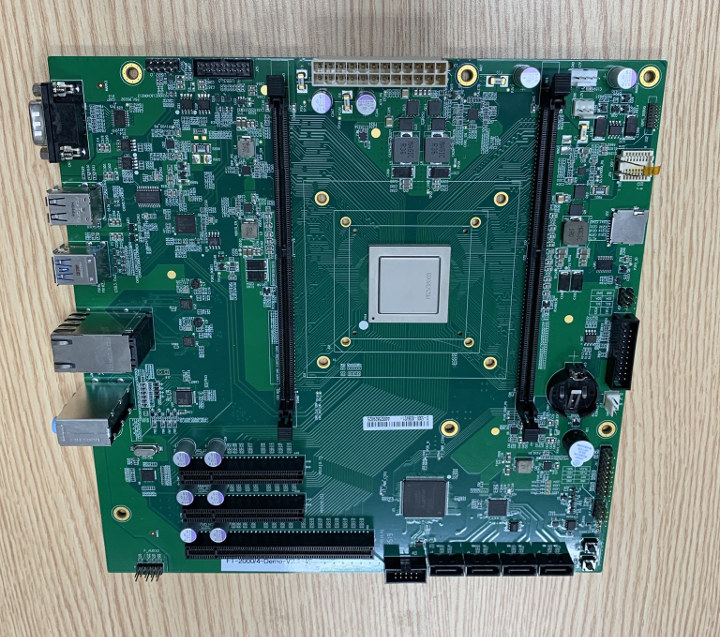

Those solutions were also based server and networking SoCs, but there may soon be another option specifically designed for Arm Desktop PCs as a photo of an Arm Micro-ATX motherboard just showed up on Twitter.

Here are the specifications we derive from the Tweet and the photo:

- SoC – Phytium FT2000/4 quad core custom Armv8 (FTC663) desktop processor @ 2.6 – 3.0 GHz with 4MB L2 Cache (2MB per two cores) and 4MB L3 Cache; 16nm process; 10W power consumption; 1144-pin FCBGA package (35×35 mm)

- System Memory – 2x SO-DIMM slot supporting 72-bit (ECC) DDR4-3200 memory

- Storage – 4x SATA 3.0 connectors; MicroSD card slot

- Video Output – N/A – Discrete PCIe graphics card required

- Audio – Combo jack with 3x 3.5mm port, audio header

- Networking – 2x Gigabit Ethernet ports

- USB – 2x USB 3.0 ports, 2x USB 2.0 ports, 1x USB 3.0 interface via header and 2x USB 2.0 interfaces via header

- Expansion – 1x PCIe x16 and 2x PCIe x8 slots, various pin headers

- Misc – RS-232 serial port, RTC with battery, buzzer, buttons

- Power Supply – ATX connector

- Dimensions – 244 x 244 mm (Micro-ATX form factor)

That’s about all we know about the “FT-2000/4 Demo” motherboard so far, but if you want to know more about the processor check out last month’s announcement (in Chinese), where you’ll also find documentation (still in Chinese) after scrolling a bit.

Basically Phytium FT-2000/4 is an Armv8 desktop processor which consumes up to 10 Watts (3.8W at 1 GHz), and achieves 61.1 and 62.5 points in respectively SPEC2006 integer and the floating-point benchmarks.

The company further explains the desktop PC version of “Galaxy Kirin” operating system (is that Ubuntu Kylin?) has been ported to the processor, and other companies have also been involved in software development. Manufactures such as Lenovo, Baolongda, Lianda, Chuangzhicheng, EVOC, Hanwei and others are developing FT-2000/4-based desktops, notebooks, and all-in-one PC that will launch in Q4 2019 onwards. So we should probably watch that space.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.

Support CNX Software! Donate via cryptocurrencies, become a Patron on Patreon, or purchase goods on Amazon or Aliexpress