A few months ago, we wrote that SolidRun was working on the ClearFog ITX workstation, featuring an NXP LX2160A 16-core Arm Cortex-A72 processor, support for up to 64GB RAM, and a mini-ITX motherboard, which would make it an ideal Arm developer platform.

Since then, the company has split the project into two parts: the ClearFog CX LX2K mini-ITX board will focus on networking applications, while the HoneyComb LX2K has had some of its networking features stripped to keep costs in check for developers planning to use the mini-ITX board as an Arm workstation. Both boards use the exact same LX2160A COM Express module.

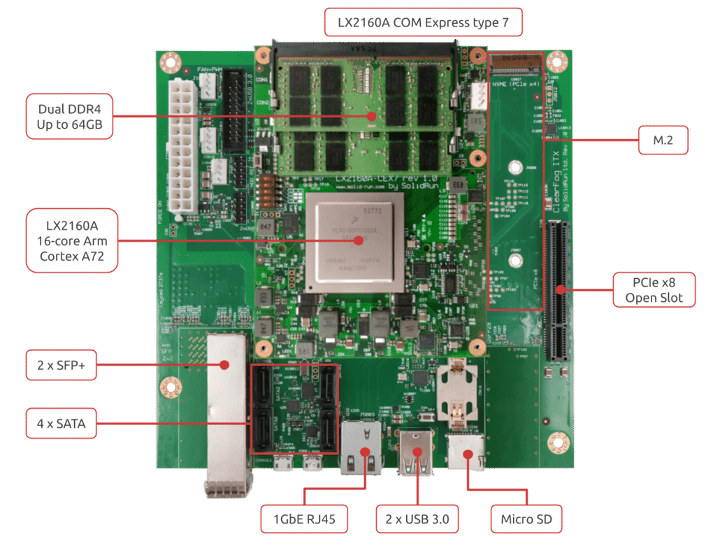

HoneyComb LX2K specifications:

- COM Module – CEx7 LX2160A COM Express module with NXP LX2160A 16-core Arm Cortex-A72 processor @ 2.2 GHz (2.0 GHz on the pre-production developer board)

- System Memory – Up to 64GB DDR4 dual-channel memory at up to 3200 MT/s via SO-DIMM sockets on the COM module (the pre-production board will work at up to 2900 MT/s)

- Storage
  - M.2 2240/2280/22110 SSD support
  - MicroSD slot
  - 64GB eMMC flash
  - 4x SATA 3.0 ports

- Networking
  - ~~1x QSFP28 100Gbps cage (100Gbps/4x25Gbps/4x10Gbps)~~
  - 4x SFP+ ports (10GbE each)
  - 1x Gigabit Ethernet copper (RJ45)
  - M.2 2230 with SIM card

- USB – 3x USB 3.0, 3x USB 2.0

- Expansion – 1x PCIe x8 Gen 4.0 socket (Note: the pre-production board will be limited to PCIe Gen 3.0)

- Debugging – MicroUSB for debugging (UART over USB)

- Misc – USB to STM32 for remote management

- Power Supply – ATX standard

- Dimensions – 170 x 170mm (mini-ITX form factor) with support for a metal enclosure

The pre-production developer board is fitted with NXP LX2160A pre-production silicon, which explains some of the limitations. The metal enclosure won’t be available for the pre-production board, and software features will be limited by the lack of SBSA compliance, UEFI, and mainline Linux support: the board will only support Linux 4.14.x. You may want to visit the developer resources page for more technical information.

With those details out of the way, you can pre-order the pre-production board right now for $550 without RAM, with shipment expected by August 2019. It is mostly suitable for developers, as the software may not be fully ready. The final HoneyComb LX2K Arm workstation board will become available in November 2019 for $750. If you’d rather have the networking board with 100GbE, you can pre-order the ClearFog CX LX2K for $980 without RAM, with delivery scheduled for September 2019.

If you are interested in benchmarks, Jon Nettleton of SolidRun shared results for OpenBenchmarking C-Ray, 7-Zip, and sbc-bench, among others.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.
