Firefly ROC-RK3576-PC is a low-power, low-profile SBC built around the Rockchip RK3576 octa-core Cortex-A72/A53 SoC, which we also find in the Forlinx FET3576-C, the Banana Pi BPI-M5 Pro, and the Mekotronics R57 Mini PC. In terms of power and performance, this SoC falls between the Rockchip RK3588 and RK3399 SoCs and can be used for AIoT applications thanks to its 6 TOPS NPU.

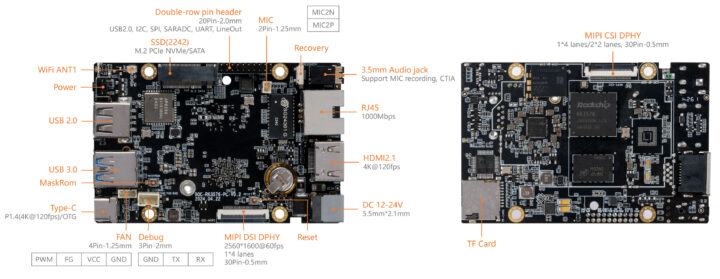

Termed a “mini computer” by Firefly, this SBC supports up to 8GB LPDDR4/LPDDR4X memory and 256GB of eMMC storage. Additionally, it offers Gigabit Ethernet, WiFi 5, and Bluetooth 5.0 for connectivity. An M.2 2242 PCIe/SATA socket and a microSD card slot can be used for storage, and the board also offers HDMI and MIPI DSI display interfaces, two MIPI CSI camera interfaces, a few USB ports, and a 40-pin GPIO header.

Firefly ROC-RK3576-PC specifications:

- SoC – Rockchip RK3576

- CPU

- 4x Cortex-A72 cores at 2.2GHz, 4x Cortex-A53 cores at 1.8GHz

- Arm Cortex-M0 MCU at 400MHz

- GPU – Arm Mali-G52 MC3 GPU clocked at 1GHz with support for OpenGL ES 1.1, 2.0, and 3.2, OpenCL up to 2.0, and Vulkan 1.1; embedded 2D acceleration

- NPU – 6 TOPS (INT8) AI accelerator with support for INT4/INT8/INT16/BF16/TF32 mixed operations.

- VPU

- Video Decoder – H.264, H.265, VP9, AV1, and AVS2 up to 8K at 30fps or 4K at 120fps

- Video Encoder – H.264 and H.265 up to 4K at 60fps; (M)JPEG encoder/decoder up to 4K at 60fps

- System Memory – 4GB or 8GB 32-bit LPDDR4/LPDDR4X

- Storage

- 16GB to 256GB eMMC flash options

- MicroSD card slot

- M.2 2242 socket for PCIe NVMe/SATA SSD

- Footprint for UFS 2.0 storage

- Video Output

- HDMI 2.0 port up to 4Kp120

- MIPI DSI connector up to 2Kp60

- DisplayPort 1.4 via USB-C up to 4Kp120

- Audio

- 3.5mm audio jack (supports microphone recording, CTIA standard)

- Line OUT

- Camera I/F – 1x MIPI CSI D-PHY connector (30-pin, 0.5mm; 1x 4 lanes or 2x 2 lanes)

- Networking

- Low-profile Gigabit Ethernet RJ45 port with Motorcomm YT8531

- WiFi 5 and Bluetooth 5.2 via AMPAK AP6256

- USB – 1x USB 3.0 port, 1x USB 2.0 port, 1x USB Type-C port

- Expansion

- 40-pin GPIO header

- M.2 socket for PCIe

- Misc

- External watchdog

- 4-pin fan connector

- 1x Debug port

- I2C, SPI, USART

- SARADC

- Power

- Supply voltage – 12V DC via 5.5 x 2.1mm jack (wide-voltage input from 12V to 24V supported)

- Power consumption – Normal: 1.2W (12V/100mA), Max: 6W (12V/500mA), Min: 0.096W (12V/8mA)

- Dimensions – 93.00 x 60.15 x 12.49mm

- Weight – 50 grams

- Environment

- Temperature range – -20°C to 60°C

- Humidity – 10% to 90% RH (non-condensing)
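The power consumption figures in the list are simply the supply voltage multiplied by the current draw (P = V × I). As a quick sanity check, here is a minimal Python sketch that recomputes them from the listed 12V supply and current values; the names `SUPPLY_VOLTS`, `CURRENT_MA`, and `power_watts` are made up for illustration.

```python
# Supply voltage and current draws as listed in the specifications.
SUPPLY_VOLTS = 12.0
CURRENT_MA = {"normal": 100, "max": 500, "min": 8}


def power_watts(volts: float, milliamps: float) -> float:
    """Return DC power in watts using P = V * I (current given in mA)."""
    return volts * milliamps / 1000.0


# Power per operating mode, keyed the same way as CURRENT_MA.
power = {mode: power_watts(SUPPLY_VOLTS, ma) for mode, ma in CURRENT_MA.items()}

for mode, watts in power.items():
    print(f"{mode}: {watts:.3f} W")
```

The results match the 1.2W, 6W, and 0.096W values quoted by Firefly, so the three figures are at least internally consistent with a 12V input.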

The Firefly ROC-RK3576-PC SBC supports Android 14 and Ubuntu, along with Buildroot and Qt through official Rockchip support. Third-party Debian images may become available soon. More information about the SBC can be found on the product page and the Wiki, but at the time of writing, the latter has no information available.

Since Firefly positions the SBC as designed for AI workloads, it will support large language models like Gemma-2B, Llama2-7B, ChatGLM3-6B, and Qwen1.5-1.8B, which are often used for language processing and understanding. It will also support older neural network architectures like CNN, RNN, and LSTM for added flexibility. Additionally, you can use popular AI development frameworks like TensorFlow, PyTorch, and others, and even create custom functions for your needs.
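To get a feel for why the NPU's low-precision formats matter on a board with 4GB or 8GB of RAM, here is a rough Python sketch estimating how much memory the weights alone would take at different bit widths. It relies on several assumptions: parameter counts are the nominal figures read off the model names (real counts differ somewhat), the estimate ignores activations, the KV cache, and the operating system, and Firefly does not state which quantization its runtime actually uses. All names in the snippet are illustrative.

```python
# Nominal parameter counts in billions, taken from the model names (approximate).
NOMINAL_PARAMS_B = {
    "Gemma-2B": 2.0,
    "Llama2-7B": 7.0,
    "ChatGLM3-6B": 6.0,
    "Qwen1.5-1.8B": 1.8,
}

# Bit widths taken from the integer formats listed for the NPU.
BITS = {"INT4": 4, "INT8": 8, "INT16": 16}


def weight_gb(params_billion: float, bits: int) -> float:
    """Approximate weight storage in GB (10^9 bytes), weights only."""
    return params_billion * bits / 8


def fits(params_billion: float, bits: int, ram_gb: float) -> bool:
    """True if the weights alone are smaller than the given RAM size."""
    return weight_gb(params_billion, bits) < ram_gb


for name, params in NOMINAL_PARAMS_B.items():
    sizes = ", ".join(f"{fmt}: {weight_gb(params, b):.2f} GB" for fmt, b in BITS.items())
    print(f"{name}: {sizes}")
```

Under these assumptions, a 7B-parameter model needs about 14GB at 16 bits per weight but only about 3.5GB at 4 bits, which suggests the larger models would only be practical on the 8GB variant when heavily quantized.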

The ROC-RK3576-PC SBC is priced at around $159 for the 4GB+32GB variant and $189 for the 8GB+64GB variant on the official Firefly store, but you’ll also find both variants on AliExpress for $199 to $229 including shipping.

Debashis Das is a technical content writer and embedded engineer with over five years of experience in the industry. With expertise in Embedded C, PCB Design, and SEO optimization, he effectively blends difficult technical topics with clear communication.
