We previously tested the Edge Impulse machine learning platform, showing how to train and deploy a model with IMU data from the XIAO BLE Sense board relatively easily. Since then, the company has announced support for the NVIDIA TAO Toolkit in Edge Impulse, and it has now added the latest GPT-4o LLM to the ML platform to help users quickly train TinyML models that can run on microcontroller boards.

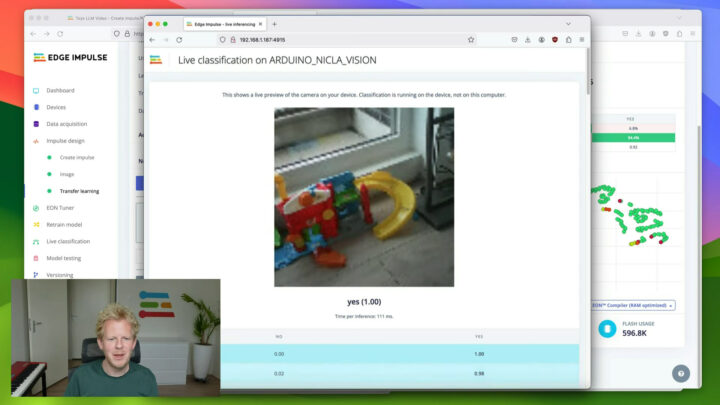

What’s interesting is how AI tools from various companies, namely NVIDIA (TAO Toolkit) and OpenAI (GPT-4o LLM), are leveraged in Edge Impulse to quickly create a low-end ML model simply by filming a video. Jan Jongboom, CTO and co-founder at Edge Impulse, demonstrated the solution by shooting a video of his kids’ toys and loading it in Edge Impulse to create an “is there a toy?” model that runs on the Arduino Nicla Vision at about 10 FPS.

Another way to look at it (hence the title of the video embedded below) is that they’ve shrunk the GPT-4o LLM, with over 175 billion parameters, into a much smaller specialized model with only 800K parameters, small enough to run on MCU hardware.
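For a sense of scale, the two figures can be compared directly. The short Python sketch below computes the size ratio and a rough weight-storage estimate for the small model. The 175-billion figure is the one quoted above (OpenAI has not published GPT-4o's parameter count), and the 1-byte-per-weight int8 case is an assumption about typical MCU deployment, not something stated in the demo.

```python
# Rough scale comparison using the figures quoted in the article.
LLM_PARAMS = 175_000_000_000  # "over 175 billion" (quoted figure, a lower bound)
TINY_PARAMS = 800_000         # MobileNet-based model from the demo


def weight_storage_bytes(params: int, bytes_per_weight: int) -> int:
    """Storage for the weights alone (ignores activations and runtime overhead)."""
    return params * bytes_per_weight


ratio = LLM_PARAMS / TINY_PARAMS
print(f"Size ratio: ~{ratio:,.0f}x")  # Size ratio: ~218,750x
print(f"float32 weights: {weight_storage_bytes(TINY_PARAMS, 4) / 1e6:.1f} MB")  # 3.2 MB
# int8 quantization is an assumption here, not a detail from the demo
print(f"int8 weights: {weight_storage_bytes(TINY_PARAMS, 1) / 1e3:.0f} kB")  # 800 kB
```

Since 175 billion is only a lower bound, the real ratio would be even larger.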

There are five basic steps to achieve this:

- Data Collection: Shooting a video.

- Data Processing: Uploading the video to Edge Impulse, which splits it into individual, unlabeled frames.

- Labeling with GPT-4o: Using the new “Label image data using GPT-4o” transformation block in Edge Impulse, users can ask GPT-4o to label the images automatically, discarding any blurry or uncertain images to produce a clean dataset. The question was “is there any toy?”, and the only allowed answers were “yes” or “no”.

- Model Training: Once the images are labeled (there are about 500 labeled items in the demo), NVIDIA TAO is used to train a small MobileNet model on them. The model in the demo ended up with about 800,000 parameters.

- Deployment: The model can then be deployed to the hardware from the web interface. In this case, an Arduino Nicla Vision could accurately detect toys on-device in real time (10 FPS) without relying on cloud services. The model was also tested in a web browser at 50 FPS and on an iPhone.
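The labeling step essentially asks a yes/no question for every frame and keeps only clean answers. The article does not describe how Edge Impulse's transformation block works internally, so the Python below is a hypothetical sketch of that filtering logic only: `ask_model` stands in for whatever call actually queries GPT-4o, and treating any reply other than “yes” or “no” as uncertain is an assumption.

```python
from typing import Callable, Dict, Iterable, Optional

VALID_LABELS = {"yes", "no"}


def normalize_answer(raw: str) -> Optional[str]:
    """Map a free-text reply to 'yes'/'no', or None if it is unusable."""
    answer = raw.strip().lower().rstrip(".!")
    return answer if answer in VALID_LABELS else None


def label_frames(
    frames: Iterable[str],
    ask_model: Callable[[str], str],  # hypothetical stand-in for the GPT-4o query
) -> Dict[str, str]:
    """Label each frame, dropping frames whose reply is not a clean yes/no."""
    labels: Dict[str, str] = {}
    for frame in frames:
        label = normalize_answer(ask_model(frame))
        if label is not None:  # blurry/uncertain frames are discarded
            labels[frame] = label
    return labels


# Usage with canned replies in place of a real model call
fake_replies = {
    "frame_001.jpg": "Yes.",
    "frame_002.jpg": "no",
    "frame_003.jpg": "Unsure, the image is blurry",
}
dataset = label_frames(fake_replies, lambda f: fake_replies[f])
print(dataset)  # {'frame_001.jpg': 'yes', 'frame_002.jpg': 'no'}
```

Constraining the answer to two values keeps the labels directly usable as classes for the training step, and discarding everything else trades a smaller dataset for cleaner labels.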

You can watch the video below with Jan going through the main steps in about 14 minutes.

While some features of Edge Impulse are free to use, the GPT-4o labeling block and TAO transfer learning models are only available to Edge Impulse enterprise customers. If you have a company email address, a 2-week free trial is available. More details can be found in the announcement.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager and starting to write daily news and reviews full-time later in 2011.
