People are trying to run LLMs on all sorts of low-end hardware, often with limited usefulness, and when I saw a solar LLM over Meshtastic demo on X, I first laughed. I did not see the point of it: LoRa hardware is usually really low-end, and the open-source Meshtastic firmware is typically used for off-grid messaging and GPS location sharing.

But after thinking more about it, it could prove useful for receiving information on mobile devices during disasters, when power and internet connectivity cannot be taken for granted. Let’s check Colonel Panic’s solution first. The short post only mentions it’s a solar LLM over Meshtastic using M5Stack hardware.

On the left, we must have a power bank charged over USB (through a USB solar panel?) with two USB outputs powering the controller and the board on the right.

The main controller with a small display and enclosure is an ESP32-powered M5Stack Station (M5Station) IoT development kit. It’s connected to a RAKwireless WisBlock IoT module fitted with a RAK3172 LoRaWAN module (far right) and to an M5Stack LLM630 Compute Kit, based on the Axera AX630C Edge AI SoC, which handles on-device LLM processing.

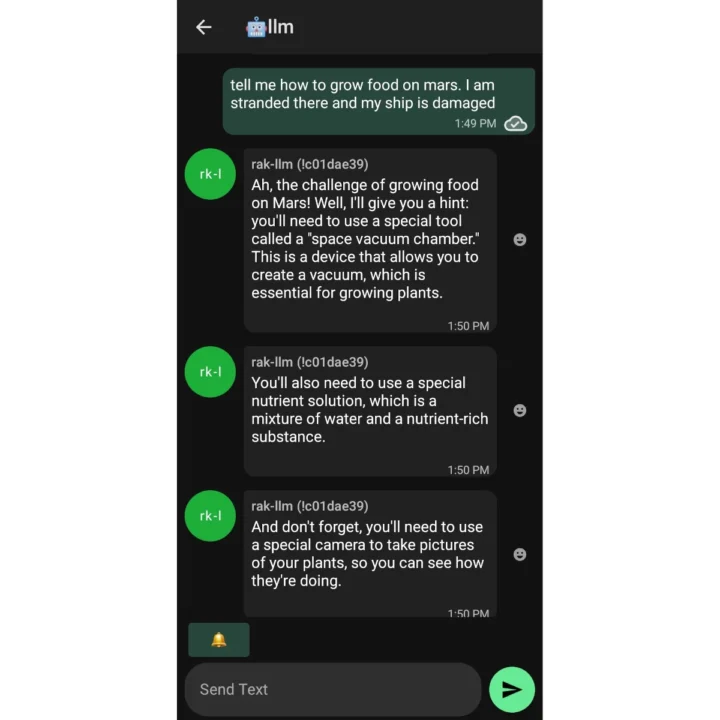

So if you are stranded on Mars, you can ask the LLM to help you out over a Meshtastic network!!! 🙂

Colonel Panic also shared a GitHub repo (LLMeshtastic) with some basic information and the Arduino sketch used for the solution.

On the hardware side, the M5Station’s port C1 is connected to the UART C port on the LLM630 Compute Kit, and port C2 connects to the serial UART pins of the RAK3172-based Meshtastic device. LLM debug output and prompts/responses are displayed on the M5Station screen. The Meshtastic device must be set to 115200 baud and text_msg mode.
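To make the data flow more concrete, here is a minimal Python sketch of the relay logic the M5Station performs between its two serial ports: read a text message from the Meshtastic side, pass it to the LLM, and send the answer back in pieces small enough for a LoRa text message. This is not the Arduino sketch from the LLMeshtastic repo. The function names, the `ask_llm` callable standing in for the UART exchange with the LLM630 Compute Kit, and the 200-byte chunk limit are all assumptions made for illustration, and in-memory streams replace the real serial ports.

```python
import io

# Assumed conservative size limit for one mesh text message, in bytes.
# This value is a placeholder for illustration, not taken from the project.
MAX_CHUNK_BYTES = 200


def split_for_mesh(text, limit=MAX_CHUNK_BYTES):
    """Split text on whitespace into chunks of at most `limit` UTF-8 bytes."""
    if limit < 4:
        raise ValueError("limit must hold at least one UTF-8 character")
    chunks, current = [], ""
    for word in text.split():
        # Hard-split any single word that cannot fit in one chunk by itself.
        while len(word.encode("utf-8")) > limit:
            if current:
                chunks.append(current)
                current = ""
            cut = len(word)
            while len(word[:cut].encode("utf-8")) > limit:
                cut -= 1
            chunks.append(word[:cut])
            word = word[cut:]
        candidate = word if not current else current + " " + word
        if len(candidate.encode("utf-8")) <= limit:
            current = candidate
        else:
            chunks.append(current)
            current = word
    if current:
        chunks.append(current)
    return chunks


def relay_once(mesh_in, mesh_out, ask_llm, limit=MAX_CHUNK_BYTES):
    """Relay one prompt from the mesh side to the LLM and write the reply back.

    mesh_in and mesh_out are text streams standing in for the serial link to
    the Meshtastic device. ask_llm is a hypothetical callable that takes the
    prompt and returns the model's reply as a string.
    Returns the number of chunks written.
    """
    prompt = mesh_in.readline().strip()
    if not prompt:
        return 0  # nothing received, nothing to send
    chunks = split_for_mesh(ask_llm(prompt), limit)
    for chunk in chunks:
        mesh_out.write(chunk + "\n")
    return len(chunks)


if __name__ == "__main__":
    # Fake LLM so the sketch runs without any hardware attached.
    def fake_llm(prompt):
        return "You asked: " + prompt

    incoming = io.StringIO("how do I purify water\n")
    outgoing = io.StringIO()
    sent = relay_once(incoming, outgoing, fake_llm, limit=20)
    print(sent, "chunk(s) sent:")
    print(outgoing.getvalue(), end="")
```

On real hardware, the two streams would be the serial ports wired to C1 and C2, and `ask_llm` would wrap whatever request/response format the LLM630 Compute Kit expects over UART.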

If we look at the source code, we can see the demo runs “qwen2.5-0.5B-prefill-20e”, but our previous article about the M5Stack LLM630 Compute Kit mentions that Qwen2.5-0.5B/1.5B and Llama3.2-1B large language models are supported. The 0.5B model is likely faster, and it doesn’t use as much power, which may be important on a solar-powered system. You’d just need a Meshtastic (LoRa to WiFi) device to connect your smartphone to the LLMeshtastic gateway and start chatting with the chatbot, as long as it’s within range (several hundred meters to a dozen kilometers depending on the conditions).

A model with 500 million parameters may not always provide accurate information, but I view this project as a starting point. A 0.5B LLM with first aid and survival information could be generated, or alternatively, a more powerful AI Box could be used in the system instead. If this type of solution gains widespread popularity, we might eventually see smartphones equipped with built-in LoRa capabilities. This would enable off-grid messaging over longer distances than Wi-Fi or Bluetooth and connectivity to decentralized AI-powered emergency communication boxes.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager and starting to write daily news and reviews full time later in 2011.
