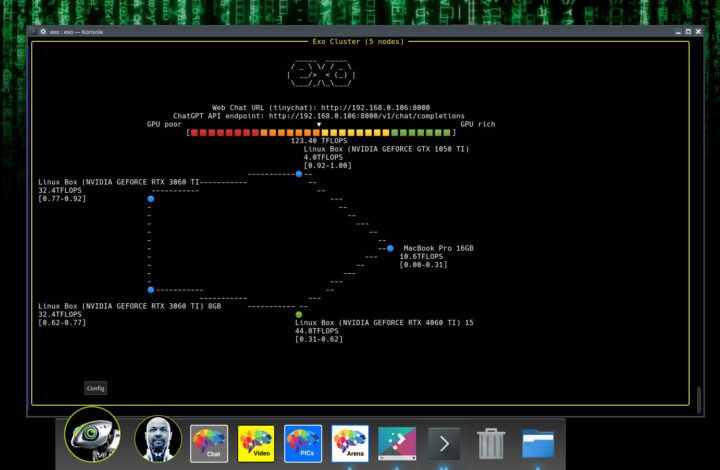

You’d typically need hardware with plenty of memory, high memory bandwidth, and multiple GPUs to run the latest large language models (LLMs), such as DeepSeek R1 with its 671 billion parameters. Such hardware is unaffordable or simply unavailable to most people, and the Exo software works around that as a distributed LLM solution that runs on a cluster of computers (with or without NVIDIA GPUs), smartphones, and/or single board computers like Raspberry Pi boards.

In some ways, exo works like distcc when compiling C programs over a build farm, but targets AI workloads such as LLMs instead.

Key features of Exo software:

- Support for LLaMA (MLX and tinygrad), Mistral, LLaVA, Qwen, and DeepSeek.

- Dynamic Model Partitioning – The solution splits up models based on the current network topology and device resources available in order to run larger models than you would be able to on any single device. That’s what I call a “distributed LLM solution”. Several partitioning strategies are available and the default is “ring memory weighted partitioning” where each device runs a number of model layers proportional to the memory of the device.

- Automatic Device Discovery / Zero manual configuration – exo will automatically discover other devices using the best method available.

- ChatGPT-compatible API. It enables a one-line change in your application to run models on your own hardware using exo.

- Device Equality – Exo does not use a master-worker architecture and instead connects in a peer-to-peer (P2P) fashion. So as long as a device is connected somewhere in the network, it can be used to run models.
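To make the default “ring memory weighted partitioning” strategy from the list above more concrete, here is a minimal Python sketch of the idea: each device gets a contiguous block of layers sized in proportion to its memory. This is not exo’s actual implementation; the `Device` class, the `partition_layers` function, the device names, and the 32-layer count are all assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Device:
    name: str          # hypothetical device label
    memory_gb: float   # memory available for model weights


def partition_layers(devices, n_layers):
    """Give each device a contiguous [start, end) layer range
    whose size is proportional to the device's share of total memory."""
    total = sum(d.memory_gb for d in devices)
    # Largest devices first; the sort is stable, so ties keep their input order.
    ordered = sorted(devices, key=lambda d: d.memory_gb, reverse=True)
    ranges = {}
    start = 0
    cumulative = 0.0
    for i, dev in enumerate(ordered):
        cumulative += dev.memory_gb
        if i == len(ordered) - 1:
            end = n_layers  # the last device takes whatever is left
        else:
            end = round(n_layers * cumulative / total)
        ranges[dev.name] = (start, end)
        start = end
    return ranges


# Illustrative cluster: one 8GB machine and two 4GB boards, 32-layer model.
devices = [Device("mac-mini", 8), Device("pi-400-a", 4), Device("pi-400-b", 4)]
shards = partition_layers(devices, n_layers=32)
for name, (start, end) in shards.items():
    print(f"{name}: layers {start} to {end - 1}")
```

With these numbers, the 8GB machine holds layers 0 to 15 and each 4GB board holds eight layers, so every request passes through all three devices in turn, hence the “ring”.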

OS support is not clearly documented, and I don’t see any mention of Windows. Instead, instructions are provided for Linux, macOS, Android, and iOS. The only other software requirement is Python>=3.12.0. Linux systems with an NVIDIA GPU also need the NVIDIA driver, the CUDA toolkit, and the cuDNN library.

On the hardware front, the important thing is to have enough memory across all devices to fit the whole model. For example, since running Llama 3.1 8B (FP16) requires 16GB of RAM, any of the following configurations would work:

- 2x 8GB M3 MacBook Airs or

- 1x 16GB NVIDIA RTX 4070 Ti Laptop or

- 2x Raspberry Pi 400 with 4GB of RAM each (running on CPU) and 1x 8GB Mac Mini, so heterogeneous architectures can work together.
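The 16GB figure follows from 8 billion parameters at 2 bytes each (FP16). A quick Python sketch of that check against the three configurations above is shown below. It only counts model weights and ignores the OS, activations, and context cache, so treat it as a rough lower bound; the function names are made up for this example.

```python
def model_memory_gb(params_billion, bytes_per_param=2):
    """Weights only: 1e9 parameters x bytes per parameter = GB (decimal)."""
    return params_billion * bytes_per_param


def cluster_fits(model_gb, device_memory_gb):
    """True if the combined memory of all devices can hold the weights."""
    return sum(device_memory_gb) >= model_gb


needed = model_memory_gb(8)  # Llama 3.1 8B in FP16

# Memory per device (GB) for the configurations listed in the article.
configs = {
    "2x 8GB M3 MacBook Air": [8, 8],
    "1x 16GB RTX 4070 Ti laptop": [16],
    "2x 4GB Raspberry Pi 400 + 1x 8GB Mac Mini": [4, 4, 8],
}

for label, memory in configs.items():
    verdict = "fits" if cluster_fits(needed, memory) else "too small"
    print(f"{label}: {sum(memory)}GB total, {verdict}")
```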

If I understand correctly, it would be possible to run DeepSeek R1 (full 671B, FP16) on a cluster of Raspberry Pi boards with 8GB or 16GB of RAM, as long as there’s a combined ~1.3TB of RAM, or about 170 Raspberry Pi 5 boards with 8GB of RAM. Performance would be horrendous, and likely better expressed in tokens per hour, but it should work in theory. The developers explain that “adding less capable devices will slow down individual inference latency but will increase the overall throughput of the cluster.”
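The board count above is a back-of-the-envelope calculation, which can be reproduced in a few lines of Python. As before, this counts FP16 weights only and assumes every byte of each board’s RAM is usable, which would not hold in practice; `devices_needed` is a hypothetical helper.

```python
import math


def devices_needed(params_billion, bytes_per_param, device_gb):
    """Minimum number of identical devices whose combined RAM holds the weights."""
    model_gb = params_billion * bytes_per_param  # 671 x 2 = 1342GB, i.e. ~1.3TB
    return math.ceil(model_gb / device_gb)


pi5_8gb = devices_needed(671, 2, 8)
pi5_16gb = devices_needed(671, 2, 16)
print(f"8GB boards: {pi5_8gb}, 16GB boards: {pi5_16gb}")
```

That gives 168 boards with 8GB of RAM (in line with the “about 170” estimate) or 84 boards with 16GB.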

Exo needs to be installed from source on all machines as follows:

    git clone https://github.com/exo-explore/exo.git
    cd exo
    source install.sh

Once done, you only need to run the following command on all machines:

    exo

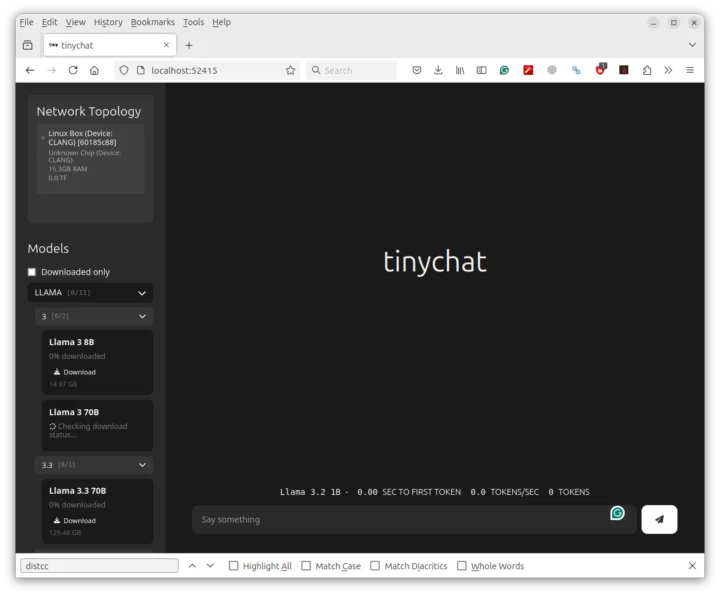

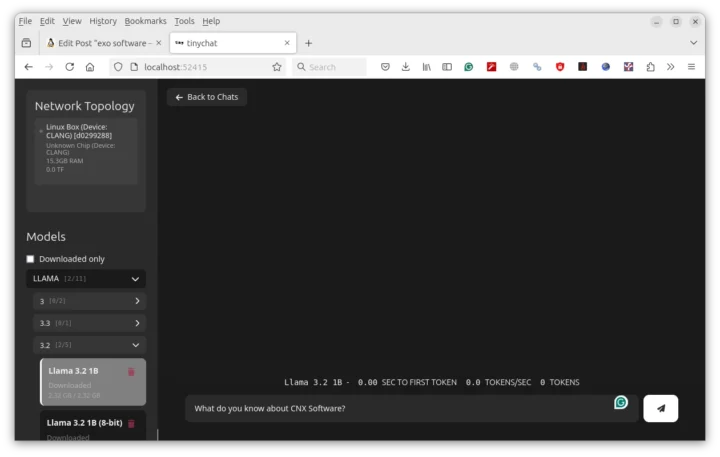

That’s it! A ChatGPT-like WebUI will be started on http://localhost:52415. This is what it looks like on my machine (I only installed it on one Ubuntu 24.04 machine).

You can download various Llama models directly from the interface. It first failed when I tried due to an “llvmlite” error, but I fixed it after installing the missing library:

    source .venv/bin/activate
    pip install llvmlite

I installed two Llama 1B models from the web interface, but they still use a lot of memory (all 16GB), and my laptop typically hangs when all memory is used… Even after rebooting the laptop and running only Exo and the web interface, it still hangs. Note that exo is also experimental software, so that might be why.

You’ll find the source code, extra instructions for macOS, and developer documentation (API) on GitHub.
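As for the ChatGPT-compatible API listed in the key features, a request from Python could look like the sketch below, using only the standard library. I’m assuming the endpoint lives at `/v1/chat/completions` on the same port as the WebUI and that a model named `llama-3.2-1b` is available; check both against the documentation on GitHub before relying on them. `build_chat_request` and `ask` are hypothetical helper names.

```python
import json
import urllib.request

# Assumption: OpenAI-style chat endpoint served on the WebUI port.
EXO_URL = "http://localhost:52415/v1/chat/completions"


def build_chat_request(prompt, model="llama-3.2-1b", url=EXO_URL):
    """Build an OpenAI-style chat completion request (model name is an assumption)."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.7,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, **kwargs):
    """Send the prompt to a running exo node and return the reply text.
    Assumes an OpenAI-style response body (choices -> message -> content)."""
    with urllib.request.urlopen(build_chat_request(prompt, **kwargs), timeout=300) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With exo running on the machine, `print(ask("What is a Raspberry Pi?"))` would send the prompt to the cluster. An application already written against the ChatGPT API would only need its base URL changed, which is the “one-line change” mentioned earlier.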

Thanks to Onebir for the tip

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.
