The DeepSeek R1 model was released a few weeks ago, and Brian Roemmele claimed to run it locally on a Raspberry Pi at 200 tokens per second, promising to release a Raspberry Pi image "as soon as all tests are complete". He further explained that the Raspberry Pi 5 had a few HATs, including a Hailo AI accelerator, but that's about all the information we have so far, and I assume he used the distilled model with 1.5 billion parameters.

Jeff Geerling ran his own tests with DeepSeek-R1 (Qwen 14B), but only on the CPU, at 1.4 tokens/s, and he later added an AMD W7700 graphics card to the setup for better performance. Other people made TinyZero models based on DeepSeek R1 optimized for the Raspberry Pi, but those are specific to countdown and multiplication tasks and still run on the CPU only. So I was happy to finally see Radxa release instructions to run DeepSeek R1 (Qwen2 1.5B) on an NPU, more precisely the 6 TOPS NPU of the Rockchip RK3588 SoC, using the RKLLM toolkit.

The full instructions explain how to compile the model yourself, but if you only want to try it quickly, Radxa offers a pre-compiled RKLLM model on ModelScope, which you can get with:

```shell
git clone https://www.modelscope.cn/radxa/DeepSeek-R1-Distill-Qwen-1.5B_RKLLM.git
```

The repository contains five files:

- configuration.json – Configuration file

- librkllmrt.so – RKLLM library

- llm_demo – Demo program

- DeepSeek-R1-Distill-Qwen-1.5B.rkllm (1.9GB) – DeepSeek R1 Qwen 1.5B compiled with RKLLM

- README.md
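Because the .rkllm model is about 1.9GB, it is worth checking that the clone is complete before running anything. The sketch below is a hypothetical helper (`check_rkllm_clone` is not part of Radxa's repository) that looks for the five files listed above and, assuming the model is stored with Git LFS (not confirmed by Radxa), flags the case where only a small LFS pointer file was downloaded instead of the model itself.

```shell
#!/bin/sh
# Hypothetical helper: sanity-check a clone of Radxa's RKLLM repository.
# Assumption: the model is stored with Git LFS, so cloning without git-lfs
# installed would leave a small text pointer instead of the ~1.9GB file.
check_rkllm_clone() {
  dir=${1:-.}
  model="$dir/DeepSeek-R1-Distill-Qwen-1.5B.rkllm"
  status=0

  # Every file listed in the repository should be present.
  for f in configuration.json librkllmrt.so llm_demo \
           DeepSeek-R1-Distill-Qwen-1.5B.rkllm README.md; do
    if [ ! -e "$dir/$f" ]; then
      echo "missing: $f"
      status=1
    fi
  done

  # A Git LFS pointer is plain text whose first line names the LFS spec.
  if [ -f "$model" ] && head -n 1 "$model" | grep -q '^version https://git-lfs'; then
    echo "LFS pointer only: run 'git lfs pull' in $dir"
    status=1
  fi

  return "$status"
}
```

For example, run `check_rkllm_clone DeepSeek-R1-Distill-Qwen-1.5B_RKLLM` right after cloning. If it reports an LFS pointer, installing git-lfs and running `git lfs pull` inside the repository should fetch the actual model file.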

Run the test with:

```shell
export RKLLM_LOG_LEVEL=1
./llm_demo DeepSeek-R1-Distill-Qwen-1.5B.rkllm 10000 10000
```
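If `llm_demo` refuses to start, two common causes are a missing executable bit and the dynamic loader not finding `librkllmrt.so` in the current directory. The hypothetical `run_deepseek_demo` function below wraps Radxa's commands with both workarounds. It assumes, based on Rockchip's rknn-llm demo rather than on Radxa's instructions, that the two numeric arguments are `max_new_tokens` and `max_context_len`.

```shell
#!/bin/sh
# Hypothetical wrapper around Radxa's llm_demo commands.
# Assumptions: arguments 2 and 3 of llm_demo are max_new_tokens and
# max_context_len, and librkllmrt.so sits next to llm_demo rather than
# in a system library directory.
# The body runs in a subshell ( ... ) so the caller's directory is unchanged.
run_deepseek_demo() (
  dir=${1:-.}
  max_new_tokens=${2:-10000}
  max_context_len=${3:-10000}
  cd "$dir" || exit 1
  # In case the binary was committed or copied without the executable bit.
  chmod +x ./llm_demo
  # Prepend the current directory to the loader search path so librkllmrt.so
  # is found, and keep RKLLM_LOG_LEVEL=1 as in Radxa's instructions.
  RKLLM_LOG_LEVEL=1 LD_LIBRARY_PATH=".${LD_LIBRARY_PATH:+:$LD_LIBRARY_PATH}" \
    ./llm_demo DeepSeek-R1-Distill-Qwen-1.5B.rkllm "$max_new_tokens" "$max_context_len"
)
```

For example, `run_deepseek_demo DeepSeek-R1-Distill-Qwen-1.5B_RKLLM 2048 4096` would start the demo with smaller limits than Radxa's 10000/10000, which may help if memory is tight on boards with less RAM.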

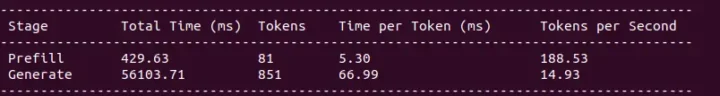

Radxa says the RK3588 achieves 14.93 tokens per second on the math problem:

Solve the equations x+y=12, 2x+4y=34, find the values of x and y
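To check the model's answer, the system can be solved by substitution. This is ordinary algebra added for reference, not part of Radxa's output:

```latex
\begin{aligned}
x + y &= 12 \;\Rightarrow\; x = 12 - y\\
2(12 - y) + 4y &= 34 \;\Rightarrow\; 24 + 2y = 34 \;\Rightarrow\; y = 5\\
x &= 12 - 5 = 7
\end{aligned}
```

Plugging back in, 7 + 5 = 12 and 2(7) + 4(5) = 34, so a correct response should end with x = 7 and y = 5.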

The demo was tested on the Radxa ROCK 5B. I haven't tried it myself since I don't have the board with me right now… It should also work on other Rockchip RK3588/RK3588S boards, and even on Rockchip RK3576 hardware platforms, since they use the same NPU. Banana Pi also shared a post on X with a video showing DeepSeek R1 (Qwen 1.5B) running on the Banana Pi BPI-M7 board (RK3588).

#DeepSeek is perfectly adapted and operates efficiently on #BananaPi BPI-M7 (#Siger7) #Rockchip #RK3588 #SBC https://t.co/tlNXB2KjfN pic.twitter.com/W24zaW3OH5

— Banana pi Open Source Hardware (@sinovoip) February 8, 2025

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.
