YOLO is one of the most popular computer vision models for edge AI. It detects multiple objects and works out of the box for the objects it has been trained on. But adding another object typically involves a lot of work, as you’d need to collect a dataset, manually annotate the objects you want to detect, train the network, and then possibly quantize it for deployment on an edge AI accelerator.

This is basically true for all computer vision models, and we’ve already seen Edge Impulse facilitate the annotation process with GPT-4o and NVIDIA TAO to train TinyML models for microcontrollers. However, researchers at jevois.org have managed something even more impressive with YOLO-JeVois “open-vocabulary object detection”. Based on Tencent AI Lab’s YOLO-World, it lets you add new objects to YOLO at runtime by simply typing words or selecting part of the image. Class definitions are updated on the fly in under a second, and YOLO-JeVois does not need to be re-parameterized or re-quantized.

Laurent Itti, JeVois founder and professor of Computer Science, Psychology, and Neuroscience at the University of Southern California, provides more insights and explains how YOLO-JeVois works:

YOLO-World (from Tencent AI Lab) started to address this by letting you define any class you like for object detection, by name. No need for an annotated training dataset; just describe in words what you want to detect. Your custom class definitions get embedded into the network to make it run fast; this is called re-parameterization. The problem arises when you want to quantize your network to run it on a mobile device or hardware accelerator. Each time you change class definitions, you need to re-parameterize and then re-quantize the network. Quantization requires a sample dataset of your desired objects to set the quantization parameters, and typically takes several hours. So, when quantizing for edge AI, we lose the convenience of YOLO-World: we still need a dataset for the new classes, and we still need to spend hours quantizing the network every time we change the classes.
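To illustrate why quantization depends on sample data, here is a minimal sketch of min/max calibration for 8-bit asymmetric post-training quantization, one common scheme. It is an assumption for illustration only, not the quantizer used by the JeVois toolchain or the A311D NPU SDK, and the function names `calibrate`, `quantize`, and `dequantize` are hypothetical.

```python
# Hypothetical sketch: min/max calibration for asymmetric uint8 post-training
# quantization. Not the JeVois or Amlogic NPU toolchain.
from typing import Iterable, List, Tuple


def calibrate(samples: Iterable[List[float]]) -> Tuple[float, int]:
    """Derive (scale, zero_point) from activations observed on sample data."""
    lo, hi = 0.0, 0.0  # start at 0 so that 0.0 stays exactly representable
    for activations in samples:
        lo = min(lo, min(activations))
        hi = max(hi, max(activations))
    scale = (hi - lo) / 255.0 or 1.0  # fall back to 1.0 if all samples are 0
    zero_point = round(-lo / scale)
    return scale, zero_point


def quantize(x: float, scale: float, zero_point: int) -> int:
    """Map a float to uint8, saturating anything outside the calibrated range."""
    return max(0, min(255, round(x / scale) + zero_point))


def dequantize(q: int, scale: float, zero_point: int) -> float:
    """Map a uint8 code back to an approximate float value."""
    return (q - zero_point) * scale


if __name__ == "__main__":
    # Toy "sample dataset": activations recorded for two calibration images.
    scale, zp = calibrate([[-1.0, 0.5, 2.0], [0.0, 3.0]])
    print(quantize(3.0, scale, zp))  # 255: top of the calibrated range
    # A new object producing larger activations saturates at the same code.
    print(quantize(5.0, scale, zp))  # 255
    print(dequantize(quantize(5.0, scale, zp), scale, zp))  # about 3.0, not 5.0
```

In this toy example, a value of 5.0 that never appeared during calibration comes back as roughly 3.0. That kind of saturation is one reason a network whose class definitions are baked into its weights would need re-calibrating, with suitable sample data, whenever those classes change.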

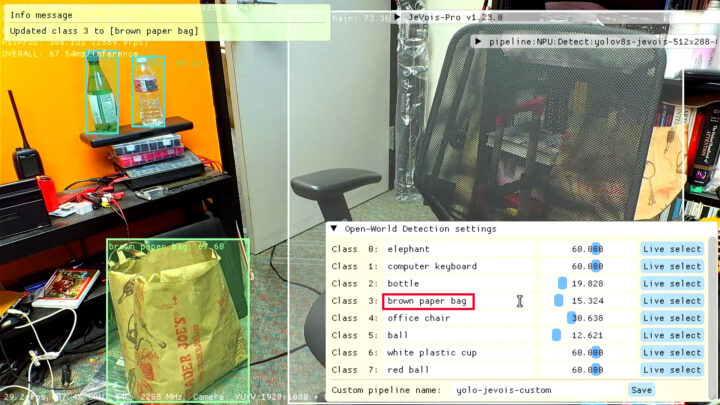

Researchers at jevois.org have now addressed this by modifying the inputs and the internal workflow of YOLO-World, leading to a new network, YOLO-JeVois. YOLO-JeVois takes partially processed class definition embeddings as new inputs, and the rest of the network is quantized once and for all. At runtime, it takes less than a second to update the class embeddings, and YOLO-JeVois does not need to be re-parameterized or re-quantized. The end result is a quantized, accelerated object detection network for edge AI whose class definitions can easily be updated at runtime. As an extra bonus, classes can also be defined at runtime by image: draw a bounding box around an object you like, and in less than a second you have a detector for that object.
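The idea of supplying class definitions as inputs rather than weights can be illustrated with a simplified sketch: a fixed (on device, quantized) network produces an embedding for each candidate region, and each region is labelled with the class whose embedding is most similar. This is a toy under stated assumptions: plain cosine similarity, hypothetical 3-dimensional vectors, and hypothetical function names. YOLO-World’s actual detection head and YOLO-JeVois’s modified inputs are more involved, and in YOLO-World the text embeddings come from a CLIP text encoder.

```python
# Hypothetical sketch: an open-vocabulary classifier whose class embeddings
# are runtime inputs rather than weights. Toy 3-D vectors stand in for real
# region and text embeddings.
import math
from typing import Dict, List

Vector = List[float]


def normalize(v: Vector) -> Vector:
    norm = math.sqrt(sum(x * x for x in v)) or 1.0  # guard against zero vectors
    return [x / norm for x in v]


def classify(region: Vector, classes: Dict[str, Vector]) -> str:
    """Return the class whose embedding has the highest cosine similarity."""
    r = normalize(region)
    best_name, best_score = "", -math.inf
    for name, embedding in classes.items():
        score = sum(a * b for a, b in zip(r, normalize(embedding)))
        if score > best_score:
            best_name, best_score = name, score
    return best_name


if __name__ == "__main__":
    # Class embeddings, e.g. produced by a text encoder from the words typed.
    vocabulary = {"cat": [1.0, 0.0, 0.0], "dog": [0.0, 1.0, 0.0]}
    print(classify([0.9, 0.1, 0.0], vocabulary))  # cat
    # Adding a class only changes the input dictionary; no weights change.
    vocabulary["mug"] = [0.0, 0.2, 1.0]
    print(classify([0.1, 0.1, 0.9], vocabulary))  # mug
```

Adding “mug” only edits the dictionary passed to `classify`, so nothing in the fixed part of the sketch has to be re-quantized. In this sketch, defining a class by image would work the same way: the embedding of the region you draw a box around would simply be stored as a new class vector.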

The best way to appreciate it might be to watch the video below, which shows it running on the JeVois-Pro camera using the NPU built into the Amlogic A311D SoC.

The “open-vocabulary object detection on JeVois-Pro” feature is implemented in JeVois 1.23.0, which has just been released on GitHub. You can also check the JeVois 1.23.0 changelog on the documentation website.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager and starting to write daily news and reviews full time later in 2011.
