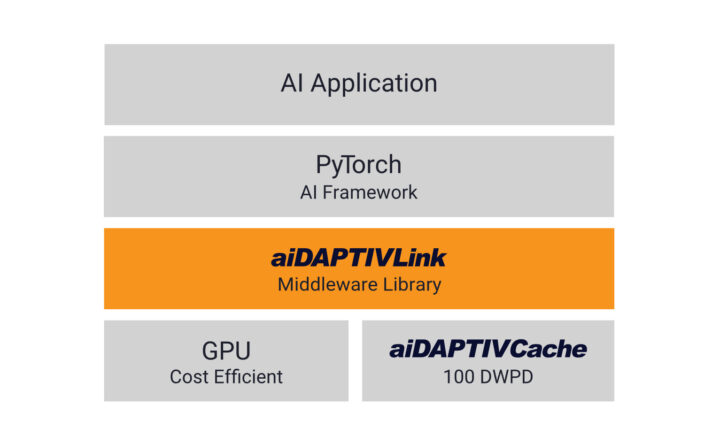

While looking for new and interesting products, I came across ADLINK’s DLAP Supreme series, a family of Edge AI devices built around the NVIDIA Jetson AGX Orin platform. The platform was not what caught my attention, though. The series supports something called aiDAPTIV+ technology, which made us curious. Digging further, we found that Phison’s aiDAPTIV+ is a hybrid hardware and software solution that uses readily available, low-cost NAND flash storage to extend GPU capabilities, with the goal of streamlining and scaling large language model (LLM) training for small and medium-sized businesses. This design lets organizations train their models on standard, off-the-shelf hardware, overcoming the memory limitations that arise with more complex models such as Llama-2 7B.

The solution supports models with up to 70B parameters and uses SLC NAND for low-latency, high-endurance storage (100 drive writes per day, or DWPD). It is designed to integrate easily with existing AI applications without requiring hardware changes, making it accessible to businesses looking for cost-efficient yet capable LLM training.

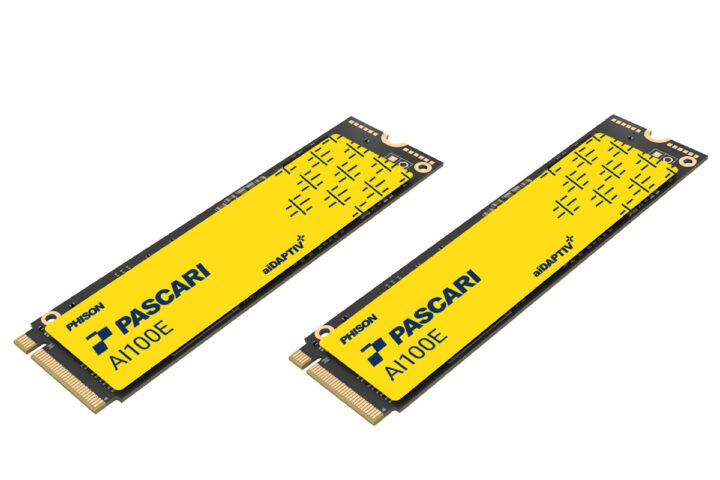

Phison says it uses a technique similar to “swapping” in operating systems: when a computer’s RAM is full, the OS moves less frequently used data to disk to free up space for active processes. In the same way, aiDAPTIV+ leverages the speed and large capacity of SSDs (Phison’s AI100E M.2 SSDs) to extend the memory available to GPUs. These specially designed SSDs, referred to as “aiDAPTIVCache”, are optimized for the high demands of AI processing.

The aiDAPTIV+ middleware, for its part, manages the data flow between GPU memory and the SSDs. It analyzes the AI model’s needs, identifies less active data segments, and transfers them to the SSDs, effectively creating a large virtual memory pool for the GPU. This allows much larger models to be trained than would fit in the GPUs’ onboard memory alone.
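To make the swapping idea more concrete, here is a minimal Python sketch of a two-tier memory pool, assuming a simple least-recently-used (LRU) eviction policy. The class name `TieredTensorStore`, the abstract capacity units, and the LRU policy are all hypothetical. Phison has not published how its middleware decides which segments are “less active”, and the dict named `ssd` merely stands in for the aiDAPTIVCache drives.

```python
from collections import OrderedDict


class TieredTensorStore:
    """Toy two-tier memory pool: a small, fast "GPU" tier backed by a large "SSD" tier.

    Hypothetical illustration: sizes are abstract units, and the SSD tier is a
    plain dict standing in for flash storage such as aiDAPTIVCache.
    """

    def __init__(self, gpu_capacity: int) -> None:
        self.gpu_capacity = gpu_capacity
        self.gpu: "OrderedDict[str, int]" = OrderedDict()  # resident segments, least recent first
        self.ssd: dict = {}  # segments evicted as "less active"
        self.swaps_out = 0
        self.swaps_in = 0

    def used(self) -> int:
        return sum(self.gpu.values())

    def _evict_until_fits(self, size: int) -> None:
        # Assumed LRU policy: spill the least recently used segment first.
        while self.gpu and self.used() + size > self.gpu_capacity:
            name, seg_size = self.gpu.popitem(last=False)
            self.ssd[name] = seg_size
            self.swaps_out += 1

    def access(self, name: str, size: int) -> None:
        """Make segment `name` resident in the GPU tier, swapping as needed."""
        if size > self.gpu_capacity:
            raise ValueError(f"segment {name!r} is larger than the GPU tier")
        if name in self.gpu:
            self.gpu.move_to_end(name)  # mark as most recently used
            return
        if name in self.ssd:
            size = self.ssd.pop(name)  # swap back in from the SSD tier
            self.swaps_in += 1
        self._evict_until_fits(size)
        self.gpu[name] = size


store = TieredTensorStore(gpu_capacity=3)
for segment in ["L0", "L1", "L2", "L3", "L0"]:
    store.access(segment, size=1)
print(list(store.gpu), sorted(store.ssd), store.swaps_out, store.swaps_in)
# → ['L2', 'L3', 'L0'] ['L1'] 2 1
```

Accessing `L3` pushes out the oldest segment, `L0`. Touching `L0` again brings it back from the SSD tier and evicts `L1` in turn. Every eviction is a write to flash, which is one reason fast, high-endurance SLC NAND suits this role.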

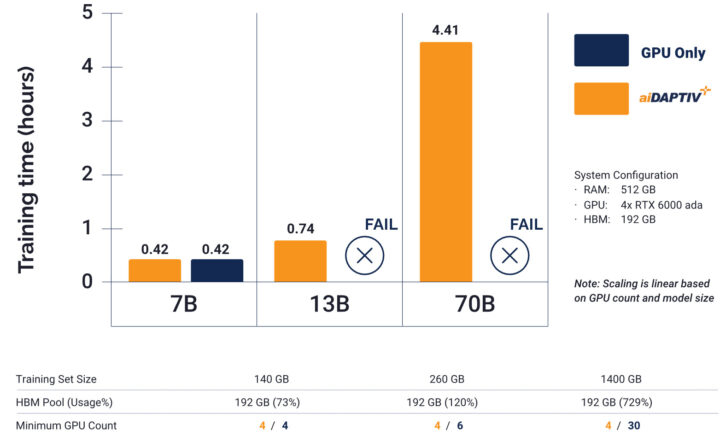

The chart shows the training time for large language models of three sizes (7B, 13B, and 70B), comparing traditional GPU-only training with the aiDAPTIV+ system. Training the 70B model on 4x RTX 6000 Ada GPUs fails with the GPUs alone, whereas the aiDAPTIV+ setup completes it in 4.41 hours. Phison attributes this to its middleware, which works around GPU memory limits by slicing the model, holding pending slices in aiDAPTIVCache, and efficiently swapping slices between the cache and the GPU.
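The slicing approach can be illustrated with a generic double-buffering schedule. The model is split into N slices, and while one slice computes on the GPU, the next one is prefetched from the cache tier, so only two slices need to be resident at any time. This is a hypothetical sketch of a common offloading pattern, not Phison’s implementation. The names `slice_schedule`, `count_loads`, and `peak_resident` are illustrative, and the sketch ignores gradients and optimizer states written back to the cache.

```python
from typing import List, Optional, Tuple

Step = Tuple[str, int, Optional[int]]  # (phase, slice computed, slice prefetched)


def slice_schedule(num_slices: int) -> List[Step]:
    """Forward pass over slices 0..N-1, then backward pass N-1..0.

    While one slice computes, the next slice in the order is prefetched
    (double buffering), so at most two slices are resident at once.
    """
    if num_slices < 1:
        raise ValueError("need at least one slice")
    order = [("fwd", i) for i in range(num_slices)]
    order += [("bwd", i) for i in reversed(range(num_slices))]
    schedule: List[Step] = []
    for idx, (phase, current) in enumerate(order):
        nxt = order[idx + 1][1] if idx + 1 < len(order) else None
        # The last forward slice is also the first backward slice, so it stays resident.
        prefetch = nxt if nxt != current else None
        schedule.append((phase, current, prefetch))
    return schedule


def count_loads(schedule: List[Step]) -> int:
    """Cache-to-GPU transfers: one initial load plus every prefetch."""
    return 1 + sum(1 for _, _, prefetch in schedule if prefetch is not None)


def peak_resident(schedule: List[Step]) -> int:
    """Largest number of slices held in GPU memory at the same time."""
    return max(len({cur, pre} - {None}) for _, cur, pre in schedule)


sched = slice_schedule(3)
for step in sched:
    print(step)
print("loads:", count_loads(sched), "peak resident slices:", peak_resident(sched))
```

The trade-off is that GPU memory only has to hold two slices instead of N, at the cost of 2N-1 transfers per training step in this simplified model. That is why the bandwidth of the cache tier matters and, once gradient and optimizer-state write-backs are included, its write endurance too.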

As mentioned at the beginning, ADLINK’s newly launched DLAP Supreme series uses Phison’s aiDAPTIV+ technology to address memory and performance limitations of edge devices running generative AI. The DLAP Supreme series achieves 8x faster inference and 4x longer token lengths, and supports LLM training even on devices with limited memory, such as an NVIDIA Jetson AGX Orin 32GB running the Gemma 27B model. This allows edge devices to handle tasks that would usually require high-end GPUs such as the H100 or A100. Several companies, including ADLINK, Advantech, ASRock, ASUS, and GIGABYTE, have already incorporated Phison’s aiDAPTIV+ technology into their solutions.
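A quick back-of-the-envelope estimate shows why these configurations need offloading at all. The sketch relies on common rules of thumb rather than figures from Phison or ADLINK: about 2 bytes per parameter for fp16/bf16 weights, and about 16 bytes per parameter for mixed-precision training with the Adam optimizer, ignoring activations and KV cache. It uses nominal parameter counts, 48 GB per RTX 6000 Ada card, and the 32 GB of the Jetson AGX Orin 32GB, which is shared between the CPU and GPU.

```python
GB = 10**9  # decimal gigabytes

# Assumed bytes per parameter (rules of thumb; activations and KV cache excluded):
FP16_WEIGHTS_ONLY = 2  # inference with fp16/bf16 weights
MIXED_PRECISION_ADAM = 16  # fp16 weights (2) + fp16 grads (2) + fp32 master copy (4) + Adam m, v (4 + 4)


def footprint_bytes(num_params: int, bytes_per_param: int) -> int:
    """Approximate memory needed to hold a model's state."""
    return num_params * bytes_per_param


def fits(num_params: int, bytes_per_param: int, memory_bytes: int) -> bool:
    return footprint_bytes(num_params, bytes_per_param) <= memory_bytes


cases = {
    # name: (parameters, bytes per parameter, memory available)
    "70B training on 4x RTX 6000 Ada": (70 * 10**9, MIXED_PRECISION_ADAM, 4 * 48 * GB),
    "Gemma 27B inference (fp16 weights) on Jetson AGX Orin 32GB": (27 * 10**9, FP16_WEIGHTS_ONLY, 32 * GB),
    "Gemma 27B training on Jetson AGX Orin 32GB": (27 * 10**9, MIXED_PRECISION_ADAM, 32 * GB),
}

# Every case prints fits=False.
for name, (params, bpp, mem) in cases.items():
    need = footprint_bytes(params, bpp)
    print(f"{name}: need ~{need / GB:.0f} GB, have {mem / GB:.0f} GB, fits={fits(params, bpp, mem)}")
```

Even before counting activations, the 70B training state is roughly six times larger than the combined memory of four RTX 6000 Ada cards, so something has to spill to another tier.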

Debashis Das is a technical content writer and embedded engineer with over five years of experience in the industry. With expertise in Embedded C, PCB design, and SEO optimization, he effectively blends difficult technical topics with clear communication.
