Firefly has recently introduced the CSB1-N10 series AI cluster servers designed for applications such as natural language processing, robotics, and image generation. These 1U rack-mounted servers are ideal for data centers, private servers, and edge deployments.

Each server has ten compute nodes plus a control node, built around either energy-efficient processors (Rockchip RK3588, RK3576, or SOPHON BM1688) or high-performance NVIDIA Jetson modules (Orin Nano, Orin NX). With 60 to 1000 TOPS of INT8 AI performance, the CSB1-N10 servers can handle the demands of large AI models, including language models like Gemma-2B and Llama3, as well as visual models like EfficientViT and Stable Diffusion.

CSB1-N10 series specifications

| Features | CSB1-N10S1688 | CSB1-N10R3588 | CSB1-N10R3576 | CSB1-N10NOrinNano | CSB1-N10NOrinNX |

|---|---|---|---|---|---|

| SoC | Octa-core BM1688 | Octa-core RK3588 | Octa-core RK3576 | Hexa-core Jetson Orin Nano | Octa-core Jetson Orin NX |

| Frequency | 1.6 GHz | 2.4 GHz | 2.2 GHz | 1.5 GHz | 2.0 GHz |

| Number of Nodes | 10 nodes + 1 control node |

||||

| Control Node Processor | RK3588, 2.4 GHz |

||||

| AI Computing Power (INT8) | 160 TOPS | 60 TOPS | 400 TOPS | 1000 TOPS |

|

| RAM per Compute Node | 8GB LPDDR4 (optional up to 16GB) | 16GB LPDDR4 (optional up to 32GB) | 8GB LPDDR4 (optional up to 16GB) | 8GB LPDDR5 | 16GB LPDDR5 |

| Storage per Node | 32GB eMMC (optional up to 256GB) | 256GB eMMC (optional 16GB and up) | 64GB eMMC (optional up to 256GB) | 256GB PCIe NVMe SSD |

|

| Video Encoding/Decoding | 160 channels of H.265/H.264 1080p@30fps decoding, 100 channels encoding | 10 channels of H.265 8K@30fps decoding, 10 channels encoding |

|||

| Storage Expansion | SATA 3.0/SSD hard drive slot |

||||

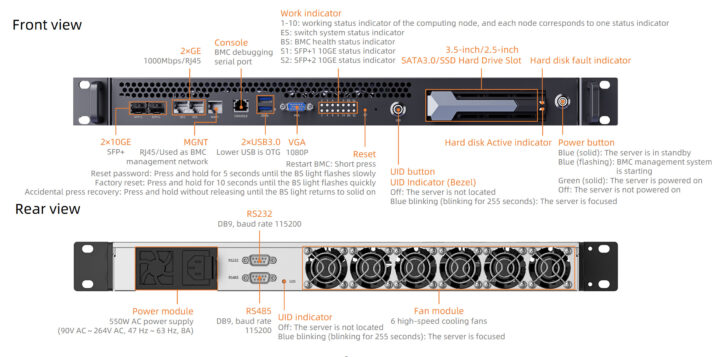

| Ethernet | 2 × 10G Ethernet (SFP+) 2 × Gigabit Ethernet (RJ45) 1 × Gigabit Ethernet (RJ45, MGNT is used as BMC management network) |

||||

| Console | RJ45 console port |

||||

| Display | VGA up to 1080p60 |

||||

| USB | 2 × USB3.0 |

||||

| Button | Reset, UID, power button |

||||

| Misc | 1 × RS232 (DB9, baud rate 115200) 1 × RS485 (DB9, baud rate 115200 |

||||

| Cooling | 6 high-speed fans |

||||

| Power Supply | 550W (non-hot-swappable) |

||||

| Form Factor | 1U rack-mounted |

||||

| Physical Dimensions | 420×421.3×44.4mm |

||||

| Operating Temperature | 0°C ~ 45°C |

||||

| Deep Learning Frameworks | TensorFlow, PyTorch, PaddlePaddle, ONNX, Caffe | cuDNN frameworks: TensorFlow, PyTorch, MATLAB, etc. |

|||

| Large Model Support | Transformer-based LLMs | LLaMa3, Phi-3 Mini, and vision models |

|||
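The cluster-level figures in the specification table follow from the ten compute nodes. As a rough sketch, the per-node AI performance and the combined compute-node RAM can be worked out as shown here. The names `MODELS` and `per_node_breakdown` are made up for illustration, the RAM values are the base configurations, and the 60 TOPS figure is assumed to apply to both Rockchip models.

```python
# Figures copied from the specification table (base configurations).
# Assumption: the 60 TOPS entry covers both the RK3588 and RK3576 models.
COMPUTE_NODES = 10  # the control node is not counted

MODELS = {
    # model: (total INT8 TOPS, RAM per compute node in GB)
    "CSB1-N10S1688": (160, 8),
    "CSB1-N10R3588": (60, 16),
    "CSB1-N10R3576": (60, 8),
    "CSB1-N10NOrinNano": (400, 8),
    "CSB1-N10NOrinNX": (1000, 16),
}


def per_node_breakdown(models=MODELS, nodes=COMPUTE_NODES):
    """Return {model: (TOPS per node, total compute-node RAM in GB)}."""
    return {
        name: (total_tops / nodes, ram_gb * nodes)
        for name, (total_tops, ram_gb) in models.items()
    }


if __name__ == "__main__":
    for name, (tops, ram) in per_node_breakdown().items():
        print(f"{name}: {tops:g} TOPS per node, {ram} GB RAM across nodes")
```

Keep in mind that the RAM total is only a sum: each node is a separate module with its own memory, so a model has to fit into a single node's RAM unless the workload is split across nodes.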

All CSB1-N10 AI servers share the same interfaces and differ only in CPU, memory, storage, multimedia and AI capabilities, and related software support. Firefly has therefore likely made its Rockchip and SOPHON system-on-modules compatible with the NVIDIA Jetson SO-DIMM form factor. Indeed, we previously noted that Firefly designed the Core-1688JD4, Core-3576JD4, and Core-3588JD4 CPU modules with BM1688, RK3576, and RK3588 SoCs, so those are likely the ones used in the CSB1-N10 servers.

In terms of software, the CSB1-N10 series AI cluster servers feature a BMC system with Redfish, VNC, NTP, and monitoring for real-time management. They also support deep learning frameworks like TensorFlow, PyTorch, PaddlePaddle, ONNX, and Caffe, with cuDNN acceleration on the NVIDIA Jetson models, as well as Docker containers that enable private deployment of large models like Llama3, Phi-3 Mini, EfficientViT, and Stable Diffusion. Note that the wiki entries for the CSB1-N10 series are all empty, so you may want to refer to the documentation for the respective CPU modules mentioned above.
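Since Firefly's own documentation is empty, the following is only a generic sketch of what talking to a Redfish-capable BMC might look like. It relies on two things fixed by the Redfish standard: the `/redfish/v1/` service root and the `Members` / `@odata.id` layout of collections. The host address, the sample payload, and the helper names (`redfish_url`, `member_paths`, `fetch`) are hypothetical, and which resources the CSB1-N10 BMC actually exposes is not known.

```python
import json
import urllib.request

SERVICE_ROOT = "/redfish/v1/"  # fixed by the Redfish specification


def redfish_url(host, path=SERVICE_ROOT):
    """Build an HTTPS URL for a Redfish resource on the BMC."""
    return f"https://{host}{path}"


def member_paths(collection):
    """Extract the resource paths listed in a Redfish collection payload."""
    return [m["@odata.id"] for m in collection.get("Members", [])]


def fetch(host, path=SERVICE_ROOT, timeout=5):
    """GET a Redfish resource and decode the JSON body.

    Most resources below the service root need authentication, and a BMC
    often ships with a self-signed certificate; both are left out here.
    """
    req = urllib.request.Request(
        redfish_url(host, path), headers={"Accept": "application/json"}
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.load(resp)


if __name__ == "__main__":
    # Hypothetical payload in the standard collection shape; no network needed.
    sample = {
        "Members@odata.count": 2,
        "Members": [
            {"@odata.id": "/redfish/v1/Systems/1"},
            {"@odata.id": "/redfish/v1/Systems/2"},
        ],
    }
    print(member_paths(sample))
    print(redfish_url("192.0.2.10"))
```

On a real unit, `fetch()` would be pointed at the address of the MGNT port, with credentials and certificate handling added as the BMC requires.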

We have previously written about various clusters and cluster motherboards, including the Lichee Cluster 4A mini-ITX, the Mixtile Cluster Box, and the Turing Pi 2.5 mini-ITX motherboard, but the solutions provided by Firefly are more powerful and can be easily integrated into a rack.

Prices range from $2,059.00 for the CSB1-N10R3576 to $14,709.00 for the high-performance CSB1-N10NOrinNX Computing Server with 1000 TOPS of processing power. Purchasing links for all models are available on the products page, and you’ll also find some additional information in the datasheets for the Rockchip/SOPHGO and NVIDIA cluster servers.
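To put the two quoted prices in perspective, here is a small worked calculation of list price per TOPS. It assumes the CSB1-N10R3576 is rated at 60 TOPS, the low end of the quoted range; the dictionary and function names are illustrative only.

```python
# Prices and TOPS figures quoted in the article.
# Assumption: the CSB1-N10R3576 is rated at 60 TOPS, the low end of the range.
PRICES_USD = {"CSB1-N10R3576": 2059.00, "CSB1-N10NOrinNX": 14709.00}
TOPS = {"CSB1-N10R3576": 60, "CSB1-N10NOrinNX": 1000}


def usd_per_tops(model):
    """List price divided by the quoted INT8 TOPS figure."""
    return PRICES_USD[model] / TOPS[model]


if __name__ == "__main__":
    for model in PRICES_USD:
        print(f"{model}: ${usd_per_tops(model):.2f} per TOPS")
```

On these numbers the Orin NX server works out to about $14.71 per TOPS against about $34.32 for the RK3576 model. TOPS ratings from different vendors are not directly comparable, so this is a rough indicator at best.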

Debashis Das is a technical content writer and embedded engineer with over five years of experience in the industry. With expertise in Embedded C, PCB Design, and SEO optimization, he effectively blends difficult technical topics with clear communication.
