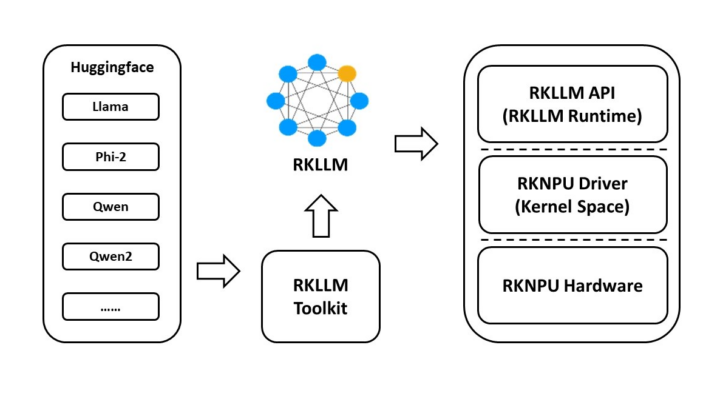

Rockchip RKLLM toolkit (also known as rknn-llm) is a software stack used to deploy generative AI models to Rockchip RK3588, RK3588S, or RK3576 SoC using the built-in NPU with 6 TOPS of AI performance.

We previously tested LLM’s on Rockchip RK3588 SBC using the Mali G610 GPU, and expected NPU support to come soon. A post on X by Orange Pi notified us that the RKLLM software stack had been released and worked on Orange Pi 5 family of single board computers and the Orange Pi CM5 system-on-module.

The Orange Pi 5 Pro‘s user manual provides instructions on page 433 of the 616-page document, but Radxa has similar instructions on their wiki explaining how to use RKLLM and deploy LLM to Rockchip RK3588(S) boards.

The stable version of the RKNN-LLM was released in May 2024 and currently supports the following models:

- TinyLLAMA 1.1B

- Qwen 1.8B

- Qwen2 0.5B

- Phi-2 2.7B

- Phi-3 3.8B

- ChatGLM3 6B

- Gemma 2B

- InternLM2 1.8B

- MiniCPM 2B

You’ll notice all models have between 0.5 and 3.8 billion parameters except for the ChatGLM3 with 6 billion parameters. By comparison, we previously tested Llama3 with 8 billion parameters on the Radxa Fogwise Airbox AI box with a more powerful 32 TOPS AI accelerator.

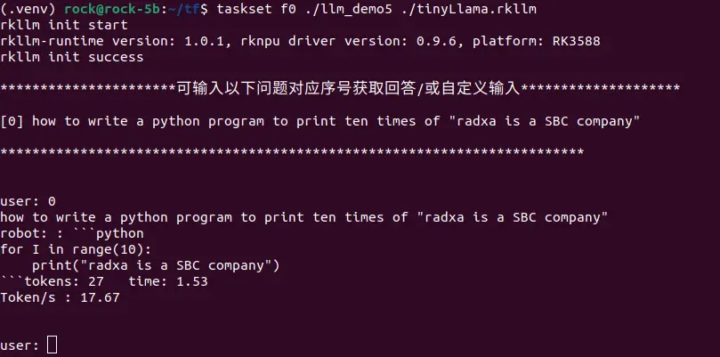

The screenshot above shows the TinyLLMA 1.1B running on the Radxa ROCK 5C at 17.67 token/s. That’s fast but obviously, it’s only possible because it’s a smaller model. It also supports Gradio to access the chatbot through a web interface. As we’ve seen in the Radxa Fogwise Airbox review, the performance decreases as we increase the parameters or answer length.

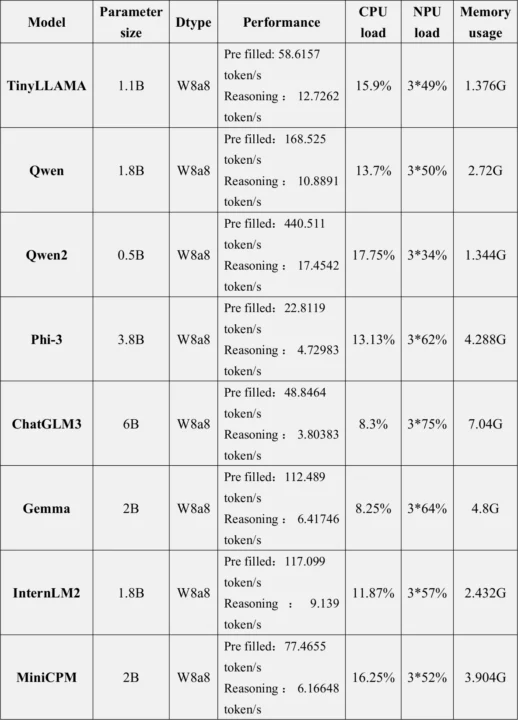

Radxa tested various models and reported the following performance on Rockchip RK3588(S) hardware:

- TinyLlama 1.1B – 15.03 tokens/s

- Qwen 1.8B – 14.18 tokens/s

- Phi3 3.8B – 6.46 tokens/s

- ChatGLM3 – 3.67 tokens/s

When we tested Llama 2 7B on the GPU of the Mixtile Blade 3 SBC, we achieved 2.8 token/s (decode) and 4.8 tokens/s (prefill). So it’s unclear whether the NPU does provide a noticeable benefit in terms of performance, but it may consume less power than the GPU and frees up the GPU for other tasks. The Orange Pi 5 Pro’s user manual provides additional numbers for performance, CPU and NPU loads, and memory usage.

While the “reasoning” (decoding) performance may not be that much better than on the GPU, it looks like pre-fill is significantly faster. Note that this was all done on the closed-source NPU driver, and work is being done for an open-source NPU driver for the RK3588/RK3576 SoC for which the kernel driver was submitted to mainline last month.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.

Support CNX Software! Donate via cryptocurrencies, become a Patron on Patreon, or purchase goods on Amazon or Aliexpress