Neural networks and other machine learning workloads are often associated with powerful processors and GPUs. However, as we've seen on this site, AI is also moving to the very edge, and the open-source BitNetMCU project further shows that it is possible to run low-bit quantized neural networks on low-end RISC-V microcontrollers such as the inexpensive CH32V003.

As a reminder, the CH32V003 is based on the QingKe RISC-V2A 32-bit core, which supports two levels of interrupt nesting. It is a compact, low-power, general-purpose 48 MHz microcontroller with 2KB of SRAM and 16KB of flash. The chip comes in TSSOP20, QFN20, SOP16, and SOP8 packages.

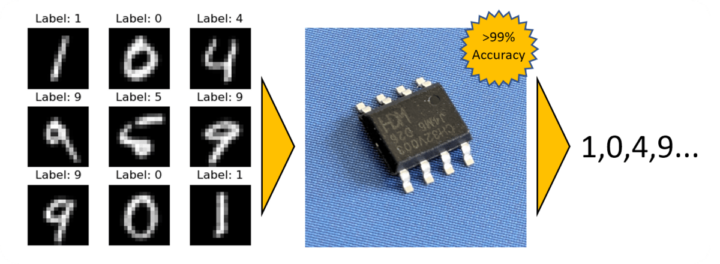

To run machine learning on the CH32V003, the BitNetMCU project relies on quantization-aware training (QAT) and fine-tunes both the inference code and the model structure. This makes it possible to surpass 99% test accuracy on the MNIST dataset downscaled to 16×16 pixels without using any multiplication instructions, which matters because the chip's RV32EC core lacks the RISC-V M extension and therefore has no hardware multiplier. That is an impressive result for a 48 MHz chip with only 2KB of RAM and 16KB of flash.
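To illustrate why low-bit weights remove the need for a multiplier, here is a minimal sketch in C. It assumes ternary weights stored as int8_t values in {-1, 0, +1} and small signed 4-bit weights; the function names are hypothetical and this is not code from BitNetMCU_inference.c. With ternary weights, a dot product reduces to additions and subtractions, and a 4-bit weight can be applied with a shift-and-add loop.

```c
#include <stddef.h>
#include <stdint.h>

/* Dot product with ternary weights in {-1, 0, +1} (hypothetical layout):
   each weight either adds, subtracts, or skips the activation. */
int32_t ternary_dot(const int8_t *x, const int8_t *w, size_t n)
{
    int32_t acc = 0;
    for (size_t i = 0; i < n; i++) {
        if (w[i] > 0)
            acc += x[i];        /* weight +1: add activation */
        else if (w[i] < 0)
            acc -= x[i];        /* weight -1: subtract activation */
        /* weight 0: nothing to do */
    }
    return acc;
}

/* Multiply an activation by a signed 4-bit weight in [-8, 7] using only
   additions: walk the weight's magnitude bit by bit, doubling the term. */
int32_t mul_shift_add(int32_t x, int8_t w)
{
    uint8_t mag = (uint8_t)(w < 0 ? -w : w);
    int32_t term = x;           /* x * 2^bit, built by repeated doubling */
    int32_t acc = 0;
    while (mag != 0) {
        if (mag & 1u)
            acc += term;        /* this bit of the weight is set */
        term += term;           /* double the term for the next bit */
        mag >>= 1;
    }
    return w < 0 ? -acc : acc;  /* restore the sign of the weight */
}
```

On a core without the M extension, a plain `x * w` in C would typically be compiled into a call to a software multiply routine, so encoding the weights this way keeps the inner loop to cheap add, subtract, and shift operations.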

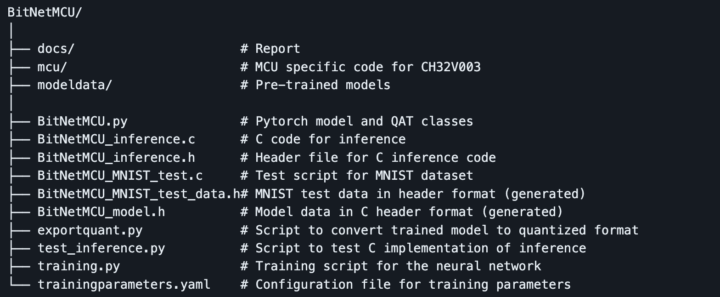

The training data pipeline for this project is based on PyTorch and consists of several Python scripts. These include:

- trainingparameters.yaml: a configuration file that sets all the parameters used to train the model

- training.py: a Python script that trains the model and stores it in the model data folder as a .pth file (weights are stored as floats, with quantization applied on the fly during training)

- exportquant.py: a script that converts the stored trained model into a quantized format and exports it to a C header file (BitNetMCU_model.h)

- test-inference.py (optional): a script that calls a DLL compiled from the inference code to test it and compare its results with the original Python model
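The export step can be pictured with a small sketch in C. It assumes ternary weights and the "absmean" scaling popularized by BitNet b1.58 (scale = mean of the absolute weights, then round and clip each scaled weight to {-1, 0, +1}); whether exportquant.py uses exactly this scale or this 2-bit encoding is an assumption, and the function names are hypothetical. The packed bytes are the kind of data that would end up as a constant array in a header such as BitNetMCU_model.h.

```c
#include <stddef.h>
#include <stdint.h>

/* Quantize n float weights to ternary {-1, 0, +1} with an absmean scale
   (assumed scheme). Returns the scale for rescaling the layer output. */
float quantize_ternary(const float *w, int8_t *q, size_t n)
{
    float sum = 0.0f;
    for (size_t i = 0; i < n; i++)
        sum += (w[i] < 0.0f) ? -w[i] : w[i];   /* accumulate |w| */
    float scale = sum / (float)n + 1e-8f;      /* epsilon avoids divide by zero */

    for (size_t i = 0; i < n; i++) {
        float v = w[i] / scale;
        /* round to nearest, then clip to [-1, 1] */
        q[i] = (int8_t)(v >= 0.5f ? 1 : (v <= -0.5f ? -1 : 0));
    }
    return scale;
}

/* Pack four 2-bit codes per byte, lowest weight in the lowest bits.
   Hypothetical encoding: 0b00 = 0, 0b01 = +1, 0b11 = -1. */
void pack_ternary(const int8_t *q, uint8_t *out, size_t n)
{
    for (size_t i = 0; i < (n + 3) / 4; i++)
        out[i] = 0;
    for (size_t i = 0; i < n; i++) {
        /* low two bits of the two's-complement value give 00, 01, or 11 */
        uint8_t code = (uint8_t)(q[i] & 0x3);
        out[i / 4] |= (uint8_t)(code << (2 * (i % 4)));
    }
}
```

Packing four weights per byte is what lets a model fit in 16KB of flash: 1,024 ternary weights occupy 256 bytes instead of 4KB as 32-bit floats.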

The inference engine (BitNetMCU_inference.c) is implemented in ANSI C, so you can use it on the CH32V003 RISC-V MCU or port it to other microcontrollers. You can test inference on 10 digits by compiling and running BitNetMCU_MNIST_test.c. The model data is in the BitNetMCU_model.h file, and the test data is in the BitNetMCU_MNIST_test_data.h file. Check out the code and follow the instructions in the readme.md file on GitHub to give machine learning on the CH32V003 a try.
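As a rough picture of what happens between layers and at the output, the sketch below applies ReLU, scales 32-bit accumulators back to 8-bit activations with a right shift, and picks the predicted digit with an argmax. It is a simplified illustration under stated assumptions: the function names and the fixed shift parameter are hypothetical and do not reflect the actual API or normalization used in BitNetMCU_inference.c.

```c
#include <stddef.h>
#include <stdint.h>

/* ReLU, then rescale 32-bit accumulators to int8 activations with a right
   shift (hypothetical fixed shift), so no multiply or divide is needed. */
void relu_requantize(const int32_t *acc, int8_t *out, size_t n, unsigned shift)
{
    for (size_t i = 0; i < n; i++) {
        int32_t v = acc[i] > 0 ? acc[i] : 0;   /* ReLU: clamp negatives to 0 */
        v >>= shift;                           /* divide by 2^shift (v >= 0) */
        out[i] = (int8_t)(v > 127 ? 127 : v);  /* saturate to the int8 range */
    }
}

/* Return the index of the largest output value, i.e. the predicted digit.
   Ties resolve to the lowest index. */
size_t argmax(const int32_t *logits, size_t n)
{
    size_t best = 0;
    for (size_t i = 1; i < n; i++)
        if (logits[i] > logits[best])
            best = i;
    return best;
}
```

A test program would run each sample image through the layers, call an argmax-style function on the 10 outputs, and compare the result with the expected label.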

Dennis Mwihia is a technical writer specializing in IoT, PCBs, SBCs, and single-board microcontrollers. He has worked with several companies in those areas and has over 5 years of research, writing, and software development experience.
