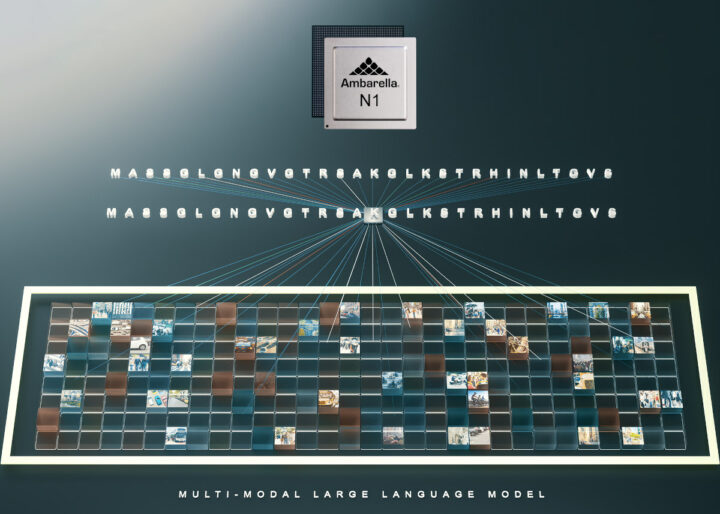

Ambarella has been working on adding support for generative AI and multi-modal large language models (LLMs) to its AI Edge processors, including the new 50W N1 SoC series with server-grade performance and the 5W CV72S for mid-range applications, at a fraction of the power-per-inference of leading GPU solutions.

Last year, generative AI was mostly offered as a cloud solution, but we’ve also seen LLMs running on single board computers thanks to open-source projects such as Llama2 and Whisper, and analysts such as Alexander Harrowell, Principal Analyst at Omdia, expect that “virtually every edge application will get enhanced by generative AI in the next 18 months”. The generative AI and LLM solutions running on Ambarella AI Edge processors will be used for video analytics, robotics, and various industrial applications.

Ambarella says its AI Edge SoC solutions are up to 3x more power-efficient per generated token than GPUs and other AI accelerators, while enabling generative AI workloads to run on the device for lower latency. The company also claims the N1 SoC can handle natural language processing, video processing, and AI vision workloads simultaneously at very low power, while standalone AI accelerators require a separate host processor and may not be as efficient.

Ambarella AI SoCs are supported by the company’s new Cooper Developer Platform, which includes pre-ported and optimized popular LLMs that will be available for download from the Cooper Model Garden. Some of the pre-ported models include Llama-2 and the Large Language and Video Assistant (LLaVA) model running on N1 for multi-modal vision analysis of up to 32 camera sources. As I understand it, this should allow the user to input a text search for specific items in the video streams, such as “red car”. Other applications include robot control with natural language commands, code generation, image generation, etc.

Ambarella further explains that the N1 series of SoCs is based on the CV3-HD architecture, initially developed for autonomous driving applications, which has been repurposed to run multi-modal LLMs with up to 34 billion parameters at low power. For instance, the N1 SoC is said to run Llama2-13B with up to 25 output tokens per second in single-streaming mode at under 50W of power.
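To put those two numbers in perspective, here is a quick back-of-the-envelope calculation of the energy used per generated token. It is only a rough sketch: the helper name `joules_per_token` is mine, and since the press release quotes "up to 25" tokens per second and "under 50W", the result is a ballpark figure rather than a measured value or a strict bound.

```python
def joules_per_token(power_watts: float, tokens_per_second: float) -> float:
    """Energy per generated token in joules (1 W = 1 J/s)."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return power_watts / tokens_per_second


# Figures quoted in the press release for Llama2-13B on the N1 SoC.
N1_POWER_W = 50.0       # "under 50W", taken at the quoted limit
N1_TOKENS_PER_S = 25.0  # "up to 25 output tokens per second"

n1_energy = joules_per_token(N1_POWER_W, N1_TOKENS_PER_S)
print(f"N1 (Llama2-13B): about {n1_energy:.1f} J per token")
```

With the quoted figures, that works out to roughly 2 joules per output token. The same function can be fed the power draw and throughput of any other platform to get a like-for-like comparison, provided both numbers were measured with the same model and settings.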

I could not find any product pages for either the N1 SoC or the Cooper Developer Platform at the time of writing, so the only information we have is from the press release. People attending CES 2024 will be able to see a multi-modal LLM demo running on an N1-based platform at the Ambarella booth.

Thanks to TLS for the tip.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager and starting to write daily news and reviews full time later in 2011.
