Modular Mojo is a new programming language designed for AI developers that is said to combine the usability of Python with the performance of C, with Modular claiming over 36,000 times the performance of Python on a matrix multiplication workload.

The Mojo programming language was not in the company’s initial plan. It came about when the founders, who were focused on building a platform to unify the world’s ML/AI infrastructure, realized that programming across the entire stack was too complicated and that they were writing a lot of MLIR (Multi-Level Intermediate Representation) by hand.

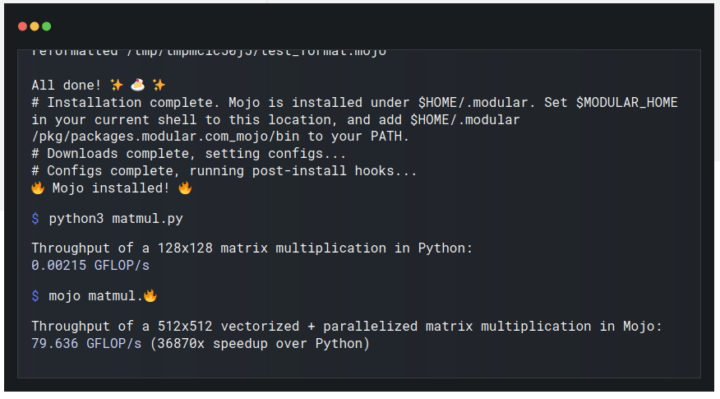

The “over 36,000 times speedup” claim comes from comparing the matmul.py script, which performs a 128×128 matrix multiplication in Python with a throughput of 0.00215 GFLOP/s, against another script doing a 512×512 vectorized and parallelized matrix multiplication in Mojo at 79.636 GFLOP/s.
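As a quick sanity check, the headline ratio can be recomputed from the two throughput figures quoted above. This is only a sketch: the `flops` helper is a name introduced here for illustration, and it assumes the usual count of 2·n³ floating-point operations for a naive n×n matrix multiplication.

```python
# Throughput figures quoted in the article (GFLOP/s).
python_gflops = 0.00215  # 128x128, plain Python
mojo_gflops = 79.636     # 512x512, vectorized + parallelized Mojo


def flops(n):
    """Floating-point operations in a naive n x n matmul (one multiply + one add per step)."""
    return 2 * n ** 3


speedup = mojo_gflops / python_gflops
work_ratio = flops(512) / flops(128)

print(f"Throughput ratio: {speedup:,.0f}x")
print(f"The 512x512 run performs {work_ratio:.0f}x more operations than the 128x128 run")
```

GFLOP/s is a rate, so it already normalizes for the amount of work, but the two runs still exercise the hardware very differently, which is why a like-for-like comparison would use the same matrix size.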

The claim looks dubious, and it’s odd that they used different matrix sizes, but some are ready to spend a lot of money on the company, as Modular claims to have raised $100 million. A discussion thread on Twitter/X suggests the results may not be that relevant because, as Sachin Shekhar puts it:

> All ML libraries use C under the hood. Python is merely an interface. Given, C is still being used, it isn’t easy to replace a programming language. You need to give something special. Nobody’s running big loops using native Python in production.

We can’t reproduce the test above just yet, because the Mojo SDK will only be released in September, but the documentation is available and includes a section about matrix multiplication. Since Mojo is designed as a superset of Python, both matmul scripts are identical:

```python
%%python
def matmul_python(C, A, B):
    for m in range(C.rows):
        for k in range(A.cols):
            for n in range(C.cols):
                C[m, n] += A[m, k] * B[k, n]
```

They just changed the function name to “matmul_untyped” in the Mojo script. Here’s the code for the Python matrix multiplication script:

```python
import numpy as np
from timeit import timeit

class Matrix:
    def __init__(self, value, rows, cols):
        self.value = value
        self.rows = rows
        self.cols = cols

    def __getitem__(self, idxs):
        return self.value[idxs[0]][idxs[1]]

    def __setitem__(self, idxs, value):
        self.value[idxs[0]][idxs[1]] = value

def benchmark_matmul_python(M, N, K):
    A = Matrix(list(np.random.rand(M, K)), M, K)
    B = Matrix(list(np.random.rand(K, N)), K, N)
    C = Matrix(list(np.zeros((M, N))), M, N)
    secs = timeit(lambda: matmul_python(C, A, B), number=2)/2
    gflops = ((2*M*N*K)/secs) / 1e9
    print(gflops, "GFLOP/s")
    return gflops

python_gflops = benchmark_matmul_python(128, 128, 128).to_float64()
```

The throughput is shown to be 0.0016717199881536883 GFLOP/s in the documentation.
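To make the throughput formula concrete, here is a minimal sketch of the same benchmark that runs with the standard library only. It is an adaptation, not the documentation's script: NumPy rows are swapped for plain lists of lists, the matrix size is reduced so it finishes quickly, and `gflops_for` and `benchmark` are names introduced here for illustration.

```python
import random
from timeit import timeit


class Matrix:
    """Matrix backed by a list of lists (stand-in for the NumPy rows in the original)."""

    def __init__(self, value, rows, cols):
        self.value = value
        self.rows = rows
        self.cols = cols

    def __getitem__(self, idxs):
        return self.value[idxs[0]][idxs[1]]

    def __setitem__(self, idxs, value):
        self.value[idxs[0]][idxs[1]] = value


def matmul_python(C, A, B):
    # Same naive triple loop as in the documentation.
    for m in range(C.rows):
        for k in range(A.cols):
            for n in range(C.cols):
                C[m, n] += A[m, k] * B[k, n]


def gflops_for(M, N, K, secs):
    # Each of the M*N*K inner iterations does one multiply and one add.
    return (2 * M * N * K) / secs / 1e9


def benchmark(M, N, K):
    A = Matrix([[random.random() for _ in range(K)] for _ in range(M)], M, K)
    B = Matrix([[random.random() for _ in range(N)] for _ in range(K)], K, N)
    C = Matrix([[0.0] * N for _ in range(M)], M, N)
    # Average over two runs, as the original benchmark does.
    secs = timeit(lambda: matmul_python(C, A, B), number=2) / 2
    return gflops_for(M, N, K, secs)


if __name__ == "__main__":
    print(benchmark(32, 32, 32), "GFLOP/s")
```

Working the formula backwards, a 128×128×128 multiplication is 2 × 128³ = 4,194,304 operations, so the documentation's figure of about 0.00167 GFLOP/s corresponds to roughly 2.5 seconds per multiplication in pure Python.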

Now we can look at the Mojo script which also imports modules specific to Mojo:

```mojo
from benchmark import Benchmark
from sys.intrinsics import strided_load
from utils.list import VariadicList
from math import div_ceil, min
from memory import memset_zero
from memory.unsafe import DTypePointer
from random import rand, random_float64
from sys.info import simdwidthof

fn matrix_getitem(self: object, i: object) raises -> object:
    return self.value[i]

fn matrix_setitem(self: object, i: object, value: object) raises -> object:
    self.value[i] = value
    return None

fn matrix_append(self: object, value: object) raises -> object:
    self.value.append(value)
    return None

fn matrix_init(rows: Int, cols: Int) raises -> object:
    let value = object([])
    return object(
        Attr("value", value),
        Attr("__getitem__", matrix_getitem),
        Attr("__setitem__", matrix_setitem),
        Attr("rows", rows),
        Attr("cols", cols),
        Attr("append", matrix_append),
    )

def benchmark_matmul_untyped(M: Int, N: Int, K: Int, python_gflops: Float64):
    C = matrix_init(M, N)
    A = matrix_init(M, K)
    B = matrix_init(K, N)
    for i in range(M):
        c_row = object([])
        b_row = object([])
        a_row = object([])
        for j in range(N):
            c_row.append(0.0)
            b_row.append(random_float64(-5, 5))
            a_row.append(random_float64(-5, 5))
        C.append(c_row)
        B.append(b_row)
        A.append(a_row)

    @parameter
    fn test_fn():
        try:
            _ = matmul_untyped(C, A, B)
        except:
            pass

    let secs = Float64(Benchmark().run[test_fn]()) / 1_000_000_000
    _ = (A, B, C)
    let gflops = ((2*M*N*K)/secs) / 1e9
    let speedup : Float64 = gflops / python_gflops
    print(gflops, "GFLOP/s, a", speedup.value, "x speedup over Python")

benchmark_matmul_untyped(128, 128, 128, python_gflops)
```

The output from the script is:

```
0.029258 GFLOP/s, a 17.501798 x speedup over Python
```

So when using the same 128×128 matrix, untyped Mojo is 17.5 times faster than Python. The 36,000 times speedup claim feels misleading to me, since it compares a vectorized, parallelized Mojo run on a larger 512×512 matrix against plain Python on a 128×128 one, and it makes Modular look dishonest. A 17.5 times speedup is still pretty good, but if most Python programs for AI workloads indeed rely on C libraries, it may not be that relevant.
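The figures quoted in this article can be put side by side in a few lines of Python. This is a rough sketch rather than a benchmark: the variable names are introduced here for illustration, and the second ratio mixes matrix sizes (128×128 versus 512×512), so it is only indicative.

```python
# Throughput figures quoted in the article (GFLOP/s).
python_gflops = 0.0016717199881536883  # plain Python, 128x128 (documentation)
untyped_gflops = 0.029258              # untyped Mojo, 128x128 (script output)
optimized_gflops = 79.636              # vectorized + parallelized Mojo, 512x512

# Like-for-like comparison: same code, same matrix size.
same_size_speedup = untyped_gflops / python_gflops

# Gap between untyped Mojo and the optimized Mojo run (different matrix sizes).
optimization_factor = optimized_gflops / untyped_gflops

print(f"Untyped Mojo vs Python (128x128): {same_size_speedup:.1f}x")
print(f"Optimized Mojo vs untyped Mojo:   {optimization_factor:.0f}x")
```

Read this way, most of the headline number would come from the typed, vectorized, and parallelized implementation and the different test setup rather than from running unmodified Python code under Mojo.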

Since Mojo is a superset of Python, I suppose it won’t run on low-end hardware like microcontroller boards, but it should be fine on any Linux platform.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.
