Lyra V2 is an update to the open-source Lyra audio codec introduced last year by Google, with a new architecture that offers scalable bitrates, better performance, and higher-quality audio, and that works on more platforms.

Under the hood, Lyra V2 is based on an end-to-end neural audio codec called SoundStream, with a “residual vector quantizer” (RVQ) sitting before and after the transmission channel. The RVQ can change the audio bitrate at any time by selecting the number of quantizers to use. Three bitrates are supported: 3.2 kbps, 6 kbps, and 9.2 kbps. Lyra V2 leverages artificial intelligence, and a TensorFlow Lite model enables it to run on Android phones and Linux, as well as on Mac and Windows, although support for the latter two is experimental. iOS and other embedded platforms are not supported at this time, but this may change in the future.
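To make the "number of quantizers" idea more concrete, here is a minimal, toy sketch of residual vector quantization in plain Python. It is not Lyra's or SoundStream's actual implementation: the 2-D vectors, the hand-made grid codebooks (`make_codebook`, `CODEBOOKS`), and all function names are assumptions made up for illustration, whereas the real codec learns its codebooks during training. The sketch only shows the principle that each extra quantizer stage encodes what the previous stages missed, so using more stages costs more bits but lowers the reconstruction error.

```python
from itertools import product


def make_codebook(scale):
    """Toy 2-D codebook: a 3x3 grid spaced by `scale` (it includes the zero vector)."""
    return [(a * scale, b * scale) for a, b in product((-1, 0, 1), repeat=2)]


# Hypothetical codebooks: each stage is twice as fine as the previous one.
CODEBOOKS = [make_codebook(0.5 ** k) for k in range(1, 5)]


def sq_dist(u, v):
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(u, v))


def rvq_encode(vector, num_quantizers):
    """Return one codeword index per stage, using only the first `num_quantizers` stages."""
    residual = tuple(vector)
    indices = []
    for codebook in CODEBOOKS[:num_quantizers]:
        # Pick the codeword closest to what is still left to encode.
        idx = min(range(len(codebook)), key=lambda i: sq_dist(residual, codebook[i]))
        indices.append(idx)
        # The next stage only sees the remaining error.
        residual = tuple(r - c for r, c in zip(residual, codebook[idx]))
    return indices


def rvq_decode(indices):
    """Sum the selected codewords; fewer indices means a coarser reconstruction."""
    out = (0.0, 0.0)
    for codebook, idx in zip(CODEBOOKS, indices):
        out = tuple(o + c for o, c in zip(out, codebook[idx]))
    return out


def reconstruction_error(vector, num_quantizers):
    """Euclidean distance between the input and its decoded approximation."""
    return sq_dist(vector, rvq_decode(rvq_encode(vector, num_quantizers))) ** 0.5


if __name__ == "__main__":
    sample = (0.83, -0.41)  # arbitrary test vector
    for n in range(1, len(CODEBOOKS) + 1):
        print(n, "quantizer(s):", rvq_encode(sample, n), reconstruction_error(sample, n))
```

Because the decoder simply sums whatever codewords it receives, the sender can drop the later stages at any point to lower the bitrate without switching to a different model, which is the property the article describes.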

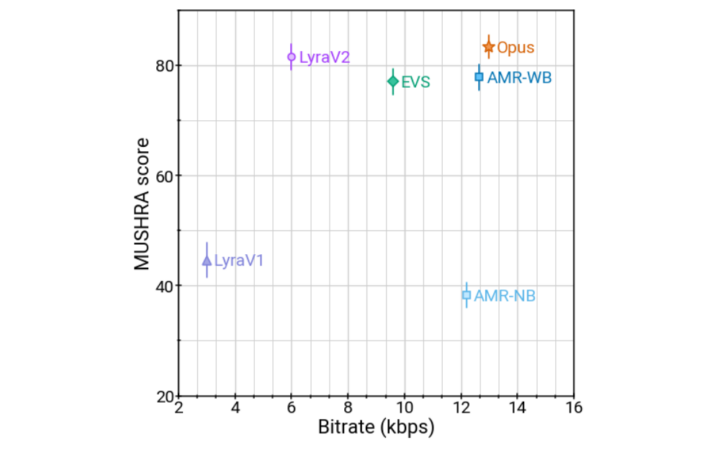

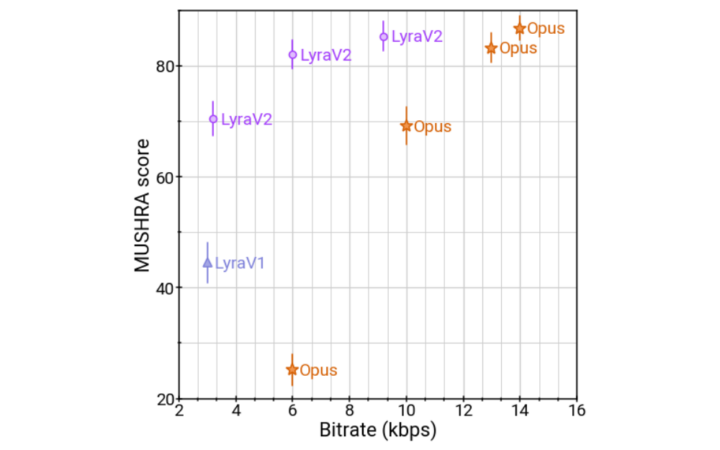

It gets more interesting once we compare Lyra V2 against other audio codecs such as Lyra (V1) and Opus: the new codec delivers higher quality (MUSHRA score) than both at a given bitrate, and the chart above shows Lyra V2 at 9.2 kbps offers about the same quality as Opus at 14 kbps.

Latency has also been improved from 100 ms to 20 ms, making the second-generation codec comparable to Opus for WebRTC, which has typical delays of 26.5 ms, 46.5 ms, and 66.5 ms. Lyra V2 also encodes and decodes five times faster than Lyra V1, which enables real-time audio encoding/decoding and lowers power consumption. For instance, the new audio codec takes 0.57 ms to encode and decode a 20 ms audio frame on a Pixel 6 Pro phone, or about 35 times faster than real time.
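The "35 times faster than real time" figure follows directly from the numbers above, and the same 20 ms frame duration also tells us roughly how many bits each frame carries at the three supported bitrates. The short sketch below works through that arithmetic. The constant and function names are hypothetical, and the bits-per-frame values assume that the whole bitrate is spent on 20 ms frames with no extra packet overhead, which the article does not state.

```python
FRAME_MS = 20                      # audio frame duration quoted above
CODEC_TIME_MS = 0.57               # encode + decode time per frame on a Pixel 6 Pro
BITRATES_BPS = (3200, 6000, 9200)  # the three supported bitrates


def real_time_factor(frame_ms, processing_ms):
    """How many times faster than real time a frame is processed."""
    return frame_ms / processing_ms


def bits_per_frame(bitrate_bps, frame_ms):
    """Bits available per frame, assuming no overhead beyond the codec payload."""
    return bitrate_bps * frame_ms // 1000


if __name__ == "__main__":
    print("real-time factor:", real_time_factor(FRAME_MS, CODEC_TIME_MS))
    for rate in BITRATES_BPS:
        print(rate, "bps ->", bits_per_frame(rate, FRAME_MS), "bits per frame")
```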

While Lyra V1 would compare to AMR-NB, Lyra V2 offers improved quality compared to Enhanced Voice Services (EVS) and Adaptive Multi-Rate Wideband (AMR-WB), and similar quality to Opus while using just about 50% to 60% of the bandwidth.

The source code for the Lyra V1/V2 implementation can be found on GitHub, with the C++ API mostly the same since the first release, except for a few changes such as the ability to change the bitrate during encoding. The model definitions and weights are also included as .tflite files.

More details and audio samples can be found on the Google Open Source Blog.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.
