FOMO used to stand for “Fear Of Missing Out” in my corner of the Internet, but Edge Impulse’s FOMO is something completely different: the “Faster Objects, More Objects” model is designed to reduce the footprint and improve the performance of object detection on resource-constrained embedded systems.

The company says FOMO is 30x faster than MobileNet SSD and works on systems with less than 200KB of RAM. Edge Impulse explains that FOMO sits between basic image classification (e.g. is there a face in the image?) and full object detection (how many faces are in the image, if any, where are they, and what size are they?). It is essentially a simplified form of object detection that tells us where objects are in the image, but not their sizes.

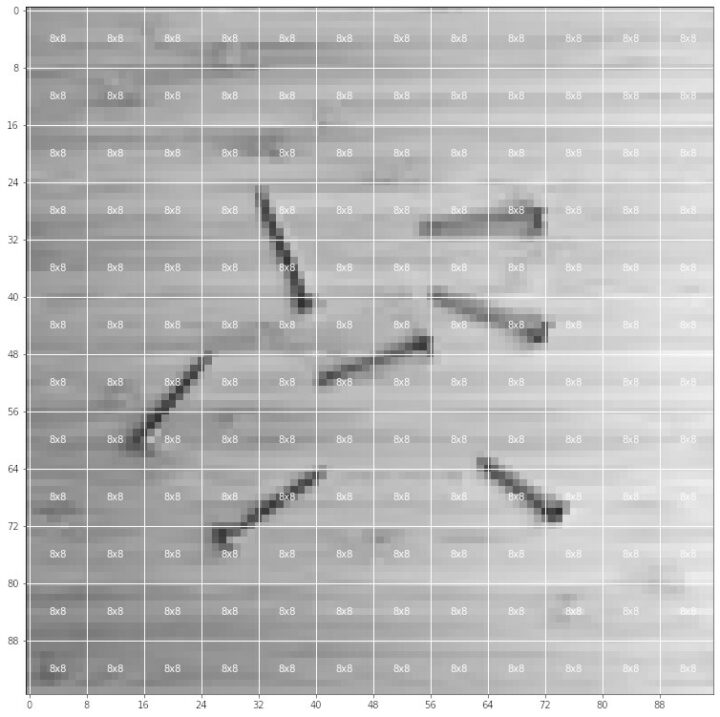

So instead of the usual bounding box, the running model shows each face’s position as a small circle marking its centroid. FOMO works on images as small as 96×96 pixels: it splits the image into a grid of cells (8×8 pixels by default) and runs image classification on every cell independently, in parallel. For a 96×96 image with 8×8-pixel cells, that gives a 12×12 grid.
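The grid arithmetic and the “centroid instead of bounding box” idea can be illustrated with a short sketch. It assumes the model’s output is a per-cell object score map (a heat map with one value per 8×8-pixel cell); the heat map below is filled in by hand, and the function names are hypothetical, not part of the Edge Impulse SDK. It also ignores any grouping of neighbouring cells the real post-processing may do.

```python
import numpy as np

# Assumption: 8-pixel cells, the default FOMO cell size described above.
CELL_SIZE = 8


def grid_shape(width: int, height: int, cell: int = CELL_SIZE) -> tuple[int, int]:
    """Number of (columns, rows) when the image is split into cell x cell squares."""
    return width // cell, height // cell


def centroids_from_heatmap(heatmap: np.ndarray, threshold: float = 0.5,
                           cell: int = CELL_SIZE) -> list[tuple[int, int]]:
    """Return (x, y) pixel centroids for every grid cell whose score exceeds
    `threshold`. Each cell is classified independently, so a detection is just
    the center of a 'hot' cell: we get a position, but no object size."""
    rows, cols = np.nonzero(heatmap > threshold)
    return [(int(c) * cell + cell // 2, int(r) * cell + cell // 2)
            for r, c in zip(rows, cols)]


print(grid_shape(96, 96))  # (12, 12)

# Hypothetical 12x12 score map with two objects.
heatmap = np.zeros((12, 12))
heatmap[2, 3] = 0.9   # object in row 2, column 3
heatmap[7, 10] = 0.8  # object in row 7, column 10
print(centroids_from_heatmap(heatmap))  # [(28, 20), (84, 60)]
```

Because the output is one score per cell rather than four box coordinates, the work per cell is a plain classification, which is where the savings over an SSD-style detector come from.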

The smaller the image, the lower the requirements. For instance, FOMO with a 96×96 grayscale input and MobileNetV2 (alpha 0.05) can run at about 10 fps on an 80 MHz Cortex-M4F while using less than 100KB of RAM. Developers should keep in mind FOMO’s limitations, which stem from splitting the image into a grid: it works best when objects are all of a similar size and are not too close to each other.
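Why closely spaced objects are a problem can be seen with a simple model in which each grid cell reports at most one object. This is a minimal sketch under that assumption, with hypothetical helper names; it only counts distinct cells and does not reproduce Edge Impulse’s actual post-processing.

```python
# Assumption: 8-pixel cells on a 96x96 input, one object reported per cell.
CELL_SIZE = 8


def cell_of(x: int, y: int, cell: int = CELL_SIZE) -> tuple[int, int]:
    """Grid cell (column, row) that an object's center falls into."""
    return x // cell, y // cell


def detectable_count(centers: list[tuple[int, int]], cell: int = CELL_SIZE) -> int:
    """Upper bound on how many objects a one-label-per-cell grid can report:
    objects whose centers share a cell collapse into a single detection."""
    return len({cell_of(x, y, cell) for x, y in centers})


# Two objects far apart land in different cells: both can be reported.
print(detectable_count([(10, 10), (60, 60)]))  # 2
# Two objects 3 pixels apart share cell (1, 1): they merge into one.
print(detectable_count([(10, 10), (13, 12)]))  # 1
```

The same grid view explains the size limitation: an object much larger than a cell covers many cells at once, while one much smaller than a cell contributes little signal to its cell.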

The video below shows 30 fps object detection (cans and bottles) on the Arduino Nicla Vision board powered by an STM32H7 Cortex-M7 microcontroller.

FOMO is not limited to microcontrollers: it can also run on Linux hardware when more performance is needed. For example, it can achieve 60 fps object detection on a Raspberry Pi 4 SBC.

FOMO works only on boards fully supported by Edge Impulse and equipped with a camera. More details can be found in the announcement and documentation.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager and writing daily news and reviews full time later in 2011.
