When Sipeed introduced MAIX-II Dock AIoT vision development kit, they asked help from the community to help reverse-engineer Allwinner V831‘s NPU in order to make an open-source AI toolchain based on NCNN.

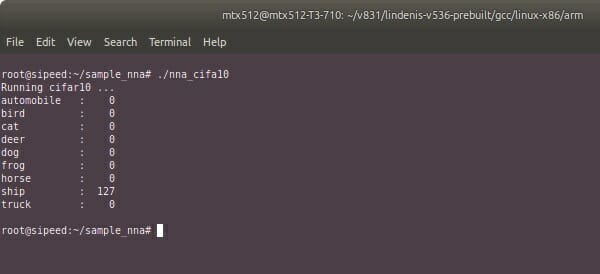

Sipeed already had decoded the NPU registers, and Jasbir offered help for the next step and received a free sample board to try it out. Good progress has been made and it’s now possible to detect objects like a boat using cifar10 object recognition sample.

Allwinner V831’s NPU is based on a customized implementation of NVIDIA Deep Learning Accelerator (NVDLA) open-source architecture, something that Allwinner (through Sipeed) asked us to remove from the initial announcement, and after reverse-engineering work, Jasbir determined the following key finding:

- The NPU clock defaults to 400 MHz, but can be set between 100 and 1200 MHz

- NPU is implemented with nv_small configuration (NV Small Model), and relies on shared system memory for all data operations.

- int8 and int16 are supported with int8 preferred for speed and limited on-board memory (64Mb)

- 64 MACs (Atomic-C * Atomic-K)

- Memory-mapped register programmable from userspace

- Physical address locations are required when referencing weights & input/output data locations, meaning kernel memory needs to be allocated and the physical addresses retrieved if accessed from userspace.

- NPU weights and input/output data follow a similar layout to the NVDLA private formats, so formats like nhwc or nchw must be transformed before being fed to the NPU.

Those findings allowed him to adapt the code for the cifar10 demo from Arm’s CMSIS_5 NN library, removing all Allwinner closed-source binaries in the process. You’ll find the source code on v831-npu repository on Github, and can check out Jasbir post to find out how to try it out provided you have an Allwinner V831 board on hand.

The current code supports direct convolutions, bias addition, relu/prelu, element wise operations, and max/average pooling, and there’s more work to be done including the development of a weight and input/output data conversion utility and integrating into an existing AI framework.

The good news is the work should also benefit other platform features an NVDLA based AI accelerator including Beagle V SBC that has just started to find its way into the hands of developers in the few days.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.

Support CNX Software! Donate via cryptocurrencies, become a Patron on Patreon, or purchase goods on Amazon or Aliexpress