Rockchip RK3399 and RK3328 processors are typically found in Chromebooks, single board computers, TV boxes, and all sorts of AIoT devices. If you ever wanted to build a cluster based on those processors, Firefly Cluster Server R2 packs up to 72 of the company's RK3399, RK3328, or even RK1808 NPU system-on-modules (SoMs) into a 2U rack cluster server enclosure, for a total of up to 432 Arm Cortex-A72/A53 cores, 288 GB RAM, and two 3.5-inch hard drives.
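The headline totals are simple multiplication: nine blade nodes of eight modules each give 72 modules, and each RK3399 module has six cores (2x Cortex-A72 + 4x Cortex-A53) and up to 4GB RAM. The short Python sketch below reproduces that arithmetic for each supported module. It assumes every slot holds the same module type with the largest RAM option and the default 32GB eMMC; the function and constant names are hypothetical, chosen only for illustration.

```python
"""Back-of-the-envelope totals for a fully populated Cluster Server R2.

Figures come from the specifications; the names used here are
hypothetical and exist only for this illustration.
"""

BLADES = 9               # up to 9 blade nodes
MODULES_PER_BLADE = 8    # 8 modules per blade node
RAM_GB_PER_MODULE = 4    # assumption: largest RAM option on every module
EMMC_GB_PER_MODULE = 32  # assumption: default eMMC capacity on every module

# CPU cores per supported module (RK3399: 2x Cortex-A72 + 4x Cortex-A53).
CORES_PER_MODULE = {
    "Core-3399-JD4": 6,
    "Core-3328-JD4": 4,
    "Core-1808-JD4": 2,
}


def cluster_totals(module: str) -> dict:
    """Return module, core, RAM, and eMMC totals when every slot holds `module`."""
    modules = BLADES * MODULES_PER_BLADE
    return {
        "modules": modules,
        "cores": modules * CORES_PER_MODULE[module],
        "ram_gb": modules * RAM_GB_PER_MODULE,
        "emmc_gb": modules * EMMC_GB_PER_MODULE,
    }


if __name__ == "__main__":
    for name in CORES_PER_MODULE:
        print(name, cluster_totals(name))
```

With RK3399 modules this yields the quoted 432 cores and 288 GB RAM; the same chassis filled with RK3328 or RK1808 modules would offer 288 or 144 cores respectively, with the same 288 GB RAM ceiling.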

Firefly Cluster Server R2 specifications:

- Supported Modules

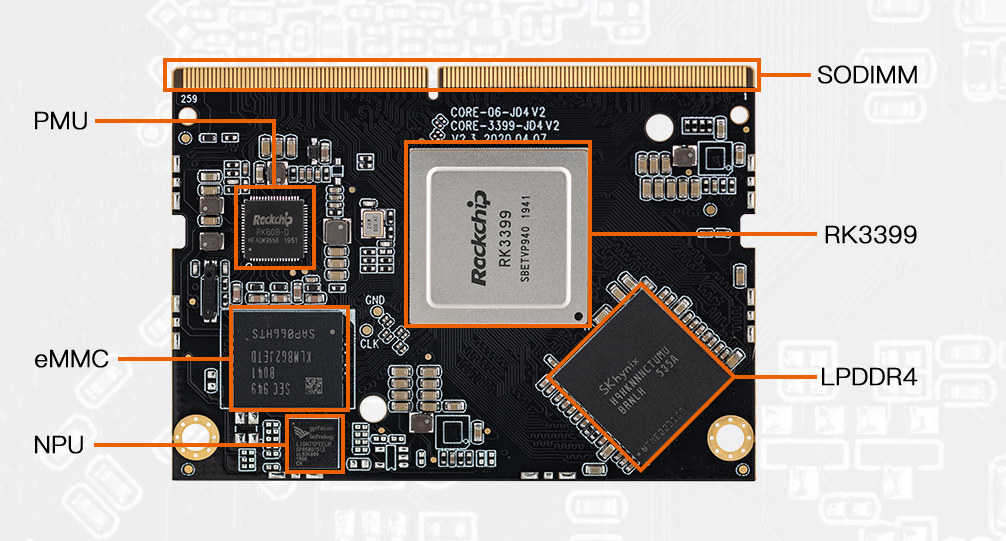

- Core-3399-JD4 with Rockchip RK3399 hexa-core Cortex-A72/A53 processor up to 1.5 GHz, up to 4GB RAM, and optional on-board 2.8 TOPS NPU (Gyrfalcon Lightspeeur SPR5801S)

- Core-3328-JD4 with Rockchip RK3328 quad-core Cortex-A53 processor up to 1.5 GHz, up to 4GB RAM

- Core-1808-JD4 with Rockchip RK1808 dual-core Cortex-A35 processor @ 1.6 GHz with integrated 3.0 TOPS NPU, up to 4GB RAM

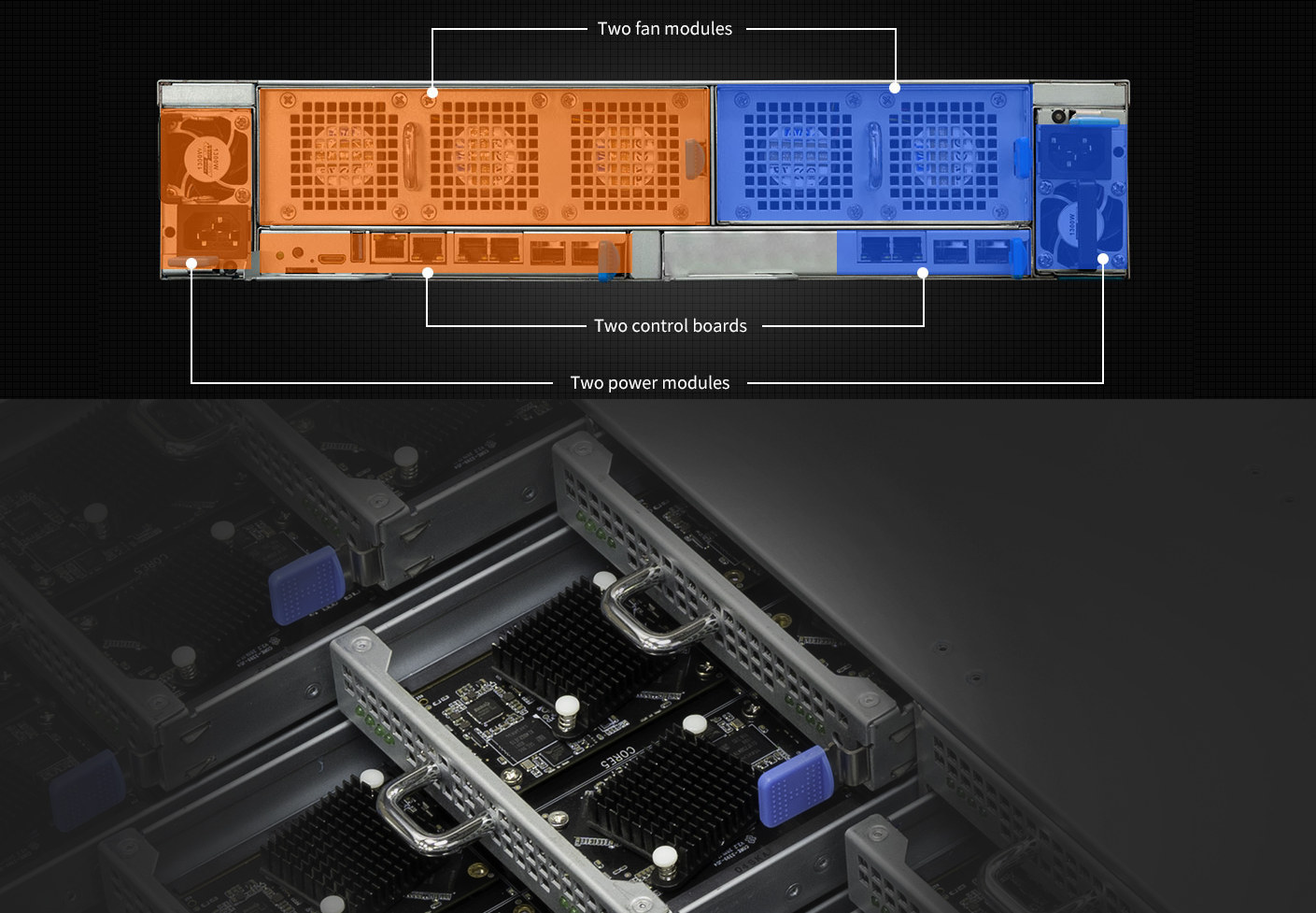

- Configuration – Up to 9x blade nodes with 8x modules each

- Storage
  - 32GB eMMC flash on each module (options: 8, 16, 64, or 128 GB)
  - 2x hot-swappable 3.5-inch SATA SSDs / hard disks on blade node #9
  - SD card slot

- BMC module:
  - Networking
    - 2x Gigabit Ethernet RJ45 ports (redundant)
    - 2x 10GbE SFP+ cages
    - Support for network isolation, link aggregation, network load balancing, and flow control
  - Video Output – Mini HDMI 2.0 up to 4Kp60 on master board
  - USB – 1x USB 3.0 host
  - Debugging – Console debug port

- Misc
  - Indicators – 1x master board LED, 8x LEDs on each blade (one per board), 2x fan LEDs, 2x power LEDs, 2x switch module LEDs, UID LED, BMC LED
  - Reset and UID buttons

- Fan modules – 3×2 redundancy fan module, 2×2 redundancy fan module

- Power Supply – Dual-channel redundant power design; 100–240V AC, 50/60Hz; 1300W or 800W (optional)

- Dimensions – 580 x 434 x 88.8mm (2U rack cluster server)

- Temperature Range – 0°C to 35°C

- Operating Humidity – 8% to 95% RH

Firefly says the cluster can run Android, Ubuntu, or other Linux distributions. Typical use cases include “cloud phone”, virtual desktop, edge computing, cloud gaming, cloud storage, blockchain, multi-channel video decoding, app cloning, etc. When fitted with the AI accelerators, it is similar to the SolidRun Janux GS31 Edge AI server, which is designed for real-time inference on multiple video streams for monitoring smart cities and infrastructure, intelligent enterprise and industrial video surveillance, object detection, recognition and classification, smart visual analysis, and more. There’s no Wiki for Cluster Server R2 just yet, but you may find some relevant information on the Wiki for an earlier generation of the cluster server.

There’s no price for Cluster Server R2 yet, but for reference, Firefly Cluster Server R1 is currently sold for $2,000 with eleven Core-3399-JD4 modules equipped with 4GB RAM and 32GB eMMC flash storage, so a complete NPU-less Cluster Server R2 system might cost around $8,000 to $10,000. Further details may be found on the product page.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager and starting to write daily news and reviews full time later in 2011.