Last week, Flex Logix announced the InferX X1 AI inference accelerator at the Linley Fall Conference 2020. Today, the company announced the InferX X1 SDK, two PCIe boards, and an M.2 board.

InferX X1 Edge Inference SDK

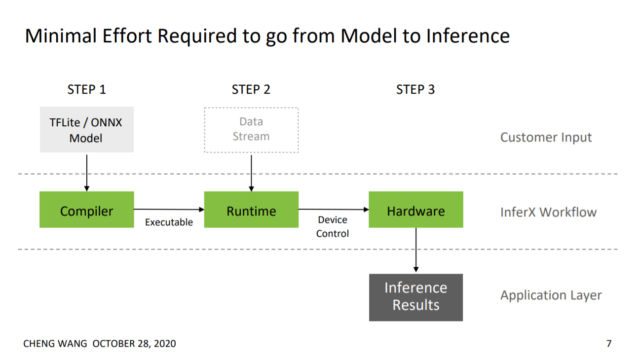

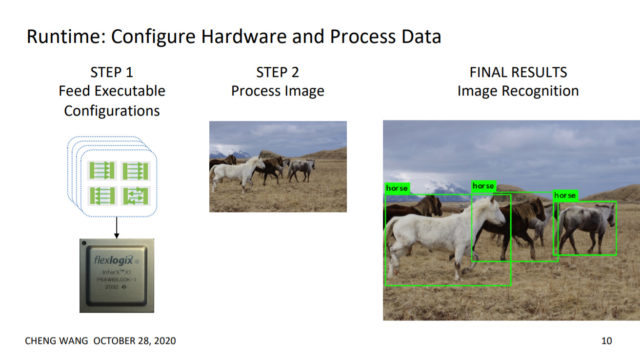

The InferX X1 Edge Inference SDK is designed to be simple to use. The compiler takes as input a high-level, hardware-agnostic neural network model in an open format, either TensorFlow Lite or ONNX. It maps the model onto the available X1 resources and generates a binary executable. The runtime then takes that binary together with an input stream, for example a live camera feed. The user specifies which compiled model to run, and the InferX X1 driver sends it to the hardware.

The runtime loads the generated binary onto the InferX X1, processes the input data stream with the user-specified model, and returns the output to the host. Because the model is selected at run time, users can work with more than one model.
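The flow above can be sketched as a toy Python model. This is only an illustration under stated assumptions, not the InferX SDK API: `compile_model`, `CompiledModel`, and `Runtime` are hypothetical names, and the "binary" is just a Python function standing in for code that would run on the X1. It shows the compile-once structure and how a runtime can keep several models loaded and route each input stream to the one the user picks.

```python
# Hypothetical sketch: none of these names come from the InferX X1 SDK.
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, List


@dataclass(frozen=True)
class CompiledModel:
    """Stand-in for the binary executable produced by the compiler."""
    name: str
    source_format: str  # "tflite" or "onnx", the two input formats in the article
    run: Callable[[float], float]  # toy placeholder for on-chip execution


def compile_model(name: str, source_format: str,
                  fn: Callable[[float], float]) -> CompiledModel:
    # The real compiler maps the model onto available X1 resources;
    # this sketch only checks that the input format is one of the two named.
    if source_format not in ("tflite", "onnx"):
        raise ValueError(f"unsupported format: {source_format}")
    return CompiledModel(name, source_format, fn)


class Runtime:
    """Keeps several compiled models and routes inputs to the chosen one."""

    def __init__(self) -> None:
        self._models: Dict[str, CompiledModel] = {}

    def load(self, model: CompiledModel) -> None:
        self._models[model.name] = model

    def infer(self, model_name: str, frames: Iterable[float]) -> List[float]:
        model = self._models[model_name]  # user-specified model
        # In the real system, the driver would send each frame to the hardware
        # and return the result to the host.
        return [model.run(frame) for frame in frames]
```

For example, loading an ONNX detector and a TensorFlow Lite classifier into the same `Runtime` lets the caller pick either one by name for each input stream.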

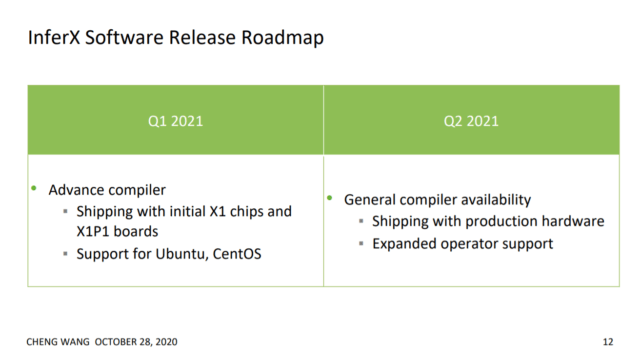

YOLOv3 can already be compiled with the framework, and a demonstration is expected in the coming weeks. By Q1 2021, the SDK should support popular customer models, along with initial support for the Ubuntu and CentOS Linux distributions.

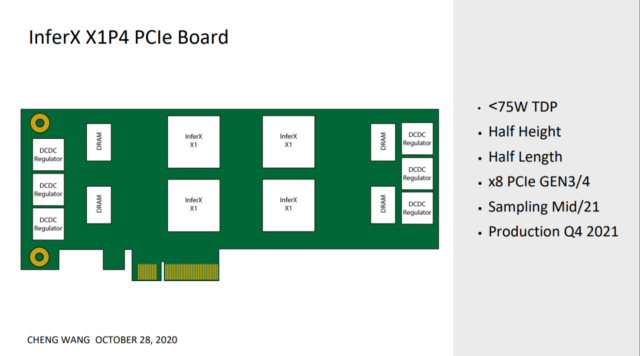

InferX X1P1 and X1P4 PCIe Boards

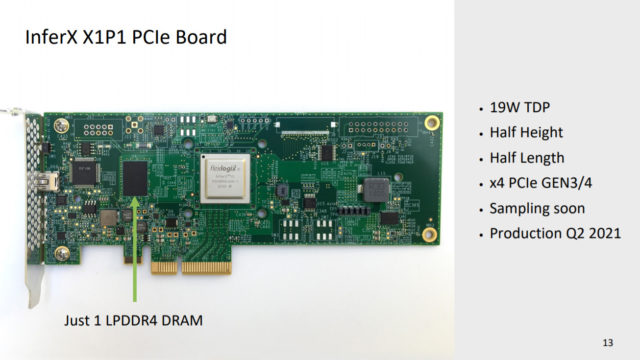

The InferX X1P1 PCIe card carries a single X1 chip. It uses the same half-height, half-length form factor as the Tesla T4, with a x4 PCIe Gen 3/4 interface.

The InferX X1P4 is about the same size as the X1P1, but whether its connector will be x8 or x16 has yet to be decided. It will carry four InferX X1 chips, each with its own attached DRAM, and its TDP is expected to be under 75 W.

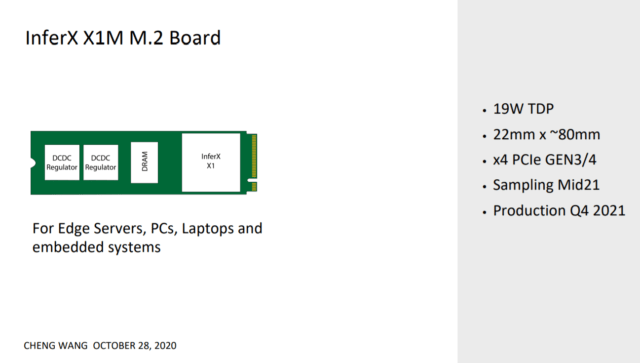

InferX X1M M.2 Board

Flex Logix also shared a few details about the InferX X1M M.2 board. It will measure 22 mm x ~80 mm. The estimated TDP is 19 W, although power consumption on customer models should be lower. The board targets edge servers, PCs, laptops, and embedded systems.

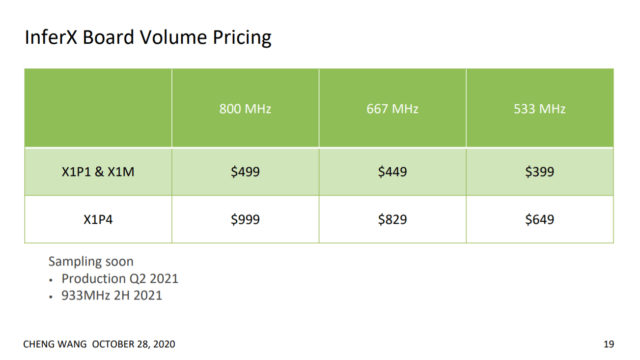

InferX Board Price and Availability

Both PCIe boards will be substantially cheaper than the Tesla T4. The InferX X1P1 will cost about a quarter as much as the T4, so it can deliver considerably more throughput per dollar. Customers who can use lower-frequency parts will be able to get them at an even lower cost.
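To see why a quarter of the price translates into more throughput per dollar, write throughput per dollar as throughput $T$ divided by price $P$. The only input taken from the slides is the price ratio $P_{\mathrm{X1P1}} = \tfrac{1}{4}P_{\mathrm{T4}}$; no throughput figures are assumed:

$$
\frac{T_{\mathrm{X1P1}}/P_{\mathrm{X1P1}}}{T_{\mathrm{T4}}/P_{\mathrm{T4}}}
= \frac{T_{\mathrm{X1P1}}}{T_{\mathrm{T4}}}\cdot\frac{P_{\mathrm{T4}}}{P_{\mathrm{X1P1}}}
= 4\,\frac{T_{\mathrm{X1P1}}}{T_{\mathrm{T4}}}
$$

So at equal throughput, the X1P1 would offer 4x the throughput per dollar; any throughput advantage on a given model multiplies that factor, while a throughput deficit reduces it.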

It will be interesting to see how these products fare once they actually reach the market. Flex Logix highlights cost, throughput, latency, and accuracy as their main advantages.

Source: All the figures used are from Flex Logix slides at the Linley Fall Conference 2020.

Abhishek Jadhav is an engineering student, RISC-V Ambassador, freelance tech writer, and leader of the Open Hardware Developer Community.

Support CNX Software! Donate via cryptocurrencies, become a Patron on Patreon, or purchase goods on Amazon or Aliexpress