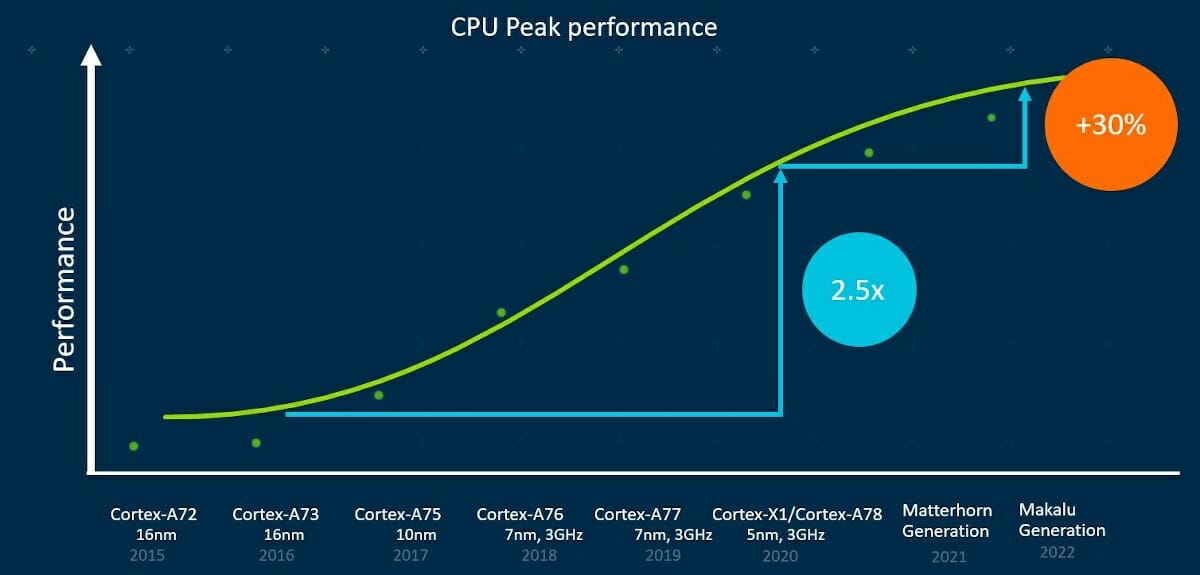

The Arm DevSummit 2020, previously known as Arm TechCon, is taking place virtually this week until Friday 9th. Besides the expected discussions about NVIDIA’s purchase of Arm, there have been some technical announcements, notably a high-performance CPU roadmap for the next two years: Matterhorn (Cortex-A79?) in 2021, followed in 2022 by Makalu (Cortex-A80?), the first 64-bit only Arm processor.

The company did not provide many details about the new cores, but it expects a peak performance uplift of up to 30% from the Cortex-A78 to the future Makalu generation. It should be noted that while performance keeps improving, the curve has flattened a bit.
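To put the 30% figure in perspective, here is a rough back-of-the-envelope sketch. It assumes the uplift is spread evenly over two generational steps (Cortex-A78 to Matterhorn, then Matterhorn to Makalu), which Arm did not state; the function name is made up for illustration.

```python
def per_generation_uplift(total_uplift: float, generations: int) -> float:
    """Equal per-generation gain that compounds to total_uplift.

    Assumption: every generation contributes the same relative gain.
    """
    return (1.0 + total_uplift) ** (1.0 / generations) - 1.0


# "Up to 30%" over two generations, per the roadmap figure quoted above.
uplift = per_generation_uplift(0.30, 2)
print(f"Per-generation peak uplift: {uplift:.1%}")  # about 14.0%
```

Under that assumption, each generation would bring roughly 14% more peak performance, and since 30% is an upper bound, real-world gains may well be lower.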

But the main announcement is that starting from 2022, all high-end Arm CPU cores (i.e. the “big” cores) will be 64-bit only. So far, most Cortex-A cores have supported both the 32-bit (AArch32) and 64-bit (AArch64) architectures, and as we noted four years ago, the latter not only makes it possible to address more memory, but 64-bit instructions can also boost performance by 15 to 30% compared to 32-bit instructions.
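The memory part of that claim is simple arithmetic, as the short sketch below shows. It computes the theoretical address space for a given pointer width and reports the pointer width of the Python interpreter running it. The helper names are illustrative, and actual AArch64 systems typically implement fewer virtual address bits than the full 64 (48 is common), so the 64-bit figure is an upper bound.

```python
import struct

GIB = 1024 ** 3


def address_space_bytes(pointer_bits: int) -> int:
    """Theoretical number of bytes addressable with pointers of this width."""
    return 2 ** pointer_bits


def native_pointer_bits() -> int:
    """Pointer width of the running interpreter: 32 or 64 on common platforms."""
    return struct.calcsize("P") * 8


print(f"32-bit: {address_space_bytes(32) // GIB} GiB")  # 4 GiB
print(f"64-bit: {address_space_bytes(64) // GIB} GiB (theoretical)")
print(f"This interpreter uses {native_pointer_bits()}-bit pointers")
```

A 32-bit process therefore tops out at 4 GiB of address space, which is already less than the RAM found in many high-end phones.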

Arm explains that it made the move because complex digital immersion experiences and compute-intensive workloads from future AI, XR (AR and VR), and high-fidelity mobile gaming require 64-bit for improved performance and compute capabilities. The move to 64-bit only should also lower the cost and time-to-market of mobile apps, since developers of apps targeting high-end devices will be able to focus on 64-bit development only.

https://twitter.com/ArmMobile/status/1313864805615370241

Since most phones will likely ship with DynamIQ SoCs combining 64-bit big cores and 32-bit/64-bit LITTLE Arm cores, “legacy” 32-bit apps should still be able to run on those phones, but only on the LITTLE cores. What’s a little confusing is that Arm talks about “64-bit only mobile devices expected to arrive by 2023”, implying 32-bit apps will not be supported at all. We’ll have to wait a little longer to understand the implications of the move.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager and starting to write daily news and reviews full time later in 2011.
