SolidRun CEx7-LX2160A COM Express module with NXP LX2160A 16-core Arm Cortex-A72 processor is already found in the company’s Janux GS31 Edge AI server in combination with several Gyrfalcon AI accelerators. Another company, Bamboo Systems, has now launched its own servers based on up to eight CEx7-LX2160A modules providing 128 Arm Cortex-A72 cores, support for up to 512GB DDR4 ECC, up to 64TB NVMe SSD storage, and delivering a maximum of 160Gb/s network bandwidth in a single rack unit.

Bamboo Systems B1000N Server specifications:

- B1004N – 1 Blade System

- B1008N – 2 Blade System

- N series Blade with 4x compute nodes each (i.e. 4x CEx7 LX2160A COM Express modules)

- Compute Node – NXP LX2160A 16-core Cortex-A72 processor for a total of 64 cores per blade.

- Memory – Up to 64GB ECC DDR4 per compute node or 256GB per blade.

- Storage – 1x 2.5” NVMe SSD PCIe up to 8TB per compute node, or 32TB per blade

- Network

    - 16-port 10/40Gb Ethernet non-blocking Layer 3 switch per blade

    - Uplink – 2x 10/40Gb QSFP ports per blade

- Misc – Six dual counter-rotating fans

- Power supply – 1 or 2 1300W AC/DC PFC 48V DC Power

- Dimensions – 778 x 483 x 43 mm (1U 19″ rack – Bamboo B1000 chassis)
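The headline figures for the 2-blade B1008N follow directly from the per-node numbers in the list above. Here is a minimal Python sketch of that arithmetic. The `Blade` class and `chassis_totals` function are names made up for illustration, and the script assumes the 160Gb/s figure is simply the sum of all uplink ports running at 40Gb/s, which matches the numbers but is not spelled out by Bamboo Systems.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Blade:
    """Per-blade figures taken from the specifications list (illustrative model)."""
    nodes: int = 4              # CEx7-LX2160A modules per blade
    cores_per_node: int = 16    # Cortex-A72 cores per LX2160A
    ram_gb_per_node: int = 64   # maximum ECC DDR4 per node
    ssd_tb_per_node: int = 8    # maximum NVMe SSD capacity per node
    uplink_ports: int = 2       # QSFP uplinks per blade
    uplink_gbps: int = 40       # assumption: each uplink runs at 40Gb/s


def chassis_totals(blades: int, blade: Blade = Blade()) -> dict:
    """Scale the per-node maximums up to a chassis with the given blade count."""
    nodes = blades * blade.nodes
    return {
        "nodes": nodes,
        "cores": nodes * blade.cores_per_node,
        "ram_gb": nodes * blade.ram_gb_per_node,
        "ssd_tb": nodes * blade.ssd_tb_per_node,
        "uplink_gbps": blades * blade.uplink_ports * blade.uplink_gbps,
    }


if __name__ == "__main__":
    for name, blades in (("B1004N", 1), ("B1008N", 2)):
        print(name, chassis_totals(blades))
```

With two blades this gives 8 nodes, 128 cores, 512GB RAM, 64TB storage, and 160Gb/s of uplink bandwidth, in line with the figures quoted in the introduction.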

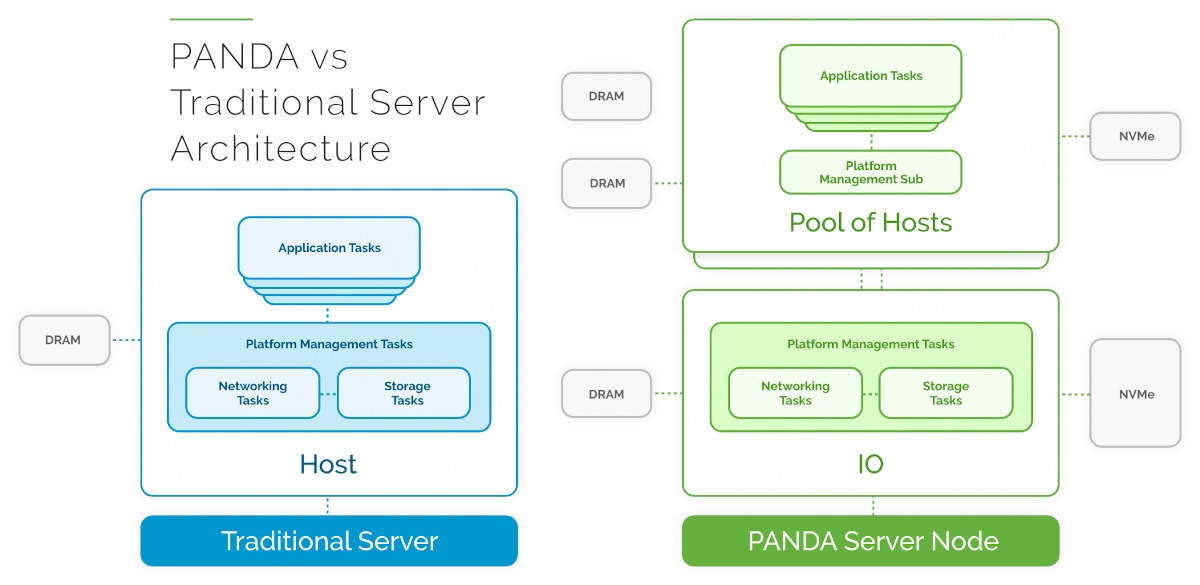

Bamboo B1008N is more like eight Linux servers in a box than a single 128-core server, and all eight servers interface using the Bamboo PANDA (Parallel Arm Node Designed Architecture) system architecture “utilizing embedded systems methodologies, to maximize compute throughput for modern microservices-based workloads while using as little power as possible and generating as little heat as possible”.

The Bamboo Pandamonium System is a management module with a UI and REST API used for configuration and control, for example, to turn off individual nodes to save power when they are not needed. Bamboo B1000N server is especially suited for tasks such as Kubernetes, edge computing, AI/ML simulation, and Platform-as-a-Service (PaaS).

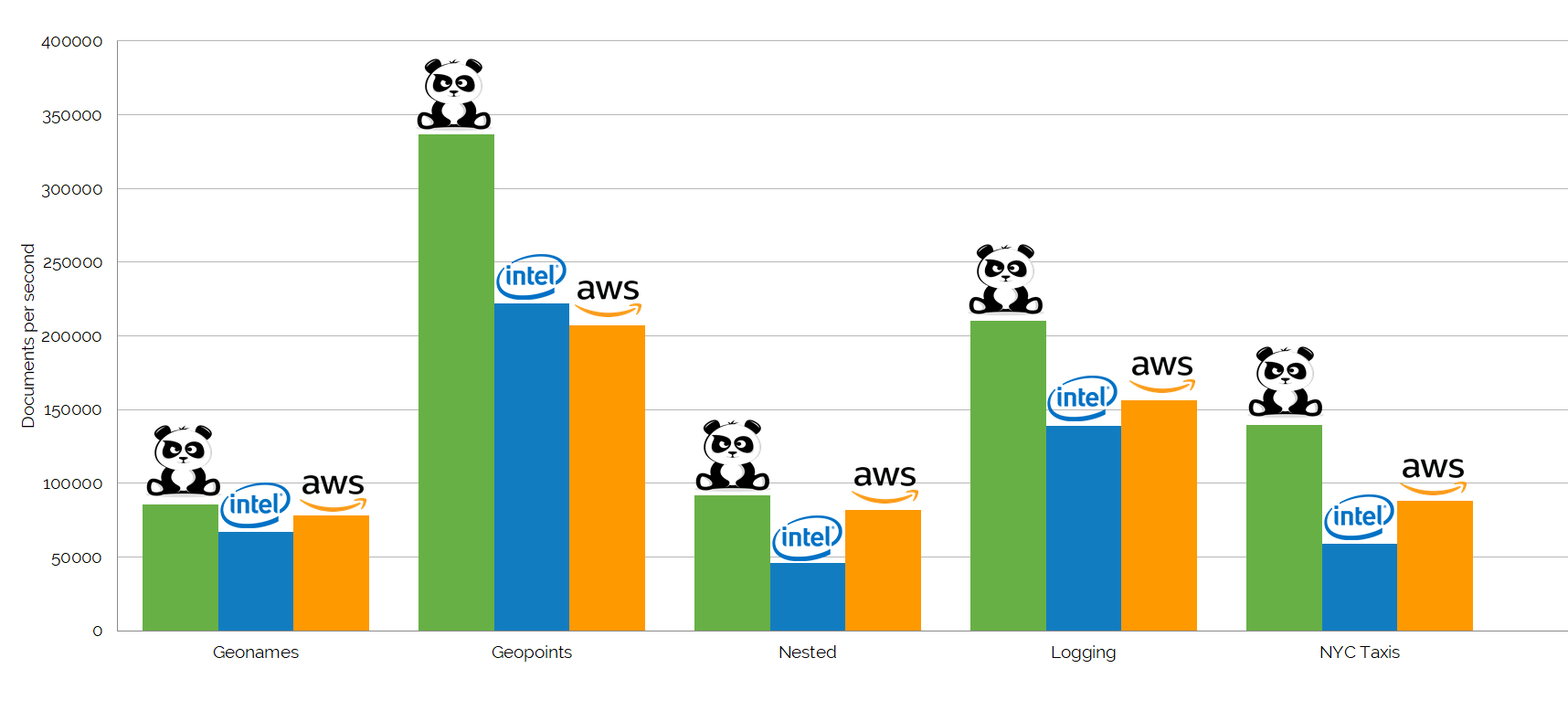

Using the industry-standard Elasticsearch benchmark, the company compared a B1008N server fitted with 64GB RAM and 32TB SSD to two alternatives: a server with three Xeon E3-1246v3 processors @ 3.5GHz and 32GB RAM, and two AWS m4.2xlarge instances, each with 8x Intel Xeon vCPUs @ 2.3 GHz and 32GB RAM.

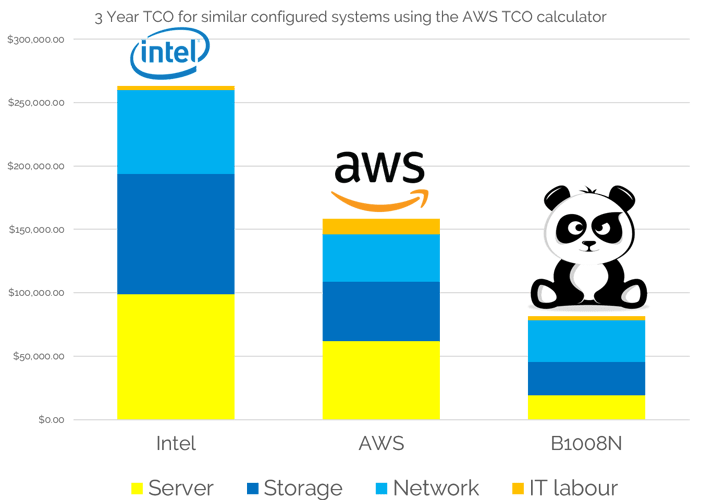

Bamboo Arm server’s performance is clearly competitive against those Intel solutions, but the real advantages are the lower acquisition cost (50% cheaper), the much lower energy consumption (75% lower), and the much more compact design, which saves up to 80% of the rack space. This shows in the total cost of ownership as calculated over a period of three years using the AWS TCO calculator.

B1008N costs about $80,000 to operate over 3 years, against around $155,000 for AWS and over $250,000 for the Intel Xeon servers. The server costs are quite straightforward as it’s the one-time cost of the hardware or AWS subscriptions over three years. Bamboo Systems explains B1008N network costs are 50% less than Intel as traffic is contained within the system thanks to the built-in Layer 3 switches. However, I don’t understand the higher storage costs for the Intel servers.
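To put those three-year totals in perspective, here is a short Python sketch that turns them into relative savings. It only uses the rounded figures quoted above ("about", "around" and "over"), so the results are approximate, and the `tco_usd` dictionary and `savings_vs` helper are illustrative names, not something taken from Bamboo Systems or the AWS TCO calculator.

```python
# Rounded three-year TCO figures quoted in the article, in US dollars.
tco_usd = {
    "Bamboo B1008N": 80_000,        # "about $80,000"
    "AWS": 155_000,                 # "around $155,000"
    "Intel Xeon servers": 250_000,  # "over $250,000", so a lower bound
}


def savings_vs(baseline: str, candidate: str = "Bamboo B1008N") -> float:
    """Return the fraction of the baseline TCO saved by choosing the candidate."""
    return 1 - tco_usd[candidate] / tco_usd[baseline]


if __name__ == "__main__":
    for baseline in ("AWS", "Intel Xeon servers"):
        print(f"Savings vs {baseline}: {savings_vs(baseline):.0%}")
```

On these numbers the B1008N comes out roughly 48% cheaper than AWS over three years. Since the Intel total is only given as "over $250,000", the 68% computed against the Xeon servers should be read as a minimum.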

There’s no availability or pricing information on the product page, but Cambridge Network reports Bamboo Systems B1000N Arm server will be available in the US and Europe in Q3 2020 with price starting at $9,995.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.
