Silicon vendors have been cheating in benchmarks for years, mostly by detecting when popular benchmarks run and boosting the performance of their processors for the duration of the test, without regard to battery life, in order to deliver the best possible score.

There was a lot of naming and shaming a few years back, and we had not heard much about benchmark cheating in the last couple of years. But AnandTech discovered MediaTek was at it again while comparing results between the European Oppo Reno3 (MediaTek Helio P95) and the Chinese model (MediaTek Dimensity 1000L): the older P95 model delivered much higher performance, contrary to expectations.

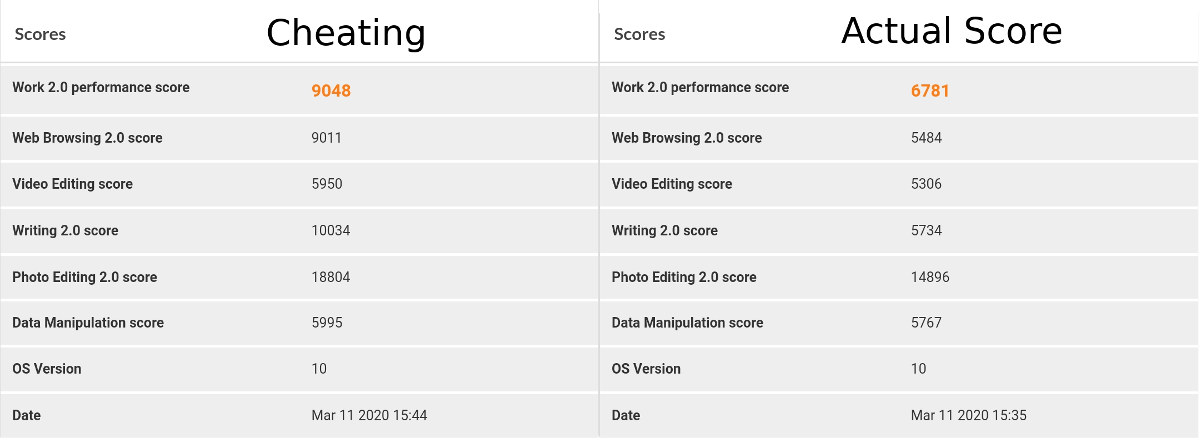

Cheating was suspected, so they asked UL to provide an anonymized version of PCMark, so that the firmware could not detect that the benchmark was running. Here are the before and after results.

As noted by AnandTech, the differences are really stunning: a 30% difference in the overall score, with up to a 75% difference in individual scores (e.g. Writing 2.0).

After digging into the issue, they found that the power_whitelist_cfg.xml text file, usually located in the /vendor/etc folder, contained some interesting entries:

```xml
...
<Package name="com.futuremark.pcmark.android.benchmark">
    <Activity name="Common">
        <PERF_RES_POWERHAL_SPORTS_MODE Param1="1"/>
        <PERF_RES_GPU_FREQ_MIN Param1="0"/>
    </Activity>
</Package>
<Package name="com.antutu.ABenchMark">
    <Activity name="Common">
        <PERF_RES_POWERHAL_SPORTS_MODE Param1="1"/>
    </Activity>
</Package>
<Package name="com.antutu.benchmark.full">
    <Activity name="Common">
        <PERF_RES_POWERHAL_SPORTS_MODE Param1="1"/>
    </Activity>
</Package>
...
```

I just found that the UMIDIGI A5 Pro has similar parameters, but uses “CMD_SET_SPORTS_MODE” instead of “PERF_RES_POWERHAL_SPORTS_MODE”.
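If you want to check a whitelist file pulled from your own phone, the lookup can be sketched in a few lines of Python. This is only a minimal sketch under a few assumptions: the `<root>` wrapper in `SAMPLE` is hypothetical (the excerpt above does not show the real top-level element), the `com.example.notabenchmark` entry is made up for contrast, and I assume “CMD_SET_SPORTS_MODE” also takes a `Param1` attribute, which I have not verified.

```python
import xml.etree.ElementTree as ET

# Parameter names mentioned in this article for enabling "sports mode"
SPORTS_MODE_TAGS = {"PERF_RES_POWERHAL_SPORTS_MODE", "CMD_SET_SPORTS_MODE"}

# Sample input: the three packages come from the excerpt above.
# The <root> wrapper and com.example.notabenchmark are hypothetical.
SAMPLE = """
<root>
  <Package name="com.futuremark.pcmark.android.benchmark">
    <Activity name="Common">
      <PERF_RES_POWERHAL_SPORTS_MODE Param1="1"/>
      <PERF_RES_GPU_FREQ_MIN Param1="0"/>
    </Activity>
  </Package>
  <Package name="com.antutu.ABenchMark">
    <Activity name="Common">
      <PERF_RES_POWERHAL_SPORTS_MODE Param1="1"/>
    </Activity>
  </Package>
  <Package name="com.antutu.benchmark.full">
    <Activity name="Common">
      <PERF_RES_POWERHAL_SPORTS_MODE Param1="1"/>
    </Activity>
  </Package>
  <Package name="com.example.notabenchmark">
    <Activity name="Common">
      <PERF_RES_GPU_FREQ_MIN Param1="0"/>
    </Activity>
  </Package>
</root>
"""


def sports_mode_packages(root):
    """Map each package that enables sports mode to the activities doing so."""
    hits = {}
    for package in root.iter("Package"):
        for activity in package.iter("Activity"):
            for setting in activity:
                # Assumption: Param1="1" means "enabled" for both tag names
                if setting.tag in SPORTS_MODE_TAGS and setting.get("Param1") == "1":
                    hits.setdefault(package.get("name"), []).append(activity.get("name"))
    return hits


if __name__ == "__main__":
    for name, activities in sports_mode_packages(ET.fromstring(SAMPLE)).items():
        print(name, activities)
```

For a real file, something like `ET.parse("power_whitelist_cfg.xml").getroot()` should work in place of `ET.fromstring(SAMPLE)`, provided the file is well-formed XML.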

AnandTech went on to check other MediaTek phones from Oppo, Realme, Sony, Xiaomi, and iVoomi and they all had the same tricks!

Smartphone makers must find the right balance between performance and power consumption, and they do so by adjusting frequency and voltage with DVFS (dynamic voltage and frequency scaling) to ensure the best tradeoff. Apps listed in power_whitelist_cfg.xml are whitelisted in order to run at full performance. So in some ways, the benchmarks still represent the real performance of the smartphone if it were fitted with a large heatsink and/or phone fan 🙂

That’s basically what MediaTek’s answer is, plus the argument that everybody is doing it anyway:
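To make the idea concrete, here is a toy model of a whitelist overriding a DVFS policy. It is a sketch, not MediaTek's actual PowerHAL logic: the frequency steps, the `pick_frequency` function, and the load-based rule are all hypothetical, and only the package names come from the whitelist entries quoted earlier.

```python
# Hypothetical CPU frequency steps in MHz (not taken from any real chipset)
FREQ_STEPS_MHZ = [500, 1000, 1500, 2000]

# Package names from the whitelist entries quoted in this article
WHITELIST = {
    "com.futuremark.pcmark.android.benchmark",
    "com.antutu.ABenchMark",
    "com.antutu.benchmark.full",
}


def pick_frequency(package, load):
    """Toy DVFS policy: follow the load (0.0 to 1.0) unless the app is whitelisted."""
    max_freq = FREQ_STEPS_MHZ[-1]
    if package in WHITELIST:
        # Whitelisted apps skip the power/performance tradeoff entirely
        return max_freq
    # Otherwise pick the lowest step whose share of max frequency covers the load
    for freq in FREQ_STEPS_MHZ:
        if freq / max_freq >= load:
            return freq
    return max_freq


if __name__ == "__main__":
    # Same workload, different package name, different frequency
    print(pick_frequency("com.futuremark.pcmark.android.benchmark", 0.3))
    print(pick_frequency("com.example.anonymized", 0.3))
```

In this model, the same workload gets a different frequency depending only on the package name, which is why an anonymized build of PCMark was enough to expose the difference.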

> We want to make it clear to everyone that MediaTek stands behind our benchmarking practices. We also think this is a good time to share our thoughts on industry benchmarking practices and be transparent about how MediaTek approaches benchmarking, which has always been a complex topic.
>
> …
>
> MediaTek follows accepted industry standards and is confident that benchmarking tests accurately represent the capabilities of our chipsets. We work closely with global device makers when it comes to testing and benchmarking devices powered by our chipsets, but ultimately brands have the flexibility to configure their own devices as they see fit. Many companies design devices to run on the highest possible performance levels when benchmarking tests are running in order to show the full capabilities of the chipset. This reveals what the upper end of performance capabilities are on any given chipset.
>
> …
>
> We believe that showcasing the full capabilities of a chipset in benchmarking tests is in line with the practices of other companies and gives consumers an accurate picture of device performance.

So is that cheating, or should it just be accepted as business as usual or best practices? 🙂

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.
