XMOS, known for its high-performance voice interfaces, is joining the AIoT bandwagon with the announcement of Xcore.ai, a flexible and economical processor that delivers high-performance AI, DSP, control, and I/O in a single device.

IoT and AI have been two of the most talked-about fields of the last decade, and both have seen major innovation. Deep neural networks have become better, the cost of deploying IoT has dropped sharply, and, most importantly, both have had a significant impact on multiple industries. An interesting recent trend is the emergence of applications that merge AI and IoT into so-called AIoT applications. In these systems, IoT acts as the digital nervous system, while AI is the brain that makes the critical decisions controlling the whole system.

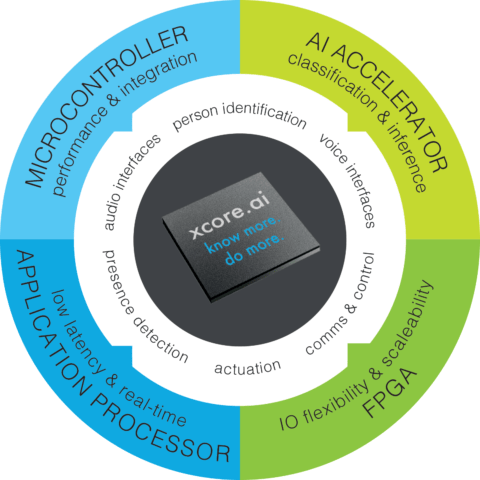

AIoT has led to the development of what we call AI processors or AI modules, which can be deployed at the edge for high-performance edge computing applications. Examples include the Google Coral TPU Accelerator and AI processors from the likes of MediaTek, Amlogic, Rockchip, and now XMOS with Xcore.ai.

Xcore.ai is a scalable, multi-core, general-purpose crossover processor, and it is the third generation of the Xcore architecture. The first generation of Xcore started as an “FPGA-like” chip with I/O flexibility and significant control processing for building differentiated products. The second generation added DSP capability, and the latest (third) generation adds machine learning capability to the Xcore platform.

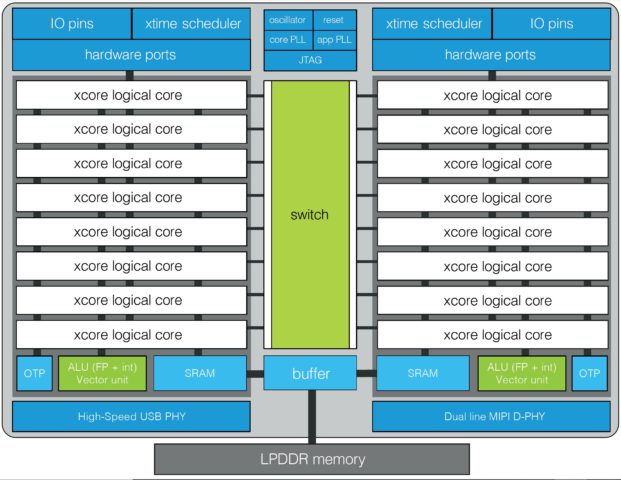

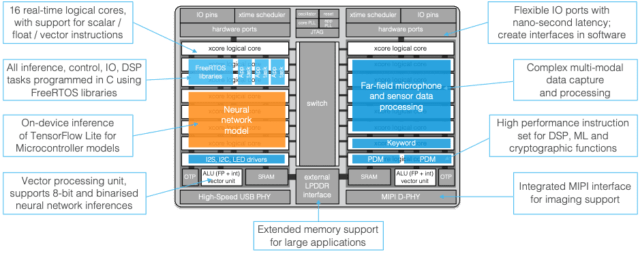

The building block of the Xcore is a tile, containing a RISC core with tightly coupled SRAM. In the second- and third-generation architectures, each processor has a dual-issue execution unit capable of executing instructions at twice the pipeline clock frequency. Execution is split over up to eight concurrent hardware threads, each capable of running software tasks that perform I/O, control, DSP, and AI processing.

Xcore is built on a RISC-like instruction set, with instructions for loading and storing values in SRAM and support for both 32-bit integer and floating-point instructions. The Xcore platform also supports a range of physical layer interfaces: USB and MIPI (receiver) are supported directly, and using GPIO pins it can communicate over SPI, QSPI, MII, I2S, I2C, PDM, and many more interfaces.

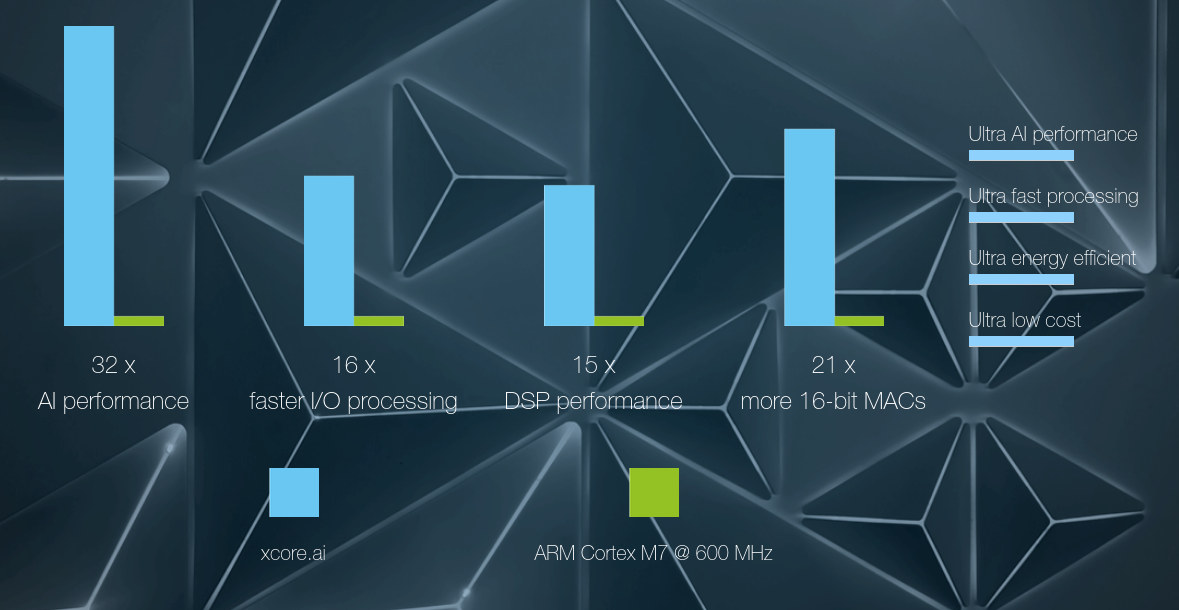

The Xcore architecture is scalable, which allows multiple Xcore devices to be stacked together to increase performance. A standard device like Xcore.ai contains two tiles (1 MByte of memory, up to 38.4 GMACC/s); stacking two of those devices gives 2 MByte of memory and up to 76.8 GMACC/s. This scalability is provided by the Xconnect interconnect, which offers up to 2 Gbit/s between adjoining chips.
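The scaling figures above are simple multiples of the per-device numbers. As a rough sketch, assuming ideal linear scaling with no interconnect overhead (an assumption the announcement does not confirm for real workloads), the totals for a stack of devices can be estimated as follows. The function name `stacked_capacity` is made up for this illustration.

```python
from typing import Tuple

# Per-device figures quoted in the article for a two-tile Xcore.ai.
MEMORY_MBYTE_PER_DEVICE = 1.0
PEAK_GMACC_PER_DEVICE = 38.4


def stacked_capacity(num_devices: int) -> Tuple[float, float]:
    """Return (total SRAM in MByte, peak GMACC/s) for num_devices chips.

    Assumes ideal linear scaling; real throughput also depends on how
    much traffic has to cross the interconnect between chips.
    """
    if num_devices < 1:
        raise ValueError("num_devices must be at least 1")
    return (MEMORY_MBYTE_PER_DEVICE * num_devices,
            PEAK_GMACC_PER_DEVICE * num_devices)


for n in (1, 2, 4):
    memory, gmacc = stacked_capacity(n)
    print(f"{n} device(s): {memory:.0f} MByte SRAM, up to {gmacc:.1f} GMACC/s")
```

These are peak numbers only. In practice, the 2 Gbit/s link between adjoining chips is likely to matter for workloads that move a lot of data from one device to the next.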

Xcore is fully programmable in C using industry-standard tools (LLVM compiler, TensorFlow Lite) and runtime firmware (for example, FreeRTOS).

Summary of Xcore.ai:

- Vector arithmetic of up to 38 billion multiply-accumulates per second.

- Complex arithmetic up to one million 512-point FFTs per second.

- 2 x 512 kByte on-chip SRAM, with an LPDDR interface for optional external memory.

- 128 GPIO, on-chip USB transceiver, and MIPI receiver.

- Supports deep neural networks that use binary values for activations and weights.
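To see why binary activations and weights are attractive on a small processor, here is a minimal sketch of the usual trick behind binarized networks: when every value is +1 or -1 and is stored as a single bit, a dot product reduces to an XOR followed by a bit count. This is a generic illustration of the technique, not XMOS's implementation, and the helper names `pack_bits` and `binary_dot` are invented for the example.

```python
def pack_bits(values):
    """Pack a sequence of +1/-1 values into an int; bit i is set when values[i] == +1."""
    word = 0
    for i, v in enumerate(values):
        if v not in (1, -1):
            raise ValueError("binary networks use only +1 and -1")
        if v == 1:
            word |= 1 << i
    return word


def binary_dot(a_bits, w_bits, n):
    """Dot product of two n-element +1/-1 vectors stored as packed bits.

    Each matching bit contributes +1 and each mismatching bit -1,
    so the result is n - 2 * (number of mismatching bits).
    """
    mismatches = bin(a_bits ^ w_bits).count("1")
    return n - 2 * mismatches


activations = [1, -1, 1, 1, -1, -1, 1, -1]
weights = [1, 1, -1, 1, -1, 1, 1, -1]
n = len(activations)

fast = binary_dot(pack_bits(activations), pack_bits(weights), n)
reference = sum(a * w for a, w in zip(activations, weights))
print(fast, reference)  # both values are 2
```

Because the multiplications disappear entirely, many weights fit in one machine word and can be processed in a single operation, which is what makes this style of network a good match for memory-constrained edge devices.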

Xcore.ai is not yet available, but product demos will be available from June 2020. For more technical details, you can download XMOS's technical whitepaper. More information is available on the product page, as well as the announcement page.

I enjoy writing about the latest news in the areas of embedded systems with a special focus on AI on edge, fog computing, and IoT. When not writing, I am working on some cool embedded projects or data science projects. Got a tip, freebies, launch, idea, gig, bear, hackathon (I love those), or leak? Contact me.
