Edge computing on the Raspberry Pi has had its ups and downs, especially now that everyone is pushing AI into everything. On its own, the Raspberry Pi isn't really capable of running AI applications reliably. Typical object detection on the Raspberry Pi runs at around 1–2 fps, depending on the nature of your model, because all the processing is done on the CPU.

AI accelerators came to the rescue to address the poor performance of AI applications on the Raspberry Pi. The Intel Neural Compute Stick 2 (NCS2) is one such accelerator, capable of around 8–15 fps depending on your application. The Myriad X VPU at the heart of the NCS2 can offer much more than the compute stick delivers, and the team behind DepthAI has exploited this to create DepthAI, a powerful AI module for edge computing.
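To put those frame rates in perspective for a robot or camera pipeline, it helps to convert them into time per frame. The short Python sketch below does that for the ranges quoted above; the helper name `frame_time_ms` is purely illustrative, and the figures are the rough, model-dependent estimates from the text, not benchmarks.

```python
def frame_time_ms(fps: float) -> float:
    """Time spent per frame, in milliseconds, at a given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


# Approximate frame-rate ranges quoted in the text (they vary with the model)
ranges = {
    "Raspberry Pi CPU": (1, 2),
    "Pi + Neural Compute Stick 2": (8, 15),
}

for name, (low, high) in ranges.items():
    # Higher fps means less time per frame, so the high fps gives the low bound
    print(f"{name}: {frame_time_ms(high):.0f}-{frame_time_ms(low):.0f} ms per frame")
```

Running it shows the CPU spending 500–1000 ms on each frame, while the NCS2 brings that down to roughly 67–125 ms.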

It is one thing to detect objects, but quite another to localize them in the physical world, an essential feature for mobile robots, and this is what DepthAI aims to deliver. DepthAI is an embedded platform built around the Myriad X VPU that combines depth perception and AI.

DepthAI was conceived by Luxonis, which entered it in the Microchip Get Launched 2019 design competition and the 2019 Hackaday Prize. The project was initially born from a desire to improve bike safety by building an AI-powered bike light that detects, and helps prevent, crashes from behind.

DepthAI uses the Myriad X to combine depth perception, object detection (neural inference), and object tracking, and exposes all of this through a simple, easy-to-use Python API.

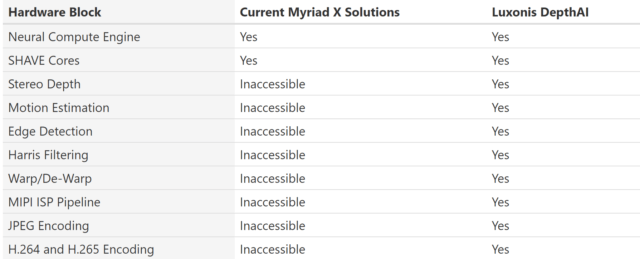

At the core of the device is the Myriad X SoM, the same chip that powers the Intel Neural Compute Stick 2. In the compute stick, however, some of the Myriad X's capabilities go unused; for example, its MIPI lanes and several other features, shown in the image below, are not exposed. Through custom integration, DepthAI makes use of the full Myriad X, taking advantage of its four trillion operations per second (4 TOPS) of vision processing capability.
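As a rough sense of scale, a peak figure like 4 TOPS can be divided by the frame rate to give an upper bound on the operations available per frame. This is a back-of-the-envelope sketch only: 4 TOPS is a headline peak figure, real neural-network throughput is lower, and the frame rates chosen below are illustrative.

```python
def ops_per_frame(peak_ops_per_second: float, fps: float) -> float:
    """Theoretical upper bound on operations available per frame at a given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return peak_ops_per_second / fps


PEAK_OPS = 4e12  # the 4 TOPS headline figure quoted for the Myriad X (peak, not sustained)

for fps in (25, 30, 60):
    gops = ops_per_frame(PEAK_OPS, fps) / 1e9  # convert to giga-operations
    print(f"{fps:>2} fps -> at most {gops:.1f} GOPs per frame")
```

At 25 fps, for example, that works out to at most 160 GOPs per frame, shared between stereo depth, neural inference, and everything else running on the chip.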

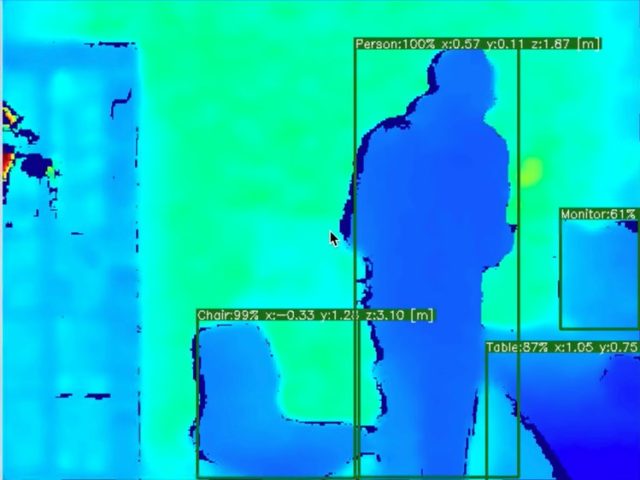

The module can localize objects in the physical world at 25 FPS, reporting their x, y, z (Cartesian) coordinates in meters. This is made possible by two MIPI cameras spaced apart to form a stereo pair. The module also comes with an accompanying RGB camera.
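The article doesn't detail how DepthAI computes these coordinates, but the textbook way to get metric x, y, z from a calibrated, rectified stereo pair is triangulation from disparity: depth is Z = f·B/d for focal length f (pixels), baseline B (meters), and disparity d (pixels), and X and Y then follow from the pinhole camera model. The Python sketch below assumes that standard model; `StereoCalibration`, `pixel_to_xyz`, and every number in it are hypothetical, not DepthAI's API or calibration.

```python
from dataclasses import dataclass


@dataclass
class StereoCalibration:
    """Hypothetical parameters for a rectified stereo pair (illustrative values only)."""
    focal_px: float    # focal length in pixels, shared by both rectified cameras
    baseline_m: float  # distance between the two camera centers, in meters
    cx: float          # principal point x, in pixels
    cy: float          # principal point y, in pixels


def pixel_to_xyz(calib: StereoCalibration, u: float, v: float, disparity_px: float):
    """Triangulate pixel (u, v) with the given disparity into camera-frame (X, Y, Z) in meters.

    Pinhole stereo model: Z = f * B / d, X = (u - cx) * Z / f, Y = (v - cy) * Z / f.
    Y follows the image convention here, so it grows downward.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive; zero disparity means the point is at infinity")
    z = calib.focal_px * calib.baseline_m / disparity_px  # depth from similar triangles
    x = (u - calib.cx) * z / calib.focal_px
    y = (v - calib.cy) * z / calib.focal_px
    return x, y, z


# Hypothetical calibration and detection: the center of a bounding box
# at pixel (720, 360) with a measured disparity of 30 px.
calib = StereoCalibration(focal_px=800.0, baseline_m=0.075, cx=640.0, cy=400.0)
x, y, z = pixel_to_xyz(calib, u=720.0, v=360.0, disparity_px=30.0)
print(f"X={x:.2f} m, Y={y:.2f} m, Z={z:.2f} m")  # prints X=0.20 m, Y=-0.10 m, Z=2.00 m
```

Because depth is inversely proportional to disparity, a one-pixel disparity error matters far more for distant objects than for near ones, which is one reason the spacing (baseline) between the two cameras affects the usable depth range.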

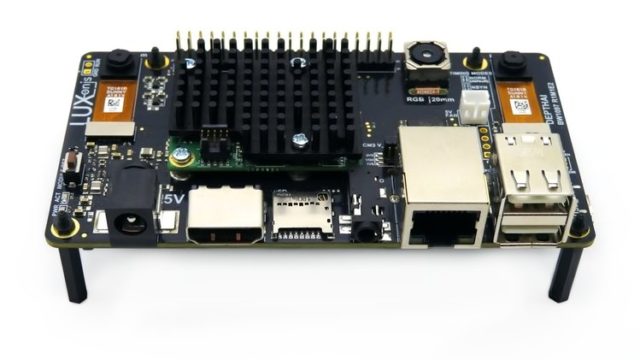

DepthAI comes as a system on module (SoM), with the idea of making it possible to integrate into any custom embedded platform. Aside from the module, DepthAI is also available in three editions:

- Fully-integrated solution with on-board cameras that work on boot-up for easy prototyping (powered by the Raspberry Pi Compute Module 3+)

- Raspberry Pi HAT with modular/remote cameras.

- USB3 interface that’s usable with any host.

The Raspberry Pi Compute Module Edition comes with everything you need: pre-calibrated on-board stereo cameras plus a 4K, 60 Hz color camera, and a microSD card with Raspbian and the DepthAI Python code set to run automatically on boot-up. The Pi HAT Edition lets you attach the module to the Raspberry Pi as a HAT, with the cameras connected to the HAT itself. The USB3 Edition lets you use DepthAI with any platform through a single USB connection.

Some of the features possible with DepthAI are:

- Object Localization

- Object Detection

- Depth Video or Image

- Color Video or Image

- Stereo Pair Video or Image

DepthAI works with OpenVINO for optimizing neural models, just like the NCS2. This allows you to train models with any popular framework, such as TensorFlow or Keras, and then use OpenVINO to optimize them to run efficiently and with low latency on DepthAI/Myriad X.
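Myriad X devices run networks in half precision (FP16), so part of preparing a model for them with the OpenVINO releases of the time was converting FP32 weights to FP16 (the Model Optimizer's `--data_type FP16` option). The snippet below does not use OpenVINO at all; it is a minimal illustration, using only Python's `struct` module, of the rounding that such a conversion introduces. The helper `to_fp16` and the weight values are hypothetical.

```python
import struct


def to_fp16(value: float) -> float:
    """Round a float to the nearest IEEE 754 half-precision (FP16) value and back."""
    # The "e" format code packs a half-precision float (Python 3.6+).
    # Values beyond the FP16 range (about +/-65504) raise OverflowError.
    return struct.unpack("<e", struct.pack("<e", value))[0]


# Hypothetical FP32 weights, standing in for a trained model's parameters
weights = [0.1, -0.3333333, 1.5, 0.0001234]

for w in weights:
    h = to_fp16(w)
    print(f"{w!r:>12} -> {h!r:<24} abs error {abs(w - h):.2e}")
```

Values such as 1.5 survive exactly, while 0.1 becomes 0.0999755859375. For most networks this loss is small, but how much accuracy a given model gives up is worth checking on your own validation data.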

DepthAI is currently crowdfunding on Crowd Supply. The SoM is available for $99, the USB3 Edition for $149, the HAT Edition for $149, and the Complete Version for $299. If the campaign is successful, shipping is expected to start in February 2020.

Support CNX Software! Donate via cryptocurrencies, become a Patron on Patreon, or purchase goods on Amazon or Aliexpress