CNXSoft: Guest post by Blu about setting up an arm64 toolchain on 64-bit Arm hardware running a 32-bit Arm (Armv7) rootfs.

Life is short and industry progress is never fast enough in areas we care about. That’s an observation most of us are familiar with. One would think that by now most aarch64 desktops would be running arm64 environments, with multi-arch support when needed.

Alas, as of late 2019, chromeOS on aarch64 is still shipping an aarch64 kernel and an armhf userspace. And despite the fine job by the good folks at chromebrew, an aarch64 chromeOS machine in dev mode, an otherwise excellent road-warrior ride, is stuck with 32-bit armhf. Is that a problem, some may ask? Yes, it is: aarch64 is the objectively better arm ISA outside of MCUs, from general-purpose code to all kinds of ISA extensions, SIMD in particular. That shows in contemporary compiler support and in the difference in quality of codegen. Particularly with the recent introduction of the ILP32 ABI for aarch64 (AKA arm64_32), and Thumb2 notwithstanding (unsupported under aarch64), soon there won’t be a good reason to build aarch32 code on an aarch64 machine.

As a long-time arm chromebook user, I have been using a sandboxed arm64 compiler on my chromebooks. A recent incident (read: author’s sloppiness) destroyed that sandbox on my daily ride while I was away from home, so I had to do it again from scratch, over a precious mobile data plan. This has led me to document the minimal set of steps required to set up the sandbox, and I’m sharing those with you. Please note that the steps are valid for any setup with a 3.7-or-newer aarch64 kernel and an armhf userspace, but in the case of chromeOS some extra constraints apply: the root fs is sternly immutable to the user.

Under chromeOS dev-mode a writable fs space is available under /usr/local, so this is where we’ll set up our sandbox.

```
$ cd /usr/local
$ mkdir root64
$ cd root64
```

We need to choose a good source of arm64 packages: any (non-rolling) distro with an up-to-date package base will do. In practice that means Debian or Ubuntu; we go with the latter due to its better refresh rate of packages. Next we need to decide which version of the compiler we want, as that will determine what system libraries we’ll need. For personal reasons I’ve decided upon gcc 8.2.0. Here’s the list of packages one needs to download from http://ports.ubuntu.com/ubuntu-ports/pool/main/ for the gcc version of choice:

```
-rw-r--r--. 1 chronos chronos 2180000 Apr 9 2018 binutils-aarch64-linux-gnu_2.30-15ubuntu1_arm64.deb
-rw-r--r--. 1 chronos chronos 2391032 Apr 9 2018 binutils-dev_2.30-15ubuntu1_arm64.deb
-rw-r--r--. 1 chronos chronos 5711228 Sep 18 2018 cpp-8_8.2.0-7ubuntu1_arm64.deb
-rw-r--r--. 1 chronos chronos 6465184 Sep 18 2018 gcc-8_8.2.0-7ubuntu1_arm64.deb
-rw-r--r--. 1 chronos chronos 428092 Apr 9 2018 libbinutils_2.30-15ubuntu1_arm64.deb
-rw-r--r--. 1 chronos chronos 2270660 Apr 17 2018 libc6_2.27-3ubuntu1_arm64.deb
-rw-r--r--. 1 chronos chronos 2051800 Apr 17 2018 libc6-dev_2.27-3ubuntu1_arm64.deb
-rw-r--r--. 1 chronos chronos 474272 Apr 17 2018 libc-bin_2.27-3ubuntu1_arm64.deb
-rw-r--r--. 1 chronos chronos 36228 Sep 18 2018 libcc1-0_8.2.0-7ubuntu1_arm64.deb
-rw-r--r--. 1 chronos chronos 58632 Apr 17 2018 libc-dev-bin_2.27-3ubuntu1_arm64.deb
-rw-r--r--. 1 chronos chronos 34292 Sep 18 2018 libgcc1_8.2.0-7ubuntu1_arm64.deb
-rw-r--r--. 1 chronos chronos 856036 Sep 18 2018 libgcc-8-dev_8.2.0-7ubuntu1_arm64.deb
-rw-r--r--. 1 chronos chronos 212856 Dec 2 2018 libgmp10_6.1.2+dfsg-4_arm64.deb
-rw-r--r--. 1 chronos chronos 520464 Aug 14 2018 libisl19_0.20-2_arm64.deb
-rw-r--r--. 1 chronos chronos 37156 Jan 24 2018 libmpc3_1.1.0-1_arm64.deb
-rw-r--r--. 1 chronos chronos 202380 Feb 8 2018 libmpfr6_4.0.1-1_arm64.deb
-rw-r--r--. 1 chronos chronos 371988 Sep 18 2018 libstdc++6_8.2.0-7ubuntu1_arm64.deb
-rw-r--r--. 1 chronos chronos 45430 Sep 13 2015 zlib1g_1.2.8.dfsg-2ubuntu4_arm64.deb
```

In case of difficulties locating those packages on the main Ubuntu repo, one can use the global registry http://ports.ubuntu.com/ubuntu-ports/ls-lR.gz.
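Once that index is downloaded, a package’s directory can be looked up by file name. The following is a minimal sketch, assuming the index follows the usual `ls -lR` layout (a `./dir:` header line followed by that directory’s entries); `find_in_index` is a hypothetical helper name, not part of any existing tool.

```shell
#!/bin/bash
# find_in_index FILENAME: read an `ls -lR` style listing on stdin and print
# the path of every entry whose name equals FILENAME.
# Assumption: directory headers look like "./pool/main/g/gcc-8:" and the
# file name is the last whitespace-separated field of each entry line.
find_in_index() {
    awk -v name="$1" '
        /:$/ { dir = substr($0, 1, length($0) - 1); next }  # remember the current directory
        $NF == name { print dir "/" name }                   # entry found in that directory
    '
}

# Typical use (not run here):
#   zcat ls-lR.gz | find_in_index gcc-8_8.2.0-7ubuntu1_arm64.deb
```

The printed path is relative to the directory that holds the index, so it can be appended to the repository URL to form the download link.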

Next we ‘deploy’ those packages. We can do it one by one, e.g. if we’re curious to check what’s in each package:

```
$ ar x package.deb
$ tar xf data.tar.xz
$ rm -v control.tar.{xz,gz} data.tar.xz debian-binary
```

Or en masse:

```
$ for i in *.deb; do ar x $i ; tar xf data.tar.xz ; rm -v control.tar.{xz,gz} data.tar.xz debian-binary ; done
```

That was the straightforward part. The next part is less so.

An aarch64 kernel can natively run statically-linked binaries, no problem. Such binaries are a rarity on desktop Linux, though; most of the binaries you’ll encounter or compile yourself will be dynamically linked. For each architecture supported by the platform, there must be a corresponding dynamic loader. On arm multi-arch nowadays those would be:

```
/lib/ld-linux-aarch64.so.1
/lib/ld-linux-armhf.so.3
```

For aarch64 and armhf, respectively; on non-multi-arch there would be only one, naturally. If you inquire about any given dynamically-linked binary, you’d see those loaders reported immediately after the ELF type:

```
$ file a.out # some freshly built armhf dynamically-loaded binary
a.out: ELF 32-bit LSB executable, ARM, EABI5 version 1 (SYSV), dynamically linked, interpreter /lib/ld-linux-armhf.so.3, for GNU/Linux 2.6.32, stripped
$ file a.out # same code built for aarch64
a.out: ELF 64-bit LSB pie executable ARM aarch64, version 1 (SYSV), dynamically linked, interpreter /lib/ld-linux-aarch64.so.1, for GNU/Linux 3.7.0, BuildID[sha1]=e999425cae14ba4fa0ad8bf44ad1c50bb32e012e, stripped
```

The part that reads “dynamically linked, interpreter /lib/ld-linux-armhf.so.3” tells what dynamic loader should be used to launch each binary, with path and all. As we cannot modify /lib on chromeOS, we cannot place an aarch64 loader there, so we will need to resort to other means.
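The requested loader can also be pulled out of that report programmatically, e.g. to decide whether a given binary needs the sandbox loader. A minimal sketch, assuming `file` output in the format shown above; `interp_of` is a hypothetical helper name:

```shell
#!/bin/bash
# interp_of: read one line of `file` output on stdin and print the
# dynamic loader ("interpreter") path it reports. Prints nothing when
# no ", interpreter <path>," field is present (e.g. a static binary).
interp_of() {
    sed -n 's/.*, interpreter \([^,]*\),.*/\1/p'
}

# Typical use (not run here):
#   file a.out | interp_of
```

The pattern relies on the interpreter field being followed by another comma-separated field, which holds for both sample reports above but is not guaranteed for every version of `file`.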

As many know, the gcc binary is just a facade that invokes a bunch of actual business binaries to carry out a build. In the context of our sandbox, those are:

```
/usr/local/root64/usr/lib/gcc/aarch64-linux-gnu/8/cc1
/usr/local/root64/usr/lib/gcc/aarch64-linux-gnu/8/collect2
/usr/local/root64/usr/bin/as
/usr/local/root64/usr/bin/ld
```

Now, the first two were delivered through our .deb packages, while the latter two are normally mere symlinks to the actual aarch64-linux-gnu-* binaries, but we don’t have those symlinks yet, and we don’t need them as symlinks anyway.
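A quick way to see which of these four components the unpacked packages actually delivered is to test for each path. A minimal sketch; `missing_tools` is a hypothetical helper name, and the relative paths are the ones listed above:

```shell
#!/bin/bash
# missing_tools ROOT: print every toolchain component gcc expects under
# ROOT that does not exist there yet.
missing_tools() {
    local root=$1 rel
    for rel in \
        usr/lib/gcc/aarch64-linux-gnu/8/cc1 \
        usr/lib/gcc/aarch64-linux-gnu/8/collect2 \
        usr/bin/as \
        usr/bin/ld
    do
        [ -e "$root/$rel" ] || echo "$root/$rel"
    done
}

# Typical use (not run here):
#   missing_tools /usr/local/root64
```

In the state described here, one would expect it to report only the `as` and `ld` entries.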

Each time our arm64 gcc tries to execute one of those, it will fail for lack of a system-wide loader for aarch64. So how do we solve that? Easily: we manually specify the loader that should be used with any given arm64 binary we want to run. So let’s shadow those binaries with scripts of the same names to do our bidding, while preserving the original binaries for cc1 and collect2 as .bak files at their original locations:

```
$ cd usr/lib/gcc/aarch64-linux-gnu/8
$ mv cc1 cc1.bak
$ mv collect2 collect2.bak
```

Eventually we want the following scripts at their corresponding locations:

- /usr/local/root64/usr/lib/gcc/aarch64-linux-gnu/8/cc1:

  ```
  #!/bin/bash
  # Run the real cc1 through the sandbox loader, forwarding all arguments.
  /usr/local/root64/lib/ld-linux-aarch64.so.1 /usr/local/root64/usr/lib/gcc/aarch64-linux-gnu/8/cc1.bak "$@"
  ```

- /usr/local/root64/usr/lib/gcc/aarch64-linux-gnu/8/collect2:

  ```
  #!/bin/bash
  /usr/local/root64/lib/ld-linux-aarch64.so.1 /usr/local/root64/usr/lib/gcc/aarch64-linux-gnu/8/collect2.bak "$@"
  ```

- /usr/local/root64/usr/bin/as:

  ```
  #!/bin/bash
  /usr/local/root64/lib/ld-linux-aarch64.so.1 /usr/local/root64/usr/bin/aarch64-linux-gnu-as "$@"
  ```

- /usr/local/root64/usr/bin/ld:

  ```
  #!/bin/bash
  /usr/local/root64/lib/ld-linux-aarch64.so.1 /usr/local/root64/usr/bin/aarch64-linux-gnu-ld "$@"
  ```
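Writing four near-identical scripts by hand invites typos, so they can also be generated. The following is a minimal sketch, not the author’s method: `make_wrapper` is a hypothetical helper, it assumes the paths contain no double quotes or `$` characters, and the scripts it writes use `exec` and a quoted `"$@"` so that arguments containing spaces survive.

```shell
#!/bin/bash
# make_wrapper LOADER TARGET OUT: write to OUT an executable script that
# runs TARGET through the dynamic loader LOADER, forwarding all arguments.
make_wrapper() {
    local loader=$1 target=$2 out=$3
    # The single-quoted format keeps "$@" literal in the generated script.
    printf '#!/bin/bash\nexec "%s" "%s" "$@"\n' "$loader" "$target" > "$out"
    chmod +x "$out"
}

# Typical use inside the sandbox (not run here):
#   root=/usr/local/root64
#   loader=$root/lib/ld-linux-aarch64.so.1
#   make_wrapper $loader $root/usr/bin/aarch64-linux-gnu-as $root/usr/bin/as
#   make_wrapper $loader $root/usr/bin/aarch64-linux-gnu-ld $root/usr/bin/ld
```

The same call covers cc1 and collect2 once the originals have been renamed to their .bak names.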

Last but not least, we need a gcc top-level script to set up some things for us (placed somewhere in PATH, ideally):

- gcc-8.sh:

  ```
  #!/bin/bash
  root=/usr/local/root64
  # Make the sandboxed tools and arm64 libraries visible to gcc and its helpers.
  export PATH=$root/usr/bin/:$PATH
  export LD_LIBRARY_PATH=$root/lib/aarch64-linux-gnu/:$root/usr/lib/aarch64-linux-gnu/
  $root/lib/aarch64-linux-gnu/ld-linux-aarch64.so.1 $root/usr/bin/gcc-8 "$@" --sysroot=$root
  ```

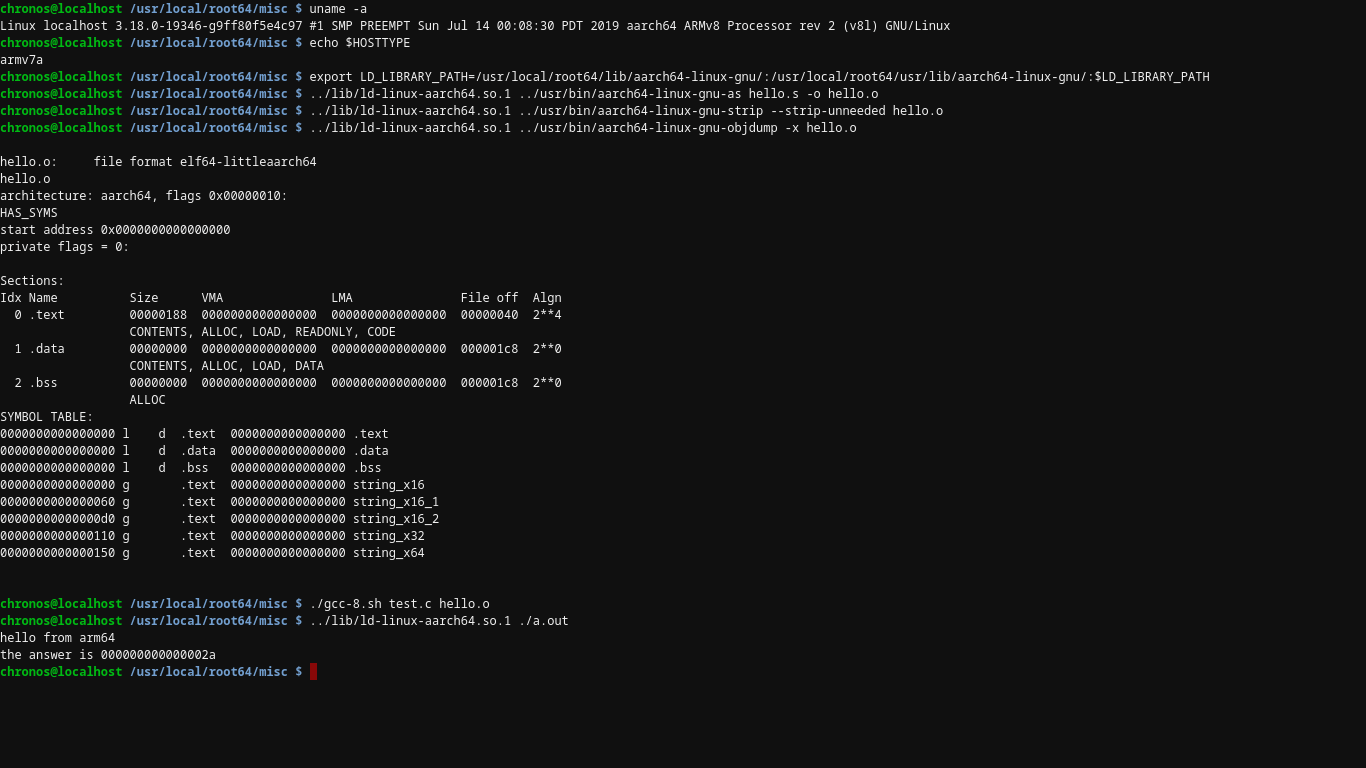

Let’s test our contraption with the following sample code:

```c
#include <stdio.h>

#if __LP64__
#define PLATFORM_STR "arm64"
#else
#define PLATFORM_STR "armhf"
#endif

int main(int argc, char** argv)
{
	fprintf(stdout, "hello from " PLATFORM_STR "\n");
	return 0;
}
```

First, with the native armhf gcc-8.2.0 compiler, courtesy of chromebrew:

```
chronos@localhost /usr/local/root64/misc $ gcc-8.2 test.c
chronos@localhost /usr/local/root64/misc $ ./a.out
hello from armhf
chronos@localhost /usr/local/root64/misc $
```

Next with the arm64 gcc-8.2.0 we just set up:

```
chronos@localhost /usr/local/root64/misc $ ./gcc-8.sh test.c
chronos@localhost /usr/local/root64/misc $ LD_LIBRARY_PATH=/usr/local/root64/lib/aarch64-linux-gnu/:/usr/local/root64/usr/lib/aarch64-linux-gnu/ ../lib/ld-linux-aarch64.so.1 ./a.out
hello from arm64
chronos@localhost /usr/local/root64/misc $
```

Please note that, since we have not exported an arm64-aware LD_LIBRARY_PATH yet, we have to specify it on the command line, right before invoking the aarch64 loader.

Well, that’s all, folks. I hope this is of use to some aarch64+armhf users out there.
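To avoid retyping that, the loader invocation can be wrapped in a small helper. A minimal sketch: `run64` and the `ROOT64` variable are hypothetical names, and instead of setting LD_LIBRARY_PATH it passes the library directories through the glibc loader’s `--library-path` option, so the armhf environment of the calling shell is left untouched. Unlike an exported LD_LIBRARY_PATH, that option does not carry over to child processes the program may start.

```shell
#!/bin/bash
# run64 PROGRAM [ARGS...]: run a dynamically linked arm64 PROGRAM through
# the sandbox loader. ROOT64 (hypothetical variable) overrides the sandbox
# location and defaults to the /usr/local/root64 used in this post.
run64() {
    local root=${ROOT64:-/usr/local/root64}
    "$root/lib/ld-linux-aarch64.so.1" \
        --library-path "$root/lib/aarch64-linux-gnu:$root/usr/lib/aarch64-linux-gnu" \
        "$@"
}

# Typical use (not run here):
#   run64 ./a.out
```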
