Linux EXT-4 File System Corruption & Attempted Recovery

There’s a file system corruption bug related to EXT-4 in Linux, and it happened to me a few times in Ubuntu 18.04. You are using your computer normally, then suddenly you can’t write anything to the drive, as the root partition has switched to read-only. Why? Here are some error messages:

```
[15882.773747] EXT4-fs (dm-4): re-mounted. Opts: (null)
[15898.557605] EXT4-fs error (device dm-4): ext4_iget:4831: inode #2113041: comm rm: bad extra_isize 20100 (inode size 256)
[15898.568305] EXT4-fs error (device dm-4): ext4_iget:4831: inode #2113042: comm rm: bad extra_isize 35148 (inode size 256)
[15898.569774] EXT4-fs error (device dm-4): ext4_lookup:1577: inode #2557277: comm rm: deleted inode referenced: 2113043
```
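On Ubuntu, /etc/fstab normally mounts the root partition with the `errors=remount-ro` option. That is why errors like the ones above switch it to read-only. The shell sketch below does two things: it checks whether a mount point is currently read-only by parsing /proc/mounts, and it counts EXT4 errors in a kernel log. The helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: check whether a filesystem has been remounted read-only, and count
# EXT4 error lines in a kernel log. Function names are hypothetical helpers.

# Print "ro" or "rw" for the filesystem mounted at a mount point.
# $1: mount point (default "/"); $2: mounts table (default /proc/mounts).
mount_mode() {
  local mnt="${1:-/}" mounts="${2:-/proc/mounts}"
  # Fields: device, mount point, fs type, options, dump, pass.
  # Keep the last entry for the mount point, as later mounts shadow earlier ones.
  awk -v m="$mnt" '
    $2 == m { opts = $4 }
    END {
      n = split(opts, o, ",")
      mode = ""
      for (i = 1; i <= n; i++) if (o[i] == "ro" || o[i] == "rw") mode = o[i]
      print mode
    }' "$mounts"
}

# Count "EXT4-fs error" lines read from stdin.
count_ext4_errors() {
  # grep -c prints 0 but exits 1 when nothing matches; ignore that status.
  grep -c 'EXT4-fs error' || true
}
```

For example, `mount_mode /` prints `ro` once the kernel has remounted the root partition. `sudo dmesg | count_ext4_errors` counts the error lines; reading the kernel log may require root. util-linux's `findmnt -no OPTIONS /` shows the same mount options.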

You then restart your PC and land at a prompt asking you to run:

```bash
e2fsck /dev/sda2
```

Replace /dev/sda2 with your own partition, and review the errors manually. Take note of the modified files, as you’ll likely have to fix your Ubuntu installation later on. The fix usually consists of reinstalling various packages:

```bash
sudo apt install --reinstall <package-name>
```
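You can script the search for the package behind each damaged file with `dpkg -S`, which prints the package that owns a given path. The following is a minimal sketch that assumes a Debian/Ubuntu system; the helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: map files that fsck reported as damaged to the packages that own them,
# then reinstall those packages. Helper names are hypothetical.

# Read `dpkg -S` output on stdin and print each owning package once.
# Handles "pkg: /path", "pkg:arch: /path" and "pkgA, pkgB: /path" lines.
owners_from_dpkg_output() {
  grep -v '^diversion by ' \
    | sed 's|: /.*$||' \
    | tr ',' '\n' \
    | sed 's/^ *//; s/ *$//' \
    | grep -v '^$' \
    | LC_ALL=C sort -u
}

# Reinstall the packages owning the given absolute paths (needs apt and sudo).
# Paths not owned by any package are reported by dpkg on stderr and skipped.
reinstall_owners() {
  local pkgs
  pkgs=$(dpkg -S "$@" | owners_from_dpkg_output)
  if [ -z "$pkgs" ]; then
    echo "no owning packages found" >&2
    return 1
  fi
  # Word splitting is intended: one argument per package name.
  # shellcheck disable=SC2086
  sudo apt install --reinstall $pkgs
}
```

Usage: `reinstall_owners /path/to/damaged/file ...`, passing the absolute paths noted from the fsck output.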

It happened to me two or three times in the past, and while it was a pain, I eventually recovered. This time, however, I was not so lucky. The system would not boot properly, but I could still SSH into it. I fixed a few issues, but there was a problem with the graphics drivers, and gdm3 refused to start because it was unable to open “EGL Display”. Three hours had passed with no solution in sight, so I decided to reinstall Ubuntu 18.04.

Backup Sluggishness

Which brings me to the main topic of this post: backups. I normally back up the files on my laptop once a week. Why only once a week? Because backing up slows down my computer considerably, especially during full backups as opposed to incremental ones. So I usually leave my laptop running on Wednesday night so the backup starts at midnight. Ideally it ends before I start working in the morning, but that’s not always the case. I’m using Duplicity through Deja Dup, Ubuntu’s default graphical backup tool, to back up files to a USB 3.0 drive directly connected to my laptop.

My backup only runs once a week, on Thursday, and the file system bug hit me on Tuesday evening. So before reinstalling Ubuntu, I decided to manually back up my full home directory to the USB 3.0 drive using tar with bzip2 compression as an extra precaution. I started at 22:00 and figured it would be done by the time I woke up. How naive of me! I had to wait until 13:00 the next day for the ~300GB tarball to be ready.

One of my mistakes was to use the single-threaded version of bzip2 with a command like:

```bash
tar cjvf backup.tar.bz2 /home/user
```

Instead of going with the faster multi-threaded bzip2 (lbzip2):

```bash
tar cvf backup.tar.bz2 --use-compress-program=lbzip2 /home/user
```

That would probably have finished before the morning, but it would still have taken many hours.
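The same idea fits in a small function. It uses lbzip2 (or pbzip2) when either is installed and falls back to single-threaded bzip2 otherwise. This sketch assumes GNU tar; the fallback order and the function names are assumptions.

```bash
#!/usr/bin/env bash
# Sketch: create a .tar.bz2 of a directory using a parallel bzip2 when one is
# installed. Function names and the fallback order are assumptions.

# Print the first available bzip2-compatible compressor, preferring parallel ones.
pick_bzip2() {
  local c
  for c in lbzip2 pbzip2 bzip2; do
    if command -v "$c" >/dev/null 2>&1; then
      echo "$c"
      return 0
    fi
  done
  echo "no bzip2 compressor found" >&2
  return 1
}

# Archive SRC (a directory) into DEST (a .tar.bz2 file).
backup_dir() {
  local src="${1%/}" dest="$2" comp
  comp=$(pick_bzip2) || return 1
  # -C keeps archive paths relative (e.g. "user/..." instead of "/home/user/...").
  tar -cf "$dest" --use-compress-program="$comp" \
    -C "$(dirname "$src")" "$(basename "$src")"
}
```

Usage: `backup_dir /home/user /media/usb/backup.tar.bz2`, where the destination path is hypothetical. lbzip2 and pbzip2 both write standard .bz2 data, so you can extract the archive with plain `tar xjf`. To extract in parallel, use `tar -xf backup.tar.bz2 --use-compress-program=lbzip2`.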

(Failing to) Reinstall Ubuntu 18.04

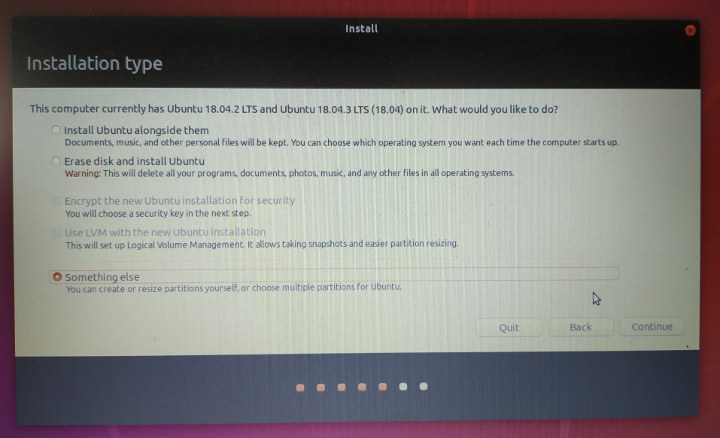

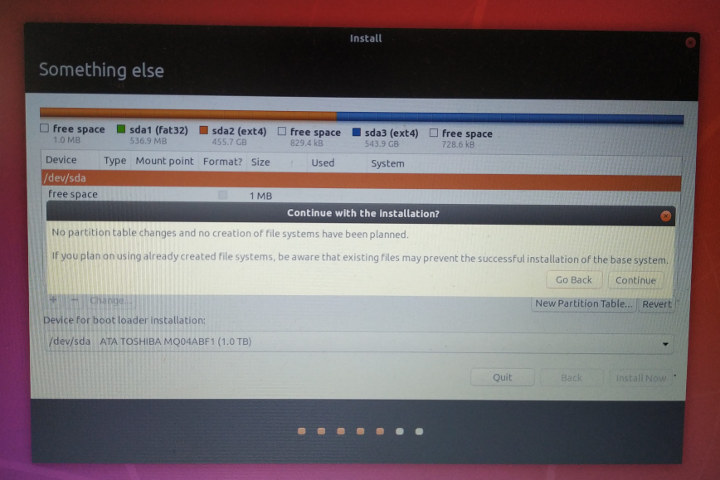

Time to reinstall Ubuntu 18.04. I did not search the Internet at first, as I assumed it would be straightforward, but the installer does not offer a nice-and-easy way to reinstall Ubuntu 18.04. Instead, you need to select “Something Else”

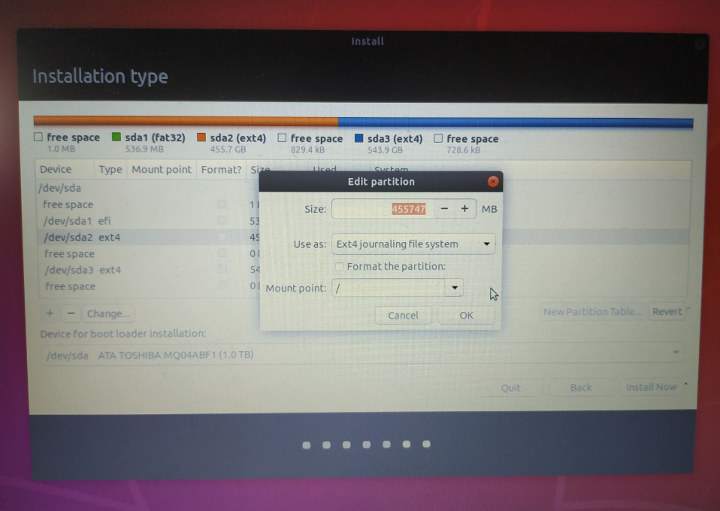

and do the configuration manually by selecting the partition you want to reinstall, selecting its mount point, and making sure “Format the partition” is NOT ticked.

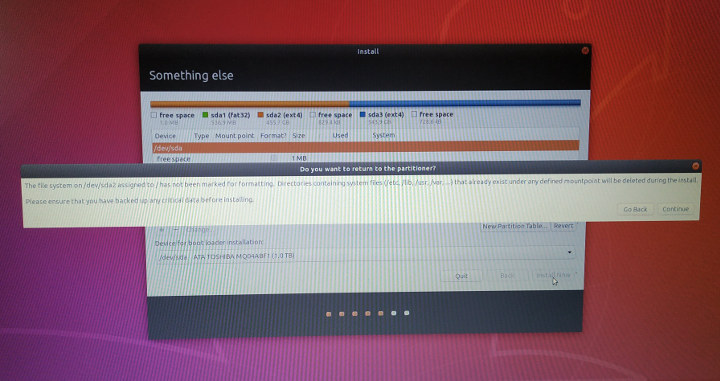

Click OK, and you’ll get a first warning that the partition has not been marked for formatting, and that you should back up any critical data before installation.

That’s followed by another warning that reinstalling Ubuntu may completely fail due to existing files.

The installation completed in my case, but the system would not boot. So I installed Ubuntu 18.04 on another partition of my hard drive instead. In hindsight, I wonder whether the Ubuntu 18.04.3 LTS release may have caused the reinstallation failure. I used the Ubuntu 18.04.2 ISO, and my system may already have been updated to Ubuntu 18.04.3.

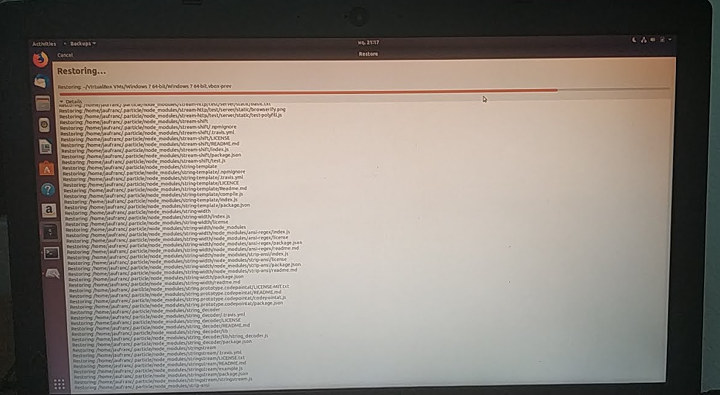

Fresh Ubuntu 18.04 Install and Restoring Backup – A Time-Consuming Endeavor

The installation on the other partition worked well, and I decided to restore my Duplicity backup in case I had forgotten some important files in my manual tar backup. I was hoping that restoring a backup would be faster, but I was wrong: I started at 13:00, and it completed around 10:00 the next morning.

It looks like Duplicity does not support multi-threaded compression. The good news is that I still have my previous home folder. I’ll be able to copy over recently modified files before deleting the old Ubuntu 18.04 installation and reclaiming the space.
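GNU find’s `-newermt` test can pick out the files that changed after the last Duplicity backup so you can bring them over. The minimal sketch below assumes GNU find and cp, and the function names are hypothetical. It only copies files modified after a given date, and it never overwrites a newer copy in the new home folder.

```bash
#!/usr/bin/env bash
# Sketch: copy files changed in the old home folder since the last backup into
# the new home folder. Assumes GNU find (-newermt) and GNU cp (--parents, -u).

# List regular files under DIR modified after SINCE (any date GNU find accepts).
changed_since() {
  local dir="$1" since="$2"
  (cd "$dir" && find . -type f -newermt "$since" -print | LC_ALL=C sort)
}

# Copy those files from OLD to NEW, keeping relative paths and timestamps.
# -u skips files whose copy in NEW is already as new or newer.
sync_changed() {
  local old="$1" new since="$3"
  new=$(cd "$2" && pwd) || return 1   # absolute path, since we cd into OLD below
  (cd "$old" && find . -type f -newermt "$since" -print0 |
    while IFS= read -r -d '' f; do
      cp -p -u --parents -- "$f" "$new"
    done)
}
```

Usage: `sync_changed /mnt/old-root/home/user "$HOME" 'YYYY-MM-DD'`. The old mount point is hypothetical, and the date is that of the last backup. Run `changed_since` first to see what would be copied.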

Lessons Learned, Potential Improvements, and Feedback

Anyway, I learned a few important lessons:

- Backups are very important, as you never know when a problem may happen

- Restoring a backup may be a time-consuming process

- Having backup hardware, like another PC or laptop, is critical if your work depends on having access to a computer. I also learned this the day the power supply of my PC blew up. Since it was under warranty, I would get a free replacement, but I had to wait three weeks because they had to send the old one to a shop in the capital city and get a new PSU back

- The Ubuntu reinstallation procedure is not optimal.

It took me three days to get back to normal use because of the slow backup creation and restoration. I still have to reinstall some of the apps I used, but the good thing is that they’ll keep their customizations and things like lists of recent files.

I’d like to improve my backup situation. To summarize, I’m currently using Duplicity / Deja Dup in Ubuntu 18.04 to back up weekly to a USB 3.0 drive directly attached to my laptop.

One software improvement I can think of is making sure the backup software uses multi-threaded compression and decompression. I could also be more careful when selecting which files to back up to reduce backup time and size, but that’s a time-consuming process I’d like to avoid.
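Ranking the home folder’s top-level directories by size makes file selection less tedious, because it shows where most of the backup volume goes. That can help decide what to add to Deja Dup’s “Folders to ignore” list. This is a sketch assuming GNU du, with a hypothetical function name.

```bash
#!/usr/bin/env bash
# Sketch: rank the top-level directories of a home folder (including hidden
# ones like .cache) by size, to spot candidates for backup exclusion.
# Assumes GNU du (-b for apparent size in bytes, -d for depth).

# Print the N largest directories directly under DIR as "<bytes>\t<path>".
largest_subdirs() {
  local dir="$1" n="${2:-10}"
  # -x: stay on one filesystem. du prints DIR itself last, so drop that line.
  du -x -b -d 1 -- "$dir" 2>/dev/null \
    | sed '$d' \
    | sort -rn \
    | head -n "$n"
}
```

Usage: `largest_subdirs "$HOME" 15`.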

Hardware-wise, I’m considering saving the files to an external NAS. On paper, Gigabit Ethernet (1 Gbps) is slower than USB 3.0 (5 Gbps), but a NAS may still help by lowering the I/O load on my laptop. Using a USB 3.0 SSD instead of a hard drive may also help, but it does not seem the most cost-effective solution. I’m not a big fan of backing up personal files to the cloud, but at the same time, I’d like that option to restore programs and configuration files. I just don’t know if that’s currently feasible.
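A back-of-the-envelope calculation with the figures from this post helps locate the bottleneck. The sketch uses decimal units (1 GB = 1000 MB) and theoretical link rates: 125 MB/s for Gigabit Ethernet and 625 MB/s for USB 3.0 (5 Gbps). Real-world rates are lower.

```bash
#!/usr/bin/env bash
# Back-of-the-envelope throughput maths using decimal units (1 GB = 1000 MB).

# Average rate in MB/s for SIZE_GB written over HOURS.
avg_rate() {
  awk -v gb="$1" -v h="$2" 'BEGIN { printf "%.1f\n", gb * 1000 / (h * 3600) }'
}

# Hours needed to move SIZE_GB at RATE MB/s.
hours_for() {
  awk -v gb="$1" -v r="$2" 'BEGIN { printf "%.1f\n", gb * 1000 / r / 3600 }'
}

avg_rate 300 15    # ~300 GB tarball written from 22:00 to 13:00 → 5.6
hours_for 300 125  # same data at Gigabit Ethernet's theoretical rate → 0.7
hours_for 300 625  # same data at USB 3.0's theoretical rate → 0.1
```

Writing the ~300GB tarball averaged only about 5.6 MB/s, and since the tarball is compressed, the rate of uncompressed input was higher. Both rates are far below either link. That suggests single-threaded compression, rather than the connection, was the main limit.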

So how do you handle backups in Linux on your side? What software & hardware combination do you use, and how often do you back up files from your personal computer / laptop?

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.
