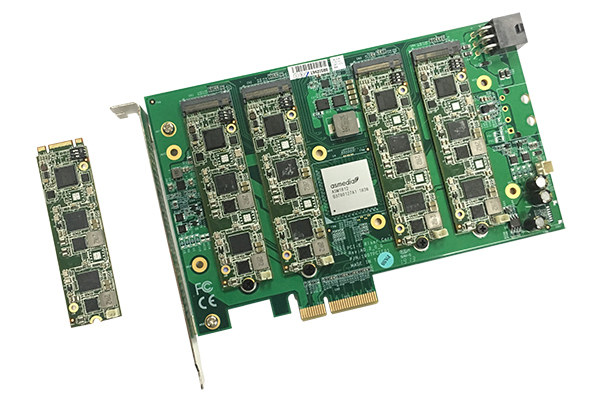

Movidius Myriad X is Intel’s latest vision processing unit (VPU). It was first unveiled in 2017 and has been available for evaluation in the Intel Neural Compute Stick 2 since the end of 2018. AAEON later launched its own AI Core XM2280 M.2 card, which is equipped with two Myriad X 2485 VPUs and delivers up to 200 fps (160 fps typical) of inference, thanks to over 2 TOPS of deep neural network (DNN) performance.

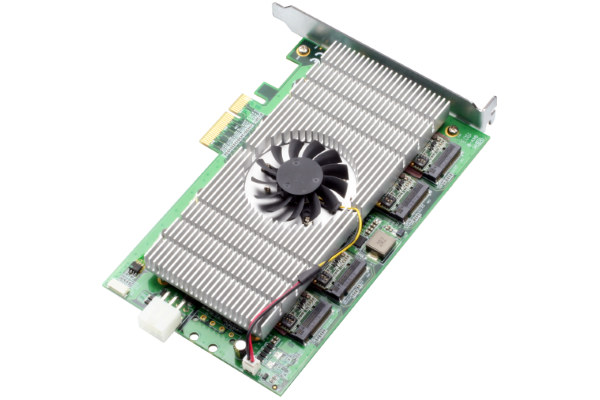

But what if you need even more performance? The company has now launched the AI Core XP4 and XP8 cards, which carry two or four AI Core XM2280 M.2 cards respectively and can be installed in any computer or workstation with a PCIe x4 slot.

AAEON AI Core XP4/XP8 specifications:

- 4x M.2 sockets for 2x or 4x M.2 2280 M-key cards, each with 2x Myriad X VPUs and 2x 4Gbit LPDDR4x memory

- ASMedia PCIe switch

- Cooling – Fan heatsink

- PCIe x4 standard full-length low profile slot card

- Dimensions – 167 x 111 mm

- Temperature Range – 0 to 50°C

- Certifications – CE/FCC
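Taking the spec list at face value, a short Python sketch can tally what each board variant adds up to. It assumes one 4 Gbit LPDDR4x chip per VPU, so the memory total is an aggregate across independent devices rather than a single shared pool; `board_totals` is a hypothetical helper name.

```python
from typing import Tuple

# Per-card figures from the spec list: each M.2 2280 card carries
# 2 Myriad X VPUs and 2 x 4 Gbit LPDDR4x chips (assumed one chip per VPU).
VPUS_PER_CARD = 2
MEM_CHIPS_PER_CARD = 2
GBIT_PER_CHIP = 4

# Number of M.2 cards populated on each board variant.
BOARDS = {"AI Core XP4": 2, "AI Core XP8": 4}


def board_totals(cards: int) -> Tuple[int, float]:
    """Return (total VPUs, total LPDDR4x memory in GB) for a board with `cards` M.2 cards."""
    vpus = cards * VPUS_PER_CARD
    mem_gbit = cards * MEM_CHIPS_PER_CARD * GBIT_PER_CHIP
    return vpus, mem_gbit / 8  # 8 bits per byte


for name, cards in BOARDS.items():
    vpus, mem_gb = board_totals(cards)
    print(f"{name}: {cards} cards, {vpus} VPUs, {mem_gb:g} GB LPDDR4x in total")
```

With these assumptions the XP4 works out to 4 VPUs and 2 GB of memory, and the XP8 to 8 VPUs and 4 GB, with each VPU only seeing its own 512 MB.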

The card is compatible with Intel Vision Accelerator Design SW SDK, supports TensorFlow, Caffe, and MXNET frameworks, and works with Ubuntu 16.04 and Windows 10.

I wrote this post to introduce a new product for A.I. applications, but also because I have a question that I’m unable to answer myself, and I hope readers with experience of this type of solution can shed some light on the subject. My main problem is that marketing materials often contain seemingly useless performance information.

According to the press release, combining eight Myriad X VPUs means a performance of up to 840 fps (560 typical) is possible with the AI Core XP8. That may be true, but why isn’t there any mention of the resolution?

Let’s look at TOPS (Trillions of Operations Per Second) numbers instead. Each Myriad X VPU can achieve 1 TOPS of deep neural network (DNN) performance and 4 TOPS of overall performance. So if we combine eight Myriad X chips, the total DNN performance should be 8 TOPS.
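The arithmetic above is a simple multiplication, sketched here in Python. Treat the result as an upper bound: the `efficiency` factor is a hypothetical knob for imperfect scaling, since all cards share a single PCIe x4 link through the switch and real workloads may not keep every VPU fully busy.

```python
# Per-chip figures quoted in the text for Myriad X.
DNN_TOPS_PER_VPU = 1.0      # deep neural network performance
OVERALL_TOPS_PER_VPU = 4.0  # overall performance per chip


def aggregate_tops(num_vpus: int, tops_per_vpu: float, efficiency: float = 1.0) -> float:
    """Naive aggregate TOPS assuming linear scaling with VPU count.

    `efficiency` is a hypothetical derating factor (1.0 = perfect scaling).
    """
    return num_vpus * tops_per_vpu * efficiency


print(aggregate_tops(8, DNN_TOPS_PER_VPU))      # 8.0  (XP8, DNN)
print(aggregate_tops(8, OVERALL_TOPS_PER_VPU))  # 32.0 (XP8, overall)
print(aggregate_tops(4, DNN_TOPS_PER_VPU))      # 4.0  (XP4, DNN)
```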

Fair enough, but then how does that apparently powerful PCIe card compare to the $70 Gyrfalcon 2803 Plai Plug we covered earlier this morning, which boasts 16.8 TOPS at just 700 mW? Does that mean Gyrfalcon is much better, with over twice the performance in a much smaller form factor, at much lower power consumption, and for only a fraction of the price? Probably not, as TOPS numbers depend on the bit depth: you’d get very different values with 4-bit, 8-bit, or 16-bit operations, and the bit depth is almost always omitted from press releases and product pages. The MobileNet demo for the Gyrfalcon USB neural compute stick showed inference at a little over 100 fps with 488 x 488 images, and we know the AI Core XP8 can handle up to 840 fps (560 fps typical), but again we can’t compare them directly since AAEON failed to mention the resolution and model used for their claim. I’ve asked AAEON for more details and will update this post once/if I get an answer.
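To see why bit depth matters so much, recall that peak TOPS is usually computed as 2 operations (a multiply and an add) per multiply-accumulate (MAC) unit per clock cycle. The Python sketch below uses a made-up accelerator with 1024 MAC units at 1 GHz, and assumes, purely for illustration, that halving the operand width lets the same silicon issue twice as many MACs per cycle. Real chips do not necessarily scale this cleanly, but the point stands: the same hardware can be advertised with very different TOPS figures depending on the precision quoted.

```python
def peak_tops(mac_units: int, clock_ghz: float) -> float:
    """Peak TOPS, counting each multiply-accumulate (MAC) as 2 operations."""
    ops_per_second = 2 * mac_units * clock_ghz * 1e9
    return ops_per_second / 1e12


# Hypothetical accelerator: 1024 MAC units at 1 GHz when running 8-bit math.
BASE_MACS_8BIT = 1024
CLOCK_GHZ = 1.0

# Illustrative assumption only: MACs per cycle scale inversely with operand width.
for bits in (16, 8, 4):
    macs = int(BASE_MACS_8BIT * 8 / bits)
    print(f"{bits:>2}-bit: {peak_tops(macs, CLOCK_GHZ):.3f} TOPS")
```

Under these assumptions the same chip would be quoted at roughly 1, 2, or 4 TOPS, a 4x spread from the bit depth alone.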

[Update June 13, 2019: AAEON provided more details about the resolution:

> The image input we tested with is resolution 640 x 480 pixel, running on MobileNetSSD topology, with 8x Myriad X, the FPS is up to 720 (92 x 8)

]
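With all the throughput figures quoted in this post, a quick Python sketch can normalize them per VPU and, as a very crude proxy, per input pixel. Two caveats apply: the Gyrfalcon demo used MobileNet while AAEON used MobileNetSSD, and detection networks usually resize frames to a fixed input size, so input megapixels per second does not measure actual compute. The "a little over 100 fps" Gyrfalcon figure is rounded down to 100, and `megapixels_per_second` is a hypothetical helper. The sketch also shows that 92 x 8 gives 736, not 720, so the per-VPU value from AAEON’s answer is either 90 or 92 fps.

```python
# Throughput figures quoted in this post: (label, fps, number of VPUs).
QUOTES = [
    ("AI Core XM2280, peak", 200, 2),
    ("AI Core XM2280, typical", 160, 2),
    ("AI Core XP8 press release, peak", 840, 8),
    ("AI Core XP8 press release, typical", 560, 8),
    ("AI Core XP8, AAEON update (640x480, MobileNetSSD)", 720, 8),
]

for label, fps, vpus in QUOTES:
    print(f"{label}: {fps / vpus:g} fps per VPU")

# AAEON quotes "up to 720 (92 x 8)", but the product does not match the total.
print(92 * 8)  # 736


def megapixels_per_second(fps: float, width: int, height: int) -> float:
    """Crude input-pixel throughput; ignores any resizing done inside the model."""
    return fps * width * height / 1e6


gyrfalcon = megapixels_per_second(100, 488, 488)  # "a little over 100 fps" demo
xp8 = megapixels_per_second(720, 640, 480)        # AAEON's updated figure
print(f"Gyrfalcon demo: ~{gyrfalcon:.1f} Mpx/s, AI Core XP8: ~{xp8:.1f} Mpx/s")
```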

So my question is: is there a way to estimate the performance from publicly available information before purchase, or is the only way to buy the hardware and benchmark each device with identical models?
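If benchmarking is the only reliable answer, the measurement should at least be done the same way on every device. Here is a generic Python timing harness, offered as a sketch: `measure_fps` and `fake_infer` are hypothetical names, and in practice you would wrap the actual inference call from each vendor’s SDK (for example the Intel Vision Accelerator Design SW SDK for the AAEON cards) in a function with the same one-argument signature, feeding it identical frames and the same model.

```python
import statistics
import time
from typing import Callable, Sequence


def measure_fps(infer: Callable[[object], object], inputs: Sequence[object],
                warmup: int = 10, runs: int = 100) -> dict:
    """Time `infer` on the same inputs and report throughput and median latency.

    `infer` is any callable that runs one synchronous inference.
    """
    # Warm-up runs absorb one-off costs (model upload, caches, clock ramp-up).
    for i in range(warmup):
        infer(inputs[i % len(inputs)])

    latencies = []
    start = time.perf_counter()
    for i in range(runs):
        t0 = time.perf_counter()
        infer(inputs[i % len(inputs)])
        latencies.append(time.perf_counter() - t0)
    total = time.perf_counter() - start

    return {
        "fps": runs / total,
        "median_latency_ms": statistics.median(latencies) * 1000,
    }


if __name__ == "__main__":
    # Hypothetical stand-in for a real device: sleeps ~5 ms per "inference".
    def fake_infer(frame):
        time.sleep(0.005)
        return frame

    frames = [bytes(640 * 480 * 3)] * 4  # dummy 640x480 RGB frames
    print(measure_fps(fake_infer, frames, warmup=2, runs=20))
```

Note that this loop keeps only one request in flight at a time. Aggregate figures for multi-VPU cards presumably come from keeping several requests running in parallel across the VPUs, so for a fair throughput comparison you would run several such loops concurrently or use the SDK’s asynchronous API.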

AAEON AI Core XP4 and XP8 can be pre-ordered from the AAEON Store for $379 and $649 respectively, with delivery scheduled for July. There may also be a few more details on the product page.
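Putting the prices next to the quoted TOPS figures gives a dollars-per-TOPS number, sketched below in Python with a hypothetical `dollars_per_tops` helper. It uses 1 DNN TOPS per Myriad X VPU for the AAEON cards and the advertised 16.8 TOPS for the Gyrfalcon stick. The Gyrfalcon figure comes out roughly 20 times cheaper per TOPS, but since the bit depth behind each TOPS number is unknown, this compares marketing figures rather than real performance.

```python
def dollars_per_tops(price_usd: float, tops: float) -> float:
    """Price divided by the vendor-quoted TOPS figure."""
    return price_usd / tops


# (price in USD, quoted TOPS) from this post; Myriad X uses 1 DNN TOPS per VPU.
PRODUCTS = {
    "AAEON AI Core XP4 (4 VPUs)": (379, 4 * 1.0),
    "AAEON AI Core XP8 (8 VPUs)": (649, 8 * 1.0),
    "Gyrfalcon 2803 Plai Plug": (70, 16.8),
}

for name, (price, tops) in PRODUCTS.items():
    print(f"{name}: ${dollars_per_tops(price, tops):.2f} per quoted TOPS")
```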

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.