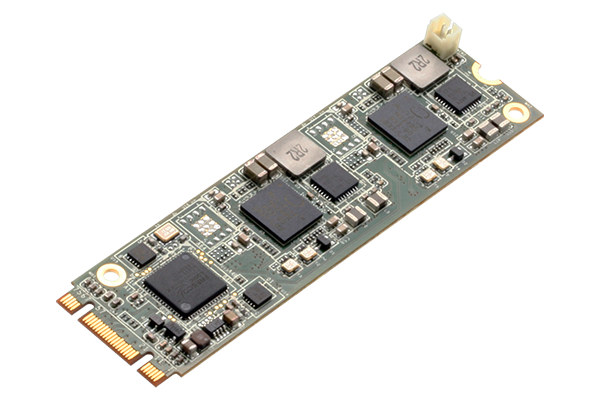

AAEON released UP AI Core mPCIe card with a Myriad 2 VPU (Vision Processing Unit) last year. But the company also has an AI Core X family powered by the more powerful Myriad X VPU with the latest member being AI Core XM2280 M.2 card featuring not one, but two Myriad X 2485 VPUs coupled with 1GB LPDDR4 RAM (512MB x2).

The card supports Intel OpenVINO toolkit v4 or greater, and is compatible with Tensorflow and Caffe AI frameworks.

AI Core XM2280 M.2 specifications:

- VPU – 2x Intel Movidius Myriad X VPU, MA2485

- System Memory – 2x 4Gbit LPDDR4

- Host Interface – M.2 connector

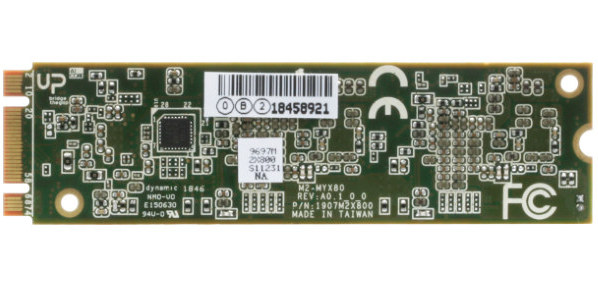

- Dimensions – 80 x 22 mm (M.2 M+B key form factor)

- Certification – CE/FCC Class A

- Operating Temperature – 0~50°C

- Operating Humidity – 10%~80%RH, non-condensing

The card works with Intel Vision Accelerator Design SW SDK available for Ubuntu 16.04, and Windows 10. Thanks to the two Myriad X VPU’s, the card is capable of up to 200 fps (160 fps typical) inferences, and delivers over 2 trillion floating point operations per second (TOPS).

The card works with Intel Vision Accelerator Design SW SDK available for Ubuntu 16.04, and Windows 10. Thanks to the two Myriad X VPU’s, the card is capable of up to 200 fps (160 fps typical) inferences, and delivers over 2 trillion floating point operations per second (TOPS).

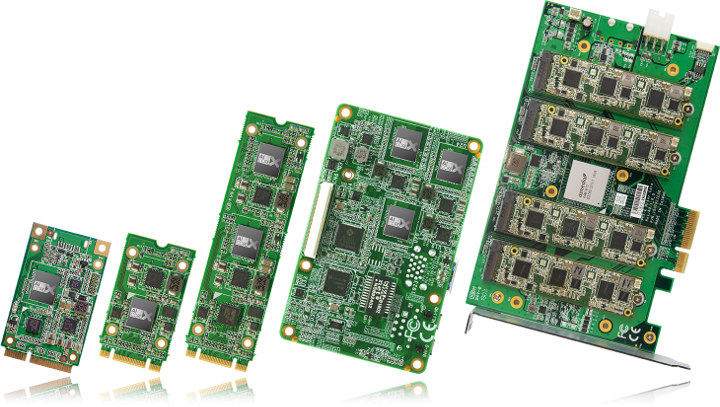

Pricing and availability are not known at the time of writing, but eventually it might be possible to purchase the card via the product page. The AI Core X product page reveals a total of 6 cards with Myriad X VPU are in the pipe from UP AI Core XM2242 M.2 card with one VPU, and up to UP AI Core XP 8MX PCIe x4 card with 8 Myriad X 2485 VPU’s and 4GB RAM.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.

Support CNX Software! Donate via cryptocurrencies, become a Patron on Patreon, or purchase goods on Amazon or Aliexpress