NVIDIA's Tegra X1, an octa-core Arm processor with a 256-core Maxwell GPU, was introduced in 2015. The processor powers the popular NVIDIA Shield Android TV box and is found in the Jetson TX1 development board, which still costs $500 and is approaching end-of-life.

The company has now introduced a much cheaper board, the Jetson Nano Developer Kit, offered for just $99. It is not exactly powered by Tegra X1, however, but by what appears to be a cost-down version of the processor with four Arm Cortex-A57 cores clocked at 1.43 GHz and a 128-core Maxwell GPU.

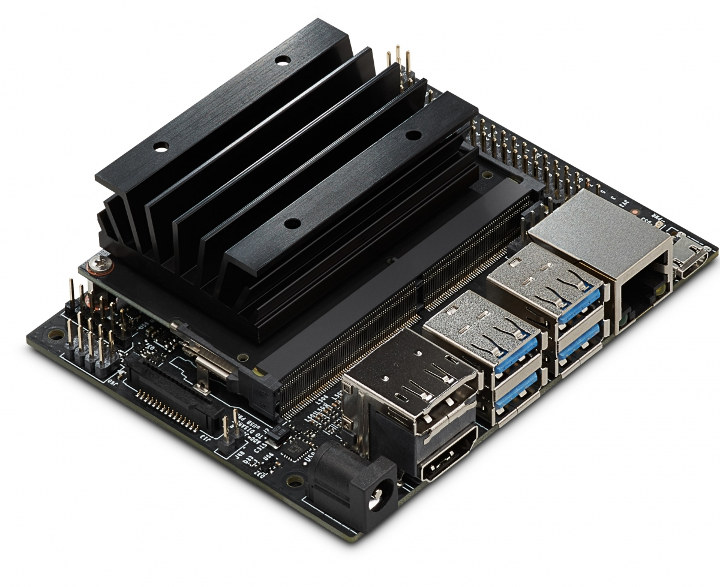

Jetson Nano developer kit specifications:

- Jetson Nano CPU Module

    - 128-core Maxwell GPU

    - Quad-core Arm Cortex-A57 processor @ 1.43 GHz

    - System Memory – 4GB 64-bit LPDDR4 @ 25.6 GB/s

    - Storage – microSD card slot (devkit) or 16GB eMMC flash (production)

    - Video Encode – 4K @ 30 | 4x 1080p @ 30 | 9x 720p @ 30 (H.264/H.265)

    - Video Decode – 4K @ 60 | 2x 4K @ 30 | 8x 1080p @ 30 | 18x 720p @ 30 (H.264/H.265)

    - Dimensions – 70 x 45 mm

- Baseboard

    - 260-pin SO-DIMM connector for Jetson Nano module

    - Video Output – HDMI 2.0 and eDP 1.4 (video only)

    - Connectivity – Gigabit Ethernet (RJ45) + 4-pin PoE header

    - USB – 4x USB 3.0 ports, 1x USB 2.0 Micro-B port for power or device mode

    - Camera I/F – 1x MIPI CSI-2 D-PHY interface compatible with Leopard Imaging LI-IMX219-MIPI-FF-NANO camera module and Raspberry Pi Camera Module V2

    - Expansion

        - M.2 Key E socket (PCIe x1, USB 2.0, UART, I2S, and I2C) for wireless networking cards

        - 40-pin expansion header with GPIO, I2C, I2S, SPI, UART signals

        - 8-pin button header with system power, reset, and force recovery related signals

    - Misc – Power LED, 4-pin fan header

    - Power Supply – 5V/4A via DC barrel jack or 5V/2A via micro USB port; optional PoE support

- Dimensions – 100 x 80 x 29 mm
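Some of the figures in the specifications can be sanity-checked with simple arithmetic. The Python sketch below is only an illustration: it assumes the 25.6 GB/s memory bandwidth comes from a 64-bit bus running at 3200 MT/s (the data rate is inferred, not listed above), and that 4K, 1080p, and 720p mean 3840x2160, 1920x1080, and 1280x720. All function and constant names are made up for this example.

```python
# Sanity-check sketch for the spec list. Values marked "assumed" are not in the specs.

BUS_WIDTH_BITS = 64        # from the spec list
TRANSFER_RATE_MTS = 3200   # assumed LPDDR4 data rate, in mega-transfers per second

# Assumed pixel dimensions for the resolution names used in the spec list
RESOLUTIONS = {"4K": (3840, 2160), "1080p": (1920, 1080), "720p": (1280, 720)}

# (number of streams, resolution, frames per second), copied from the spec list
ENCODE_MODES = [(1, "4K", 30), (4, "1080p", 30), (9, "720p", 30)]
DECODE_MODES = [(1, "4K", 60), (2, "4K", 30), (8, "1080p", 30), (18, "720p", 30)]


def memory_bandwidth_gbs(bus_width_bits, transfer_rate_mts):
    """Peak bandwidth in GB/s: bytes per transfer times transfers per second."""
    return bus_width_bits / 8 * transfer_rate_mts / 1000


def pixel_rate(streams, resolution, fps):
    """Total pixels per second processed across all streams."""
    width, height = RESOLUTIONS[resolution]
    return streams * width * height * fps


if __name__ == "__main__":
    print(f"Memory bandwidth: {memory_bandwidth_gbs(BUS_WIDTH_BITS, TRANSFER_RATE_MTS)} GB/s")
    for label, modes in (("Encode", ENCODE_MODES), ("Decode", DECODE_MODES)):
        for streams, resolution, fps in modes:
            rate = pixel_rate(streams, resolution, fps) / 1e6
            print(f"{label}: {streams}x {resolution} @ {fps} fps = {rate:.1f} Mpixel/s")
```

Under these assumptions, every encode mode works out to the same pixel rate, and every decode mode to exactly twice that, which suggests the listed stream counts simply divide a fixed pixel budget.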

Jetson Nano runs full desktop Ubuntu out of the box and is supported by JetPack 4.2, alongside Jetson AGX Xavier and Jetson TX2. You’ll find hardware and software documentation on the developer page, and some AI benchmarks as well as other details in the blog post announcing the launch of the kit and module.

The company explains that its new low-cost “AI computer delivers 472 GFLOPS of compute performance for running modern AI workloads and is highly power-efficient, consuming as little as 5 watts”.
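The 472 GFLOPS figure can be reproduced with back-of-the-envelope arithmetic. The sketch below rests on several assumptions that the quote does not state: that the number refers to FP16 throughput, that each CUDA core completes one fused multiply-add (counted as 2 FLOPs) per clock, that FP16 runs at twice the FP32 rate, and that the GPU is clocked at about 921.6 MHz. The names are hypothetical and only serve the illustration.

```python
# Back-of-the-envelope peak throughput estimate. Values marked "assumed"
# are not taken from the article and may not match NVIDIA's own accounting.

CUDA_CORES = 128            # from the specifications
FLOPS_PER_FMA = 2           # one fused multiply-add counts as two operations
FP16_RATE_MULTIPLIER = 2    # assumed: FP16 at twice the FP32 rate
GPU_CLOCK_GHZ = 0.9216      # assumed maximum GPU clock


def peak_gflops(cores, clock_ghz, flops_per_cycle=FLOPS_PER_FMA,
                rate_multiplier=FP16_RATE_MULTIPLIER):
    """Theoretical peak in GFLOPS: cores * clock (GHz) * FLOPs per core per cycle."""
    return cores * clock_ghz * flops_per_cycle * rate_multiplier


if __name__ == "__main__":
    fp16 = peak_gflops(CUDA_CORES, GPU_CLOCK_GHZ)
    fp32 = peak_gflops(CUDA_CORES, GPU_CLOCK_GHZ, rate_multiplier=1)
    print(f"Estimated FP16 peak: {fp16:.1f} GFLOPS")
    print(f"Estimated FP32 peak: {fp32:.1f} GFLOPS")
```

This is a theoretical peak at the assumed maximum clock, so it should not be combined with the 5-watt figure, which likely corresponds to a lower-clocked power mode.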

While the Jetson Nano module found in the development kit only supports microSD card storage, NVIDIA will also offer a production-ready Jetson Nano module with 16GB eMMC flash that will start selling for $129 in June.

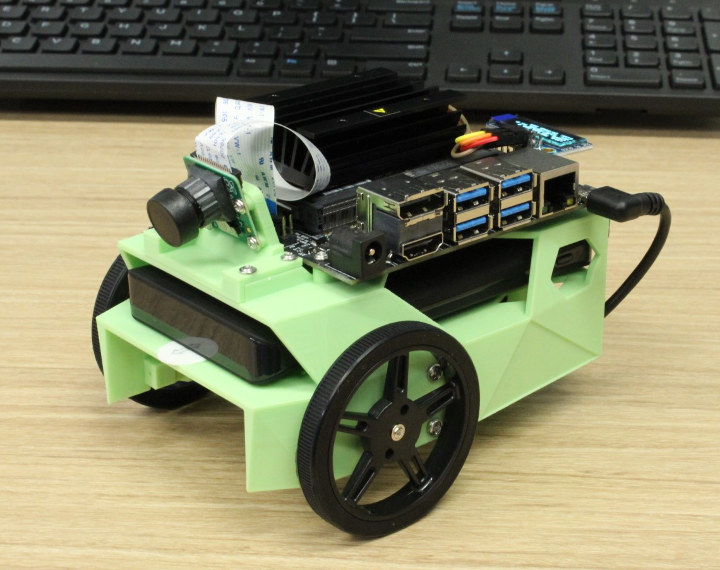

NVIDIA Jetson Nano targets AI projects such as mobile robots and drones, digital assistants, automated appliances, and more. To make it even easier to get started with robotics, NVIDIA also designed Jetbot, an educational open source AI robotics platform. All resources will be available in a dedicated GitHub repo by the end of this month.

The NVIDIA Jetson Nano developer kit is up for pre-order on Seeed Studio, Arrow, and other websites for $99, with shipping currently scheduled for April 12, 2019. Jetbot will be sold for $249, including the Jetson Nano developer kit.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager and starting to write daily news and reviews full time later in 2011.
