In the x86 world, one operating system image can usually run on all hardware thanks to clearly defined instruction sets and hardware and software requirements. Arm provides more flexibility in terms of peripherals, while having a fixed set of instructions for a given architecture (e.g. Armv8, Armv7…), and this led to fragmentation: in the past you had to customize your software with board files and other tweaks, and provide one binary per board. With device trees, things improved a bit, but there are still few images that will run on multiple boards without modifications.

RISC-V provides even more flexibility than Arm, since designers can modify the instruction set itself, adding or removing instructions as they see fit for their application. One can easily imagine how this can lead to a complete mess, with binary code only running on a subset of RISC-V platforms, and lots of compiler options needed to build the code for a particular RISC-V SoC.
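To make the "subset of platforms" problem concrete, here is a minimal Python sketch of a compatibility check between the ISA string a binary was built for (the kind of string passed to a compiler's `-march` option, e.g. `rv64imac`) and the ISA string a platform claims. The function names `parse_isa` and `can_run` are made up for illustration, and the parsing is deliberately simplified: it assumes lowercase-style strings, multi-letter extensions separated by underscores, and no version suffixes.

```python
from __future__ import annotations


def parse_isa(isa: str) -> tuple[int, frozenset[str]]:
    """Split an ISA string such as 'rv64imac_zicsr' into (xlen, extensions).

    Simplifying assumptions (not the full naming rules from the spec):
    multi-letter extensions are underscore-separated, and version
    suffixes such as '2p0' are not handled.
    """
    isa = isa.lower()
    if not isa.startswith("rv"):
        raise ValueError(f"not a RISC-V ISA string: {isa!r}")
    head, *multi_letter = isa.split("_")
    xlen = head[2:4]
    if xlen not in ("32", "64"):
        raise ValueError(f"unsupported base width in {isa!r}")
    extensions: set[str] = set()
    for letter in head[4:]:
        if letter == "g":
            # 'g' is shorthand for the general-purpose set i, m, a, f, d
            extensions.update("imafd")
        else:
            extensions.add(letter)
    extensions.update(name for name in multi_letter if name)
    return int(xlen), frozenset(extensions)


def can_run(binary_isa: str, platform_isa: str) -> bool:
    """True if every extension the binary needs is offered by the platform."""
    bin_xlen, bin_ext = parse_isa(binary_isa)
    plat_xlen, plat_ext = parse_isa(platform_isa)
    return bin_xlen == plat_xlen and bin_ext <= plat_ext


print(can_run("rv64imac", "rv64gc"))          # binary needs less than the platform offers
print(can_run("rv64gc", "rv64imac"))          # binary needs f and d, platform lacks them
print(can_run("rv64imac_xvendor", "rv64gc"))  # hypothetical vendor extension
```

A binary built for a hypothetical vendor extension (`xvendor` above) only runs on platforms that claim that same extension, which is exactly the kind of fragmentation the article is worried about.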

Brian Bailey, Technology Editor/EDA for Semiconductor Engineering, goes into detail about the challenges of RISC-V compliance, and I’ll try to summarize the key points in this post.

Allen Baum, system architect at Esperanto Technologies and chair of the RISC-V Compliance Task Group, explains:

RISC-V is an open-source standard ISA with exceptional modularity and extensibility. Anyone can build an implementation and there are no license fees, except for commercial use of the trademarks.

…

Implementers are free to add custom extensions to boost capabilities and performance, while at the same time don’t have to include features that aren’t needed

…

Unconstrained flexibility, however, can lead to incompatibilities that could fragment the customer base and eliminate the incentives for cooperative ecosystem development.

That defines the issue, especially since RISC-V is not controlled by one or two companies as in the Arm and x86 worlds. Multiple companies will be designing their own RISC-V products, so they’ll each have to make sure their implementation complies with the specification. That’s why the RISC-V Compliance Task Group is working on developing RISC-V compliance tests in order to define rules to ensure software compatibility.

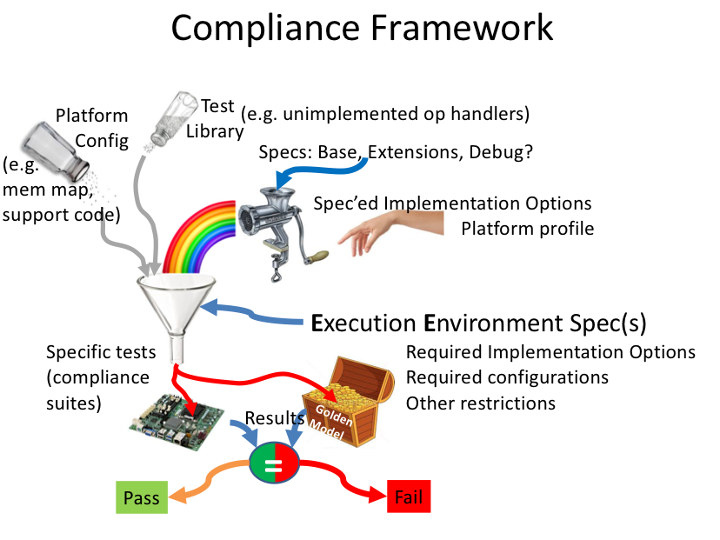

The RISC-V compliance framework is said to have three major elements:

- A modular set of test suites that exercise all aspects of the ISA

- Golden reference signatures that define correct execution results

- Frameworks that select and configure appropriate test suites based on both platform requirements and claimed device capabilities
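The three elements listed above can be sketched in a few lines of Python. This is only an illustration of the idea, not the actual framework: the class `ComplianceTest`, the functions `select_tests` and `run_compliance`, the test names, and the signature values are all hypothetical, and the "device" is a stub that returns canned memory dumps.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class ComplianceTest:
    name: str                          # hypothetical test name
    required_ext: str                  # extension the test exercises, e.g. "m"
    golden_signature: tuple[str, ...]  # expected result words (made-up values)


def select_tests(tests: Iterable[ComplianceTest],
                 claimed_exts: set[str]) -> list[ComplianceTest]:
    """Keep only the tests that match the capabilities the device claims."""
    return [t for t in tests if t.required_ext in claimed_exts]


def run_compliance(tests: Iterable[ComplianceTest],
                   claimed_exts: set[str],
                   run_on_dut: Callable[[str], Iterable[str]]) -> dict[str, str]:
    """Run each selected test and compare its signature with the golden one."""
    results: dict[str, str] = {}
    for test in select_tests(tests, claimed_exts):
        dut_signature = tuple(run_on_dut(test.name))
        passed = dut_signature == test.golden_signature
        results[test.name] = "PASS" if passed else "FAIL"
    return results


# Modular test suite with golden reference signatures (illustrative values).
TESTS = [
    ComplianceTest("add_basic", "i", ("00000003", "ffffffff")),
    ComplianceTest("mul_basic", "m", ("00000006",)),
    ComplianceTest("c_addi_basic", "c", ("00000002",)),
]


def fake_dut(test_name: str) -> list[str]:
    """Stand-in for a real device under test; its multiplier is 'buggy'."""
    dumps = {
        "add_basic": ["00000003", "ffffffff"],
        "mul_basic": ["00000007"],
    }
    return dumps[test_name]


# The device claims only the I and M extensions, so the C test is skipped.
report = run_compliance(TESTS, {"i", "m"}, fake_dut)
for name, verdict in report.items():
    print(f"{name}: {verdict}")
```

Keeping the golden signatures as data, separate from the selection logic, is what would let the same suite be reused across devices that claim different sets of extensions.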

Running the tests will report fail/pass results for each test. The framework is only part of the verification process, as explained in the documentation:

The goal of compliance tests is to check whether the processor under development meets the open RISC-V standards or not. It is considered as non-functional testing meaning that it doesn’t substitute for design verification. This can be interpreted as testing to check all important aspects of the specification but without focusing on details, for example, on all possible values of instruction operands or all combinations of possible registers.

The result that compliance tests provide to the user is an assurance that the specification has been interpreted correctly and the design under test (DUT) can be declared as RISC-V compliant.

The tools are still a work in progress, and the tests to be performed still need to be defined. They will go beyond just ISA compliance; for example, the TileLink cache-coherent interconnect fabric may have to be added to the compliance tests. Those tools will help make sure processors are compliant with the basic RISC-V specifications, but it’s unclear how all the RISC-V extensions that may be vendor-specific will be handled, and some may even end up in the standard RISC-V compliance tests.

Thanks to Blu for the tip.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.
