Rockchip RK3399Pro was announced as an updated version of the RK3399 processor with an NPU (Neural Processing Unit) capable of delivering 2.4 TOPS for faster A.I. workloads such as face or object recognition.

There have been some delays because of a redesign of the processor that placed the NPU’s RAM on the PCB instead of on-chip for cost reasons. Eventually we got more details about RK3399Pro, and today I also received a 15-page presentation with more information about the software and the processor itself.

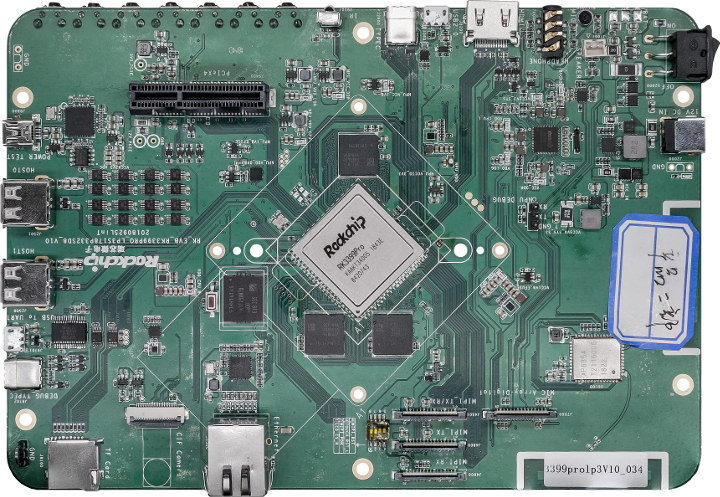

But even more interesting, this is the first time I’ve seen Rockchip’s official RK3399Pro EVB (Evaluation Board). The guys at Khadas uploaded a video explaining a bit more about the board and showcasing the NPU performance, measured at up to 3.0 TOPS, with an object recognition demo and a “body feature” detection demo (for lack of a better term), both running on Android 8.0.

Eventually, Shenzhen Wesion (Khadas) will launch their own RK3399Pro board; in the meantime, their Khadas Edge-1S is now “available” on Indiegogo.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager and starting to write daily news and reviews full time later in 2011.
