We’ve previously seen several clusters made of Raspberry Pi boards, such as a 16-board RPi Zero cluster prototype and the BitScope Blade with 40 Raspberry Pi boards. The latter now even offers solutions for up to 1,000 nodes in a 42U rack.

Circumference offers another option, with either 8 or 32 Raspberry Pi 3 (B+) boards managed by a UDOO x86 board acting as a dedicated front-end processor (FEP), in a design billed as a “Datacenter-in-a-Box”.

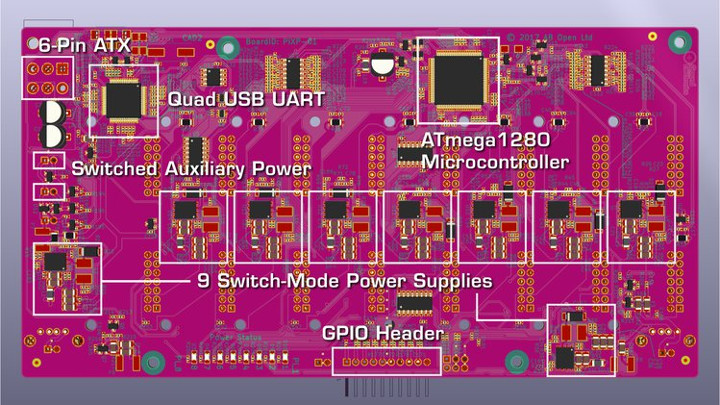

Key features and specifications:

- Compute nodes – 8x / 32x Raspberry Pi 3 B+ boards, for up to 128 64-bit cores clocked at 1.4 GHz

- Backplane
  - MCU – Microchip ATmega1280 8-bit AVR microcontroller
  - Serial comms – FTDI FT4232 quad USB-to-UART bridge
  - Switched-mode power supply units (SMPSUs):
    - 8x / 32x software-controlled (one per compute node)
    - 1x / 4x always-on (microcontroller)
  - Hardware monitoring:
    - 8x / 32x compute node energy
    - 2x / 8x supply voltage
    - 2x / 8x temperature
  - Remote console – 8x / 32x (1x / 4x UARTs, each multiplexed 8 ways)
  - Ethernet switch power – 2x / 8x software-controlled
  - Cooling – 1x / 4x software-controlled fans
  - Auxiliary power – 2x / 8x software-controlled 12 VDC ports
  - Expansion – 8x / 32x digital I/O pins (3x / 12x PWM-capable)

- Networking
  - 2x / 8x 5-port Gigabit Ethernet switches (software power control)
  - 10x / 50x Gigabit Ethernet ports in total (the C100 has two additional always-on switches in its base)

- FEP – UDOO x86 Ultra board with a quad-core Intel Pentium N3710 processor (up to 2.56 GHz) and 3x Gigabit Ethernet

- C100 (32+1) model only
  - 32x LED compute node power indicators
  - 6x LED matrix displays for status
  - 1x additional always-on fan in the base

- Dimensions & weight:
  - C100 – 37 x 30 x 40 cm; 12 kg
  - C25 – 17 x 17 x 30 cm; 2.2 kg
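The per-model figures in the list follow from a few fixed ratios: four cores per Raspberry Pi 3 B+, five ports per switch, and, as inferred from the C25 and C100 numbers, eight nodes, one console UART, and two switches per backplane. The Python sketch below derives the totals from those ratios. The `Model` class and `console_route()` helper are hypothetical, and the node-to-UART mapping assumes 0-based node numbering with eight consecutive nodes per backplane, which the project hasn't documented.

```python
from dataclasses import dataclass

CORES_PER_PI = 4         # Raspberry Pi 3 B+: quad-core Cortex-A53
PORTS_PER_SWITCH = 5     # 5-port Gigabit Ethernet switches
NODES_PER_BACKPLANE = 8  # one console UART per backplane, multiplexed 8 ways
SWITCHES_PER_BACKPLANE = 2  # inferred from 2x (C25) / 8x (C100) switches


@dataclass(frozen=True)
class Model:
    """Hypothetical description of a Circumference model."""
    name: str
    backplanes: int
    extra_switches: int = 0  # always-on switches in the C100 base

    @property
    def nodes(self) -> int:
        return self.backplanes * NODES_PER_BACKPLANE

    @property
    def cores(self) -> int:
        return self.nodes * CORES_PER_PI

    @property
    def switches(self) -> int:
        # Software-controlled switches on the backplanes plus any base switches
        return self.backplanes * SWITCHES_PER_BACKPLANE + self.extra_switches

    @property
    def switch_ports(self) -> int:
        return self.switches * PORTS_PER_SWITCH


def console_route(node: int, model: Model) -> tuple[int, int]:
    """Hypothetical mapping of a 0-based node index to (backplane UART, mux channel)."""
    if not 0 <= node < model.nodes:
        raise ValueError(f"{model.name} has nodes 0..{model.nodes - 1}")
    # Assumes nodes 0-7 sit on the first backplane, 8-15 on the second, and so on
    return divmod(node, NODES_PER_BACKPLANE)


C25 = Model("C25", backplanes=1)
C100 = Model("C100", backplanes=4, extra_switches=2)
```

With these ratios, the C25 works out to 8 nodes, 32 cores, and 10 switch ports, and the C100 to 32 nodes, 128 cores, and 50 ports, matching the specifications. Under the assumed numbering, `console_route(19, C100)` returns `(2, 3)`: node 19 would be reached through the third backplane's UART on mux channel 3.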

The backplane firmware has been developed with the Arduino IDE and the Wiring C libraries, so it will be “hackable” by users once the source code is released. The same applies to the front panel board used in the C100 model, which is based on a Microchip ATmega328 MCU.

The UDOO x86 Ultra board runs a Linux distribution (apparently Ubuntu) and supports cluster control out of the box, thanks to a daemon and a command-line utility (CLI), as well as Python and MQTT APIs.
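The MQTT API itself isn't described in the announcement, so the following is only a sketch of what scripting node power over MQTT could look like. The topic layout (`circumference/node/<n>/power`), the JSON payload, and the `node_power_message()` helper are all hypothetical; only the general pattern of publishing a small command message to a per-node topic is meant to carry over.

```python
import json

# HYPOTHETICAL topic prefix and actions: the real Circumference MQTT namespace
# and command set are not documented in this article.
TOPIC_PREFIX = "circumference"
VALID_ACTIONS = {"on", "off", "cycle"}


def node_power_message(node: int, action: str, num_nodes: int = 32) -> tuple[str, str]:
    """Build a (topic, payload) pair for a hypothetical node power command.

    num_nodes defaults to 32 (C100); use 8 for the C25.
    """
    if not 0 <= node < num_nodes:
        raise ValueError(f"node must be in 0..{num_nodes - 1}")
    if action not in VALID_ACTIONS:
        raise ValueError(f"action must be one of {sorted(VALID_ACTIONS)}")
    topic = f"{TOPIC_PREFIX}/node/{node}/power"
    payload = json.dumps({"action": action})
    return topic, payload


topic, payload = node_power_message(3, "on")
```

Here `topic` is `circumference/node/3/power` and `payload` is `{"action": "on"}`. Once the real topic names are published, a pair like this could be handed to an MQTT client, for instance `client.publish(topic, payload)` with the paho-mqtt library.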

Hardware design files, firmware, and software source code will be made available once the hardware for the main pledge levels has shipped.

Circumference C25 and C100 are offered as kits with the following:

- Custom PCBAs:
  - 1 or 4 backplane(s) depending on model
  - Front panel (C100 only)

- 2x (C25) / 10x (C100) 5-port Gigabit Ethernet switches

- 1x UDOO x86 dual-gigabit Ethernet adapter

- All internal network and USB cabling

- Power switch and cabling

- Fans and guard

- Laser-cut enclosure parts and fasteners; the C100 also includes a 2020-profile extruded aluminum frame

What you don’t get are the Raspberry Pi 3 (B+) and UDOO x86 (Ultra) boards, SSDs and/or micro SD cards, and the power supply, which you need to purchase separately. This probably means you could also replace the RPi 3 boards with other mechanically and electrically compatible boards such as Rock64 or Renegade, or even mix them (TBC).

The solution can be used for cloud, HPC, and distributed systems development, as well as for testing and education.

The project has just launched on Crowd Supply, aiming to raise $40,000 for mass production. Rewards start at $549 for the C25 (8+1) model and $2,599 for the C100 (32+1) model, with an optional $80 120 W power supply for the C25 only; as mentioned above, neither includes the Raspberry Pi or UDOO boards. Shipping is free worldwide and planned for the end of January 2019, except for the more expensive early-access pledges, which are scheduled to ship at the end of November.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.
