SolidRun MACCHIATOBin is a mini-ITX board powered by a Marvell ARMADA 8040 quad-core Cortex-A72 processor clocked at up to 2.0 GHz. It is designed for networking and storage applications, with 10 Gbps, 2.5 Gbps, and 1 Gbps Ethernet interfaces as well as three SATA ports. The company is now taking orders for the board (FCC waiver required), with prices starting at $349 with 4GB RAM.

MACCHIATOBin board specifications:

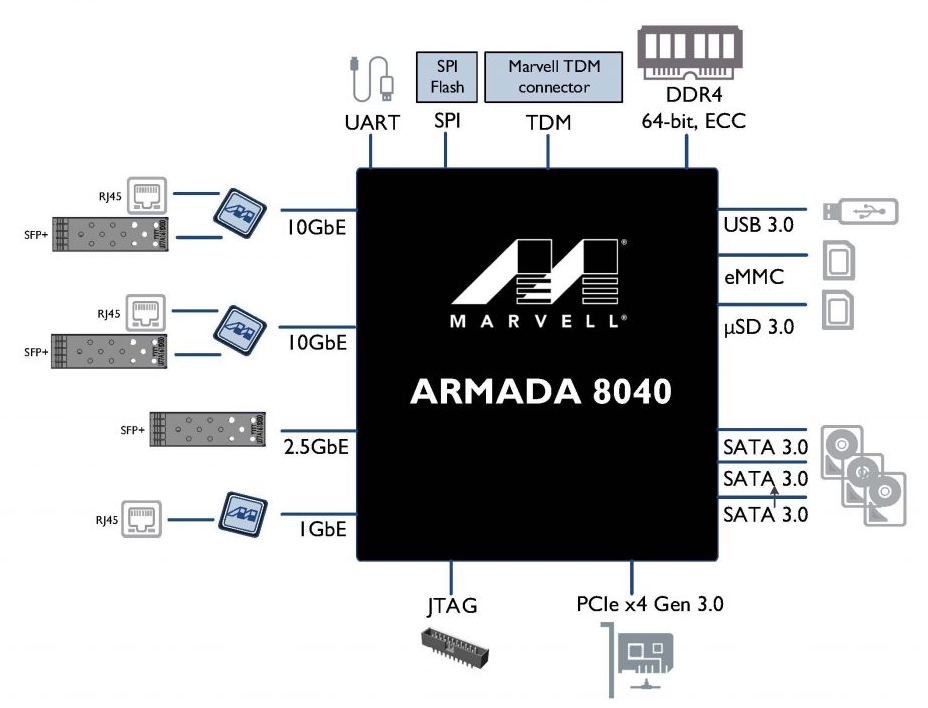

- SoC – ARMADA 8040 (88F8040) quad core Cortex A72 processor @ up to 2.0 GHz with accelerators (packet processor, security engine, DMA engines, XOR engines for RAID 5/6)

- System Memory – 1x DDR4 DIMM with optional ECC and single/dual chip select support; up to 16GB RAM

- Storage – 3x SATA 3.0 ports, micro SD slot, SPI flash, eMMC flash

- Connectivity – 2x 10Gbps Ethernet via copper or SFP, 2.5Gbps via SFP, 1x Gigabit Ethernet via copper

- Expansion – 1x PCIe-x4 3.0 slot, Marvell TDM module header

- USB – 1x USB 3.0 port, 2x USB 2.0 headers (internal), 1x USB-C port for Marvell Modular Chip (MoChi) interfaces (MCI)

- Debugging – 20-pin connector for CPU JTAG debugger, 1x micro USB port for serial console, 2x UART headers

- Misc – Battery for RTC, reset header, reset button, boot and frequency selection, fan header

- Power Supply – 12V DC via power jack or ATX power supply

- Dimensions – Mini-ITX form factor (170 mm x 170 mm)

The board ships with either 4GB or 16GB DDR4 memory, a micro USB cable for debugging, 3 heatsinks, an optional 110V or 220V AC to 12V DC power adapter, and an optional 8GB micro SD card. The company also offers a standard mini-ITX case for the board. On the software side, the board supports mainline Linux or Linux 4.4.x, mainline U-Boot or U-Boot 2015.11, UEFI (Linaro UEFI tree), Yocto 2.1, SUSE Linux, netmap, DPDK, OpenDataPlane (ODP), and OpenFastPath. You'll find software and hardware documentation in the Wiki.

The Wiki actually shows the board for $299 without any memory, but on the order page you can only order a version with 4GB RAM for $349, or one with 16GB RAM for $498, with the optional micro SD card and power adapter bringing the price up to $518.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.
