GPU compute promises to deliver much better performance compared to CPU compute for application such a computer vision and machine learning, but the problem is that many developers may not have the right skills or time to leverage APIs such as OpenCL. So ARM decided to write their own ARM Compute library and has now released it under an MIT license.

The functions found in the library include:

- Basic arithmetic, mathematical, and binary operator functions

- Color manipulation (conversion, channel extraction, and more)

- Convolution filters (Sobel, Gaussian, and more)

- Canny Edge, Harris corners, optical flow, and more

- Pyramids (such as Laplacians)

- HOG (Histogram of Oriented Gradients)

- SVM (Support Vector Machines)

- H/SGEMM (Half and Single precision General Matrix Multiply)

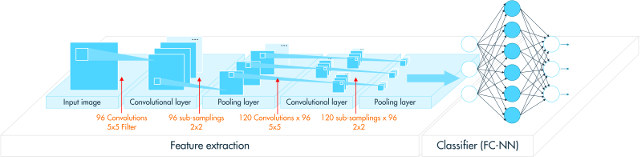

- Convolutional Neural Networks building blocks (Activation, Convolution, Fully connected, Locally connected, Normalization, Pooling, Soft-max)

The library works on Linux, Android or bare metal on armv7a (32bit) or arm64-v8a (64bit) architecture, and makes use of NEON, OpenCL, or NEON + OpenCL. You’ll need an OpenCL capable GPU, so all Mali-4xx GPUs won’t be fully supported, and you need an SoC with Mali-T6xx, T-7xx, T-8xx, or G71 GPU to make use of the library, except for NEON only functions.

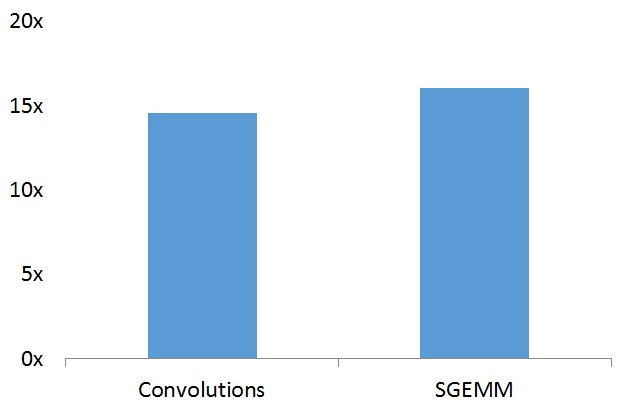

In order to showcase their new library, ARM compared its performance to OpenCV library on Huawei Mate 9 smartphone with HiSilicon Kirin 960 processor with an ARM Mali G71MP8 GPU.

Even with some NEON acceleration in OpenCV, Convolutions and SGEMM functions are around 15 times faster with the ARM Compute library. Note that ARM selected a hardware platform with one of their best GPU, so while it should still be faster on other OpenCL capable ARM GPUs the difference will be lower, but should still be significantly, i.e. several times faster.

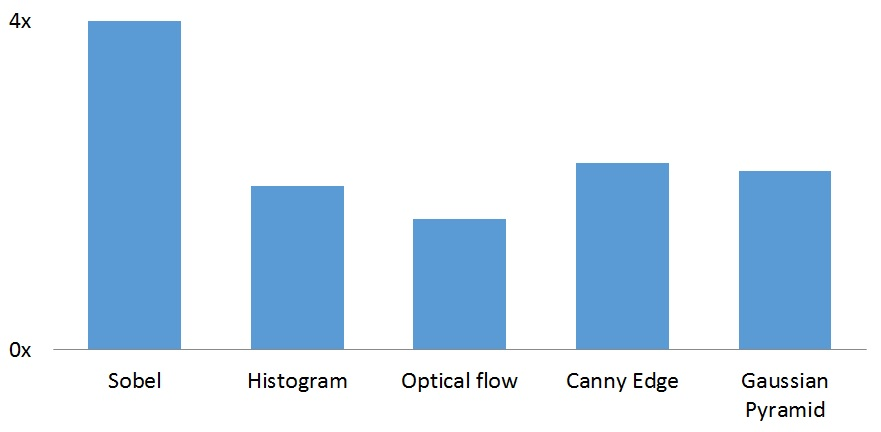

The performance boost in other function is not quite as impressive, but the compute library is still 2x to 4x faster than OpenCV.

While the open source release was just about three weeks ago, the ARM Compute library has already been utilized by several embedded, consumer and mobile silicon vendors and OEMs better it was open sourced, for applications such as 360-degree camera panoramic stitching, computational camera, virtual and augmented reality, segmentation of images, feature detection and extraction, image processing, tracking, stereo and depth calculation, and several machine learning based algorithms.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.

Support CNX Software! Donate via cryptocurrencies, become a Patron on Patreon, or purchase goods on Amazon or Aliexpress