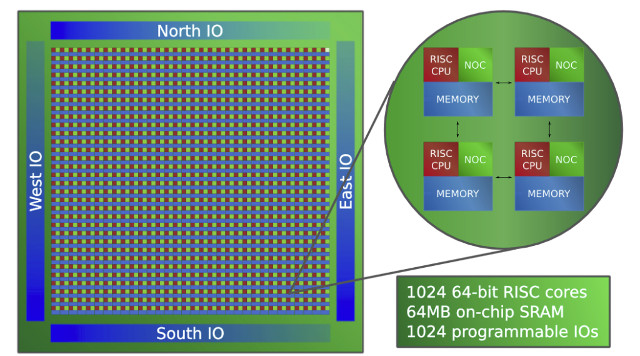

The Parallella project’s goal is to “democratize access to parallel computing by offering affordable open source hardware platforms and development tools”, and it has already done so with its very first $99 “Supercomputer” board, which combines a Xilinx Zynq FPGA + ARM SoC with the company’s Epiphany-III 16-core coprocessor. The company has since moved beyond its 64-core Epiphany-IV, taping out the 1024-core Epiphany-V processor last October.

Epiphany-V main features and specifications:

- 1024 64-bit RISC processors

- 64-bit memory architecture

- 64-bit and 32-bit IEEE floating point support

- 64 MB of distributed on-chip SRAM

- 1024 programmable I/O signals

- 3x 136-bit wide 2D mesh NoCs (networks-on-chip)

- 2052 separate power domains

- Support for up to one billion shared memory processors

- Support for up to one petabyte of shared memory (That’s 1,000,000 gigabytes)

- Binary compatibility with Epiphany III/IV chips

- Custom ISA extensions for deep learning, communication, and cryptography

- TSMC 16FF process

- 4.56 billion transistors, 117 mm² silicon area
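A few of the figures in the list above can be cross-checked with simple arithmetic. The short Python sketch below is only an illustration: the function names are made up for this example, it assumes the 64 MB of SRAM is split evenly across the 1024 cores and counted in binary megabytes, and it uses decimal units for the petabyte conversion, as the list does.

```python
# Figures quoted in the specification list above.
CORES = 1024
SRAM_BYTES = 64 * 1024**2      # 64 MB, assuming binary megabytes
TRANSISTORS = 4.56e9
DIE_AREA_MM2 = 117


def per_core_sram_kb(sram_bytes: int = SRAM_BYTES, cores: int = CORES) -> float:
    """SRAM per core in KB, assuming an even split across all cores."""
    return sram_bytes / cores / 1024


def transistor_density_m_per_mm2(
    transistors: float = TRANSISTORS, area_mm2: float = DIE_AREA_MM2
) -> float:
    """Average transistor density in millions of transistors per mm²."""
    return transistors / area_mm2 / 1e6


def petabyte_in_gigabytes() -> int:
    """One petabyte expressed in gigabytes, using decimal (SI) units."""
    return 10**15 // 10**9


if __name__ == "__main__":
    print(f"SRAM per core: {per_core_sram_kb():.0f} KB")
    print(f"Transistor density: {transistor_density_m_per_mm2():.1f} M/mm²")
    print(f"1 PB = {petabyte_in_gigabytes():,} GB")
```

Under those assumptions, each core gets 64 KB of local SRAM and the die averages roughly 39 million transistors per mm².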

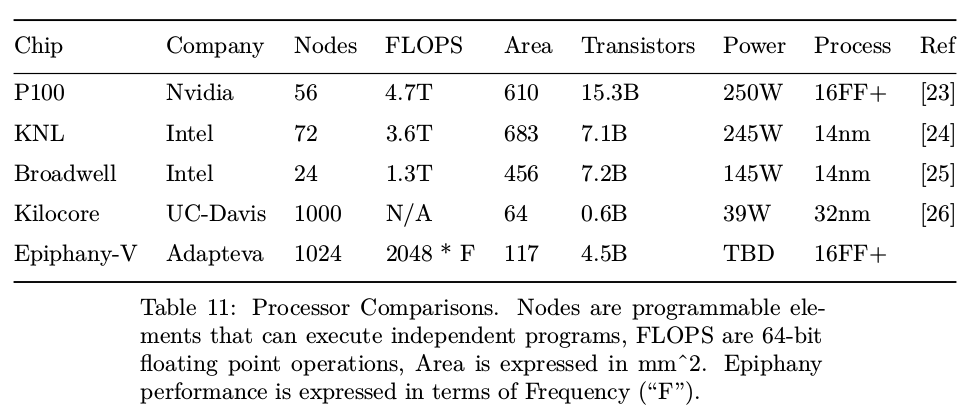

With its 4.5 billion transistors, the chip has 36% more transistors than Apple’s A10 processor at about the same die size. Compared to other HPC processors, such as the NVIDIA P100 or UC-Davis Kilocore, it offers up to 80x better processor density and up to a 3.6x advantage in memory density.
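The 36% figure can be reproduced with a quick calculation. Note that the A10 transistor count used below (3.3 billion) does not appear in this article; it is an assumption based on Apple’s publicly quoted number, and the helper name is purely illustrative.

```python
EPIPHANY_V_TRANSISTORS = 4.5e9   # rounded figure used in the paragraph above
APPLE_A10_TRANSISTORS = 3.3e9    # assumption: not stated in this article


def percent_more(a: float, b: float) -> float:
    """Return how many percent larger a is than b."""
    return (a / b - 1) * 100


if __name__ == "__main__":
    gain = percent_more(EPIPHANY_V_TRANSISTORS, APPLE_A10_TRANSISTORS)
    print(f"Epiphany-V has about {gain:.0f}% more transistors")
```

Using the more precise 4.56 billion figure from the specification list instead would give a slightly higher percentage.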

You’ll find more technical details, minus power consumption and frequency numbers, which are not available yet, in the Epiphany-V technical paper.

The first chips taped out at TSMC are expected back between the middle and end of Q1 2017.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager and starting to write daily news and reviews full time later in 2011.
