So I’ve just received a Roseapple Pi board, and I finally managed to download the Debian and Android images from the Roseapple Pi download page. It took me nearly 24 hours, as the Debian 8.1 image is nearly 2GB and neither the Google Drive nor the Baidu download links were reliable, so I had to try a few times, and after several failed attempts it worked (mornings are usually better).

One fix is to host the files on better servers such as Mega, at least in my experience. Another way to reduce download time, and possibly bandwidth costs, is to provide a smaller image: not a minimal image, but one with exactly the same files and functionality, optimized for compression.

I followed three main steps to reduce the firmware size from 2GB to 1.5GB on a computer running Ubuntu 14.04, but other Linux distributions should work too:

- Fill unused space with zeros using sfill (or fstrim)

- Remove unallocated and unused space in the SD card image

- Use the best compression algorithm possible. The Roseapple Pi image was compressed with bzip2, but LZMA tools like 7z usually offer a better compression ratio

This can be applied to any firmware, and sfill is usually the most important part.
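To get a feel for why zero-filling matters, here is a minimal sketch that is not part of the procedure itself. It assumes random bytes are a fair stand-in for the leftovers of deleted files, and it uses gzip only because it is available almost everywhere. The file names are made up for the illustration.

```shell
# Toy demonstration: stale data in "free" blocks versus zeroed blocks.
# Assumption: random bytes stand in for the remains of deleted files.
workdir=$(mktemp -d)

head -c 1048576 /dev/urandom > "$workdir/stale.bin"    # 1 MiB of incompressible junk
head -c 1048576 /dev/zero    > "$workdir/zeroed.bin"   # 1 MiB of zeros

# Compress each file to stdout and count the resulting bytes.
stale_size=$(gzip -9 -c "$workdir/stale.bin" | wc -c | tr -d ' ')
zeroed_size=$(gzip -9 -c "$workdir/zeroed.bin" | wc -c | tr -d ' ')

echo "stale blocks compress to:  $stale_size bytes"
echo "zeroed blocks compress to: $zeroed_size bytes"

rm -rf "$workdir"
```

The stale file barely shrinks, while the zeroed one collapses to almost nothing. That is the whole reason unused space is overwritten before compressing an image.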

Let’s install the required tools first:

```
sudo apt-get install secure-delete p7zip-full util-linux gdisk
```

We’ll now check the current firmware file size, and decompress it:

```
ls -lh debian-s500-20151008.img.bz2
-rw------- 1 jaufranc jaufranc 2.0G Oct 13 11:45 debian-s500-20151008.img.bz2
bzip2 -d debian-s500-20151008.img.bz2
ls -lh debian-s500-20151008.img
-rw------- 1 jaufranc jaufranc 7.4G Oct 13 11:49 debian-s500-20151008.img
```

Good, so the firmware image is 7.4GB. Since it’s an SD card image, you can check the partitions with fdisk:

```
fdisk -l debian-s500-20151008.img

WARNING: GPT (GUID Partition Table) detected on 'debian-s500-20151008.img'! The util fdisk doesn't support GPT. Use GNU Parted.


Disk debian-s500-20151008.img: 7860 MB, 7860125696 bytes
202 heads, 56 sectors/track, 1357 cylinders, total 15351808 sectors
Units = sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disk identifier: 0x00000000

                   Device Boot      Start         End      Blocks   Id  System
debian-s500-20151008.img1               1    14680063     7340031+  ee  GPT
```

Normally fdisk will show the different partitions, each with a start offset that you can use to mount a loop device and run sfill. But this image is a little different, as it uses GPT. fdisk recommends GNU Parted (gparted being its graphical front end), but I’ve found out gdisk is also an option.

```
sudo gdisk debian-s500-20151008.img
GPT fdisk (gdisk) version 0.8.8

Partition table scan:
  MBR: protective
  BSD: not present
  APM: not present
  GPT: present

Found valid GPT with protective MBR; using GPT.

Command (? for help): p
Disk debian-s500-20151008.img: 15351808 sectors, 7.3 GiB
Logical sector size: 512 bytes
Disk identifier (GUID): 4DE7340C-7BA3-4508-B556-E774FF755B2B
Partition table holds up to 128 entries
First usable sector is 34, last usable sector is 14680030
Partitions will be aligned on 2048-sector boundaries
Total free space is 34814 sectors (17.0 MiB)

Number  Start (sector)    End (sector)  Size       Code  Name
   1            2048           32734   15.0 MiB    8300
   2           32768          106496   36.0 MiB    EF00
   3          139264        14680030   6.9 GiB     8300

Command (? for help):
```

That’s great. There are two small partitions in the image, and a larger 6.9 GiB one starting at sector 139264. I mounted it, and filled the unused space with zeros once as follows:

```
mkdir mnt
sudo mount -o loop,offset=$((512*139264)) debian-s500-20151008.img mnt
sudo sfill -z -l -l -f mnt
sudo umount mnt
```
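The offset passed to mount is simply the partition's start sector multiplied by the sector size. As a small sketch using the numbers from the gdisk listing (the variable names are mine), the same arithmetic also gives the partition length in bytes, which could be passed as the loop device's `sizelimit` option to keep the mount from reaching past the partition:

```shell
# Values copied from the gdisk listing for partition 3.
sector_size=512
start_sector=139264
end_sector=14680030        # gdisk end sectors are inclusive

# Byte offset of the partition inside the image file.
offset=$((start_sector * sector_size))

# Partition length in bytes (inclusive range, hence the +1).
size=$(( (end_sector - start_sector + 1) * sector_size ))

echo "offset=$offset sizelimit=$size"
```

With these values the mount options would read `-o loop,offset=$offset,sizelimit=$size`. The mount command above works without `sizelimit`, so treat it as optional.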

The same procedure could be repeated on the other partitions, but since they are small, the gains would be minimal. Time to compress the firmware with 7z with the same options I used to compress a Raspberry Pi minimal image:

```
7z a -t7z -m0=lzma -mx=9 -mfb=64 -md=32m -ms=on debian-s500-20151008.img.7z debian-s500-20151008.img
```

After about 20 minutes, the result is a file that is about 500 MB smaller.

```
ls -lh debian-s500-20151008.img.7z
-rw-rw-r-- 1 jaufranc jaufranc 1.5G Oct 13 14:20 debian-s500-20151008.img.7z
```

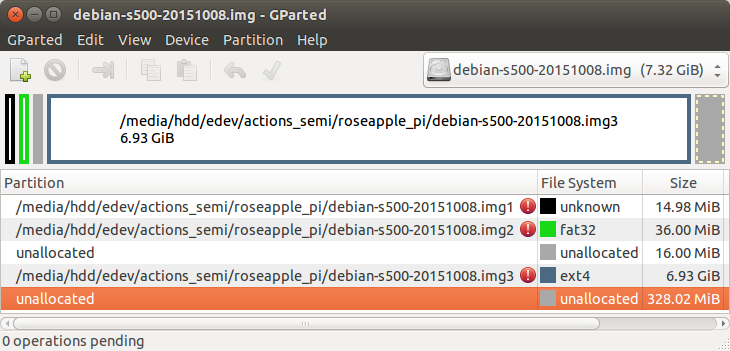

Now if we run gparted, we’ll find 328.02 MB of unallocated space at the end of the SD card image.

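Some more simple maths… The end sector of the EXT-4 partition is 14680030, which means the actual useful size is 14680030 * 512 = 7516175360 bytes, but the SD card image is 7860125696 bytes long. Strictly speaking, the end sector is inclusive, so cutting at this size drops the last sector of the partition as well as the backup GPT, which should sit in the 33 sectors after it; truncating at 14680064 * 512 = 7516192768 bytes instead would keep both, at a cost of only 17 KB. Let’s cut the fat further, and compress the image again.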

```
truncate -s 7516175360 debian-s500-20151008.img
7z a -t7z -m0=lzma -mx=9 -mfb=64 -md=32m -ms=on debian-s500-20151008-sfill-remove_unallocated.img.7z debian-s500-20151008.img
```

And now let’s see the difference:

```
ls -l *.7z
-rw-rw-r-- 1 jaufranc jaufranc 1570931970 Oct 13 14:20 debian-s500-20151008.img.7z
-rw-rw-r-- 1 jaufranc jaufranc 1570849097 Oct 13 14:50 debian-s500-20151008-sfill-remove_unallocated.img.7z
```

Right… the file is indeed smaller, but it only saved a whopping 82,873 bytes, which is hardly worth it, and means the unallocated space in that SD card image must have been filled with zeros or other identical bytes.
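For anyone who wants to double-check the numbers, the arithmetic can be reproduced in the shell. This is only a sanity check on the figures quoted above; the variable names are mine, and rounding to two decimals is an assumption about how gparted displays sizes.

```shell
sector_size=512
end_sector=14680030          # end of partition 3, from gdisk
image_bytes=7860125696       # image size reported by fdisk

# Size used for truncate, and the tail that gets cut off.
cut_bytes=$((end_sector * sector_size))
tail_bytes=$((image_bytes - cut_bytes))

# Tail size in hundredths of a MiB, rounded to nearest.
tail_mib_x100=$(( (tail_bytes * 100 + 524288) / 1048576 ))

printf 'truncate size:    %d bytes\n' "$cut_bytes"
printf 'unallocated tail: %d.%02d MiB\n' $((tail_mib_x100 / 100)) $((tail_mib_x100 % 100))

# Archive sizes copied from the ls -l output.
before=1570931970
after=1570849097
saved=$((before - after))
printf 'saved by truncating: %d bytes\n' "$saved"
```

Roughly 328 MiB of raw image shrinking to under 100 KB of archive is what you would expect if that tail was already a long run of identical bytes.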

There are also other tricks to decrease the size, such as clearing caches or running apt-get autoremove, but these are system-specific, and do remove some existing files.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.
